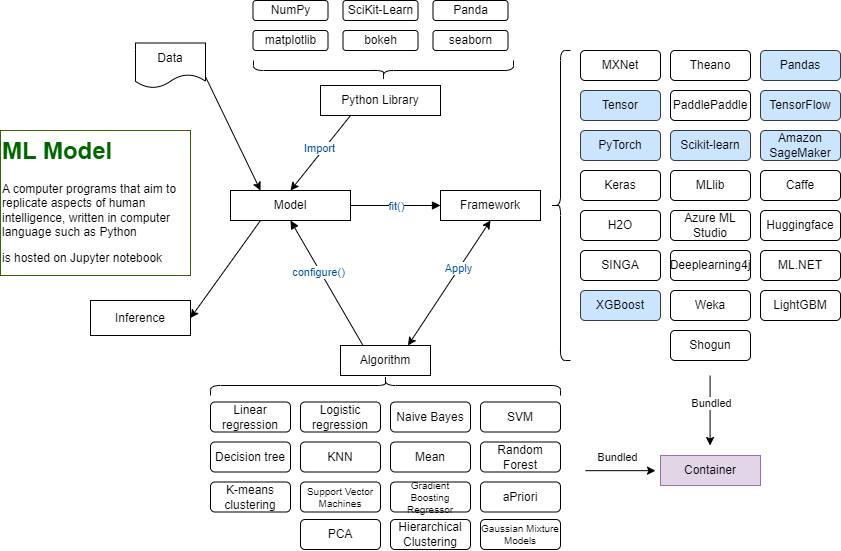

Model:

A mathematical representation of the learning process: a trained algorithm that identifies patterns in data without hand-written rules (the patterns are learned during ML training) and is then applied to new data to produce predictions.

Dataset:

The data collected and used to train, evaluate, and test models. It is gathered from many sources, then transformed and pre-processed for use in machine learning training. Example data type: a corpus, such as a collection of newspaper articles.

Data Labeling:

The label is the target attribute of the data: the value in the dataset that the machine learning analysis is trying to predict. The label is either provided (supervised) or calculated (unsupervised), and a trained model predicts labels on new datasets. Labeled data is also called Ground Truth data.

Datapoint:

A single observation plotted as a coordinate that pairs a feature value (x-axis) with its label (y-axis): the point (x, f(x)) on the grid.

Algorithm:

Defines the structure of an ML model. It is a set of rules or processes used by an AI system to conduct tasks, most often to discover new insights and patterns in data or to predict output values from a given set of input variables. Algorithms enable machine learning (ML) models to learn, and they use parameters to tune the results.

Framework:

Interfaces that allow data scientists and developers to build and deploy machine learning models faster and more easily.

Features (embedding):

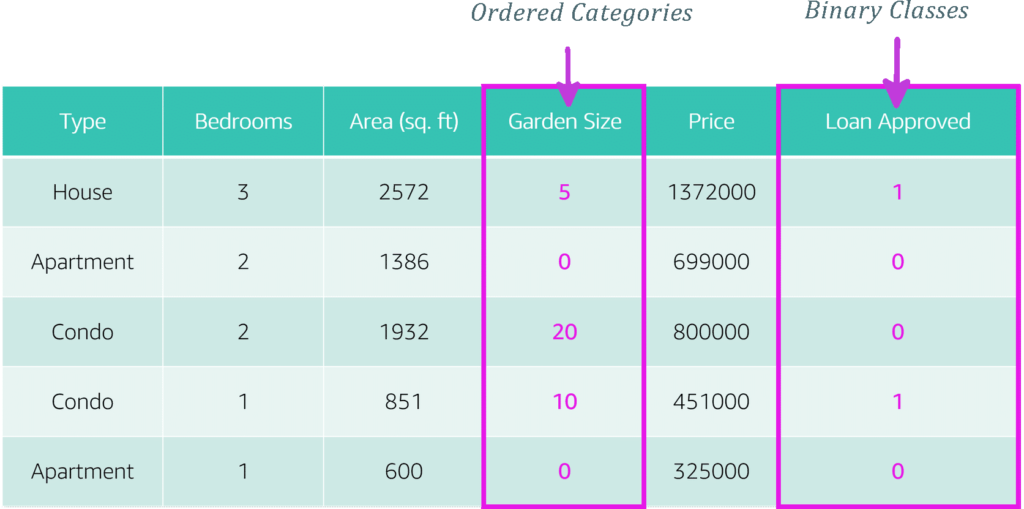

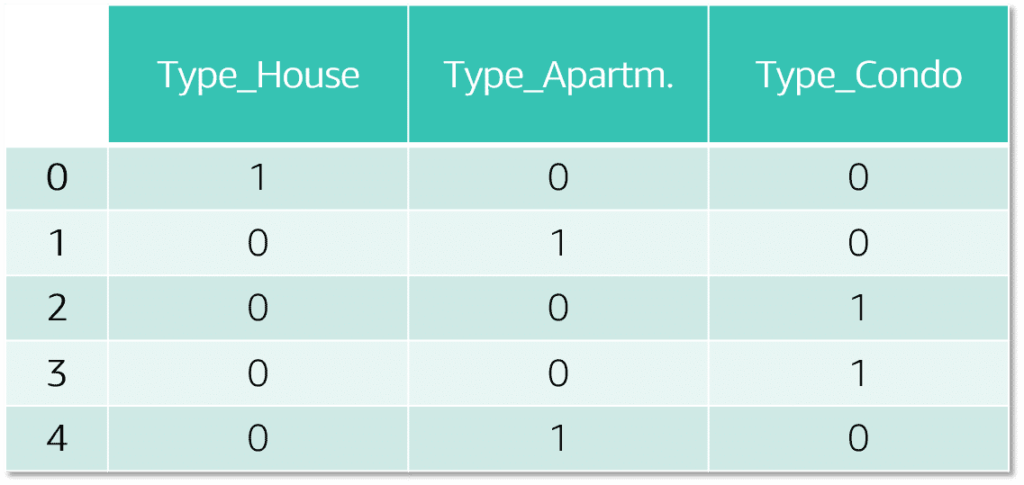

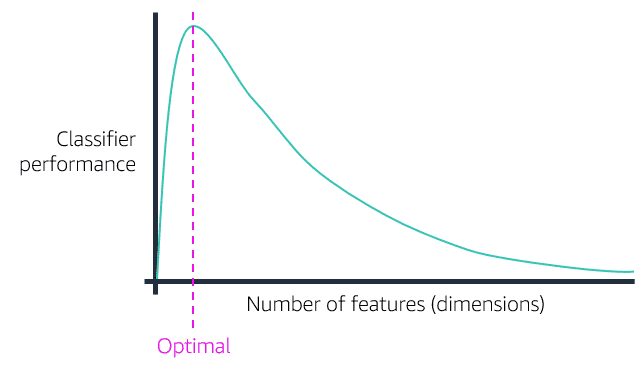

The parts of the dataset that are important for determining the accuracy of the outcome. Each is stored in a named feature column and plotted as variations along the x-axis; examples are product color, month of the year, and stock market value. Features are either [Categorical:= Quality | Continuous:= Quantity]. They are also referred to as embeddings, and the number of features in an observation is its dimension.

Observations:

The rows of the dataset; each row consists of the values of each feature.

Prediction (Target value, or label, Estimate):

The estimated value calculated by running the model against real data. It is stored in a named prediction column and plotted on the y-axis, for example sales outcome or member enrollments.

Context: Weighted features in determining the accuracy.

Where:

x: feature

a: weight – importance

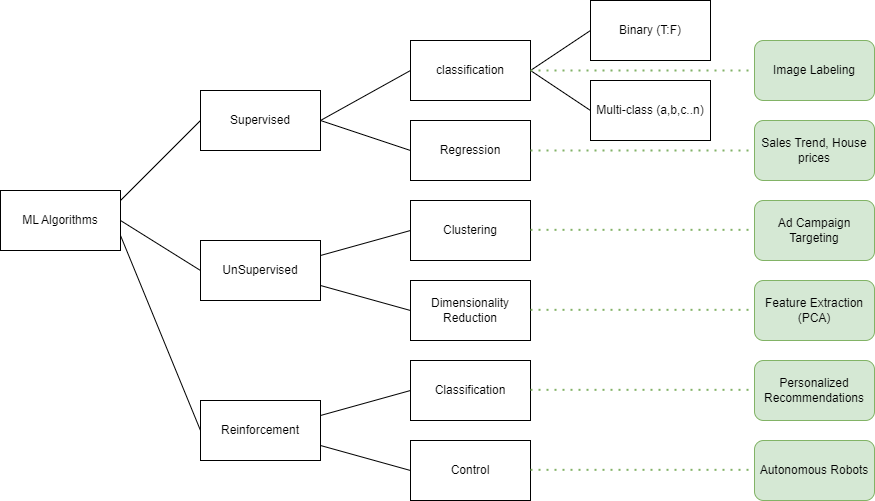

ML Algorithms


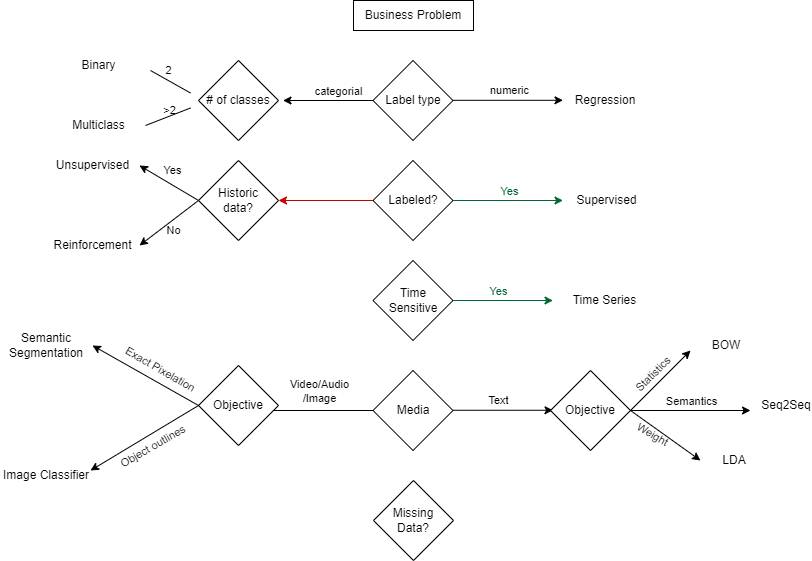

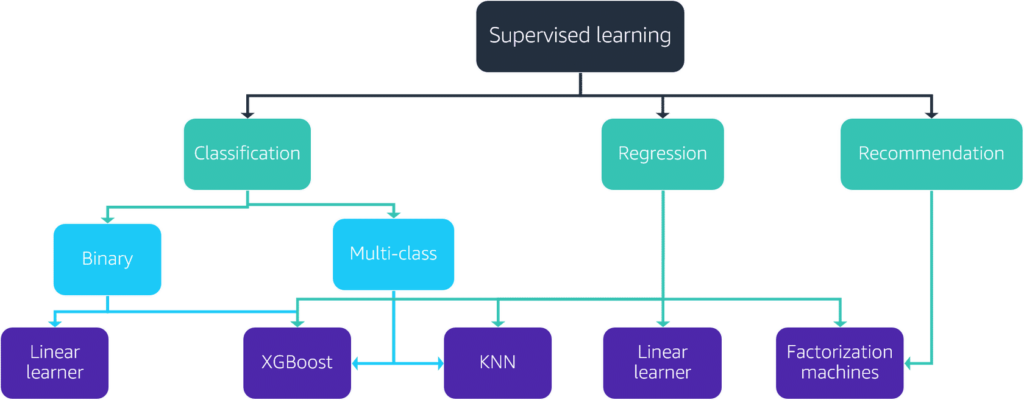

Supervised:

Known inputs and outputs are used to generalize to future outputs. A teacher shows the answers: the data is labeled and the model learns by example. Types: [Classification [Binary mapping (T : F) | Multi-class mapping (a, b, c, …, n)] | Regression (no class mapping; predicts a numeric value)]


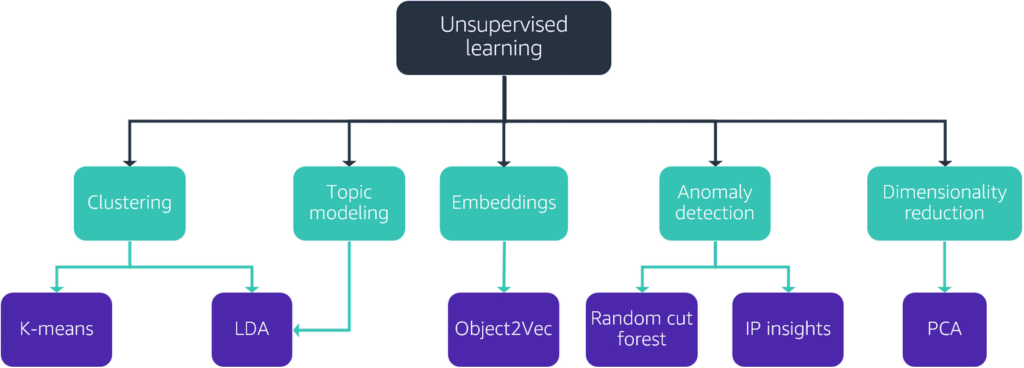

Unsupervised:

Unknown inputs/outputs. The algorithm finds patterns and creates labels automatically, producing clustered data by labeling patterns in the data. Typical uses are clustering and anomaly detection.

Clustering Algorithm: groups data into different clusters based on similarities in features.

Anomaly Detection:


Reinforcement:

An agent (the driver) interacts with the environment and learns to take actions that maximize rewards. It improves continuously through trial and error, using feedback from previous actions in the form of rewards and penalties.


Deep Learning:

Based on artificial neurons organized in an Artificial Neural Network (ANN). AI -> rules are provided by the programmer. ML -> analyzes patterns. DL -> like a human, it is given basic rules and then applies an iterative, complex approach over multiple (100s of) layers to recognize patterns more complex than ML can.

Each layer summarizes and feeds information to the next layer, ultimately producing the final output.

Deep Learning Computer Vision

ILSVRC ImageNet Large Scale Visual Recognition Challenge


ML Model

Program file python.py

# this is comment line

import boto3

#Variables

var = "String variable"

var2 = 'String "Variable"'

age=32

price=5.99

list = ["item1","item2","item3"]

dictionary = {"key1":"value1","key2":"value2"}  # ... more key/value pairs

# A function that prints hello world

def hello_world():
    print('hello world')
    return "Welcome !"

# This line calls (runs) the function

# Indentation defines the code level: 0 is the main program, and so on

greeting = hello_world()

print(var, var2, age, var2 + str(age), list[1], dictionary["key1"],greeting)

About DLAMI and Jupyter Notebook Setup


Identify DLAMI & Launch Instance

Deep Learning Amazon Machine Image (DLAMI). Find the latest image ID:

$ aws ec2 describe-images --region us-west-2 --owners amazon \
    --filters 'Name=name,Values=Deep Learning AMI (Ubuntu 18.04) Version ??.?' 'Name=state,Values=available' \
    --query 'reverse(sort_by(Images, &CreationDate))[:1].ImageId' --output text

ami-0a2b85e15b7c0ac34 <-- Ubuntu image

Configure and Install environments:

Conda is an environment management tool that allows creation and use of isolated environments for ML work.

$ sudo yum -y update
$ conda env list <-- lists all available environments
$ source activate <env> <-- ex. tensorflow2_p310
$ conda deactivate
$ nvcc --version <-- install if it doesn't exist
$ jupyter notebook password <-- set up a password for Jupyter Notebook, stored in /home/ubuntu/.jupyter/jupyter_notebook_config.json
$ mkdir ~/ssl && cd ~/ssl
$ openssl req -x509 -nodes -days 365 -newkey rsa:2048 -keyout jupyter.key -out jupyter-cert.pem
$ jupyter notebook --certfile=~/ssl/jupyter-cert.pem --keyfile ~/ssl/jupyter.key <-- Ctrl-C to shut down, listens on localhost:8888

Connect to Jupyter Notebook Server Putty or SSH

AWS ML Tools


Jupyter Notebook: Document Repository and runner


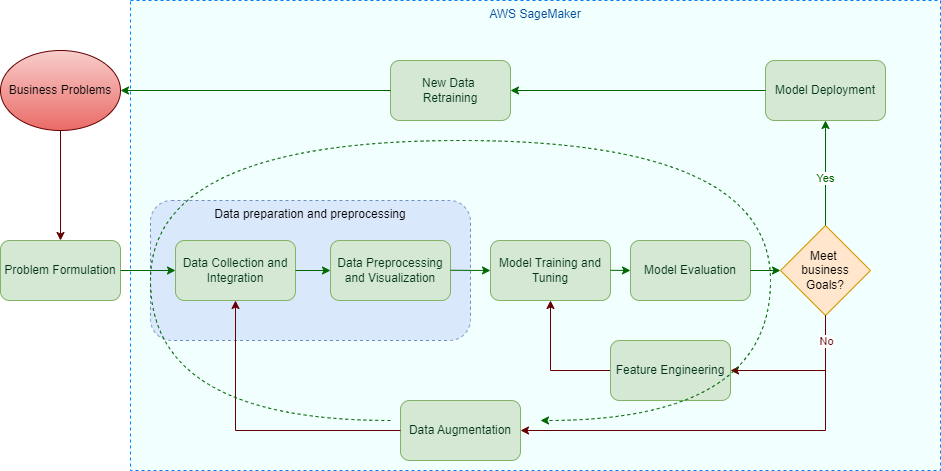

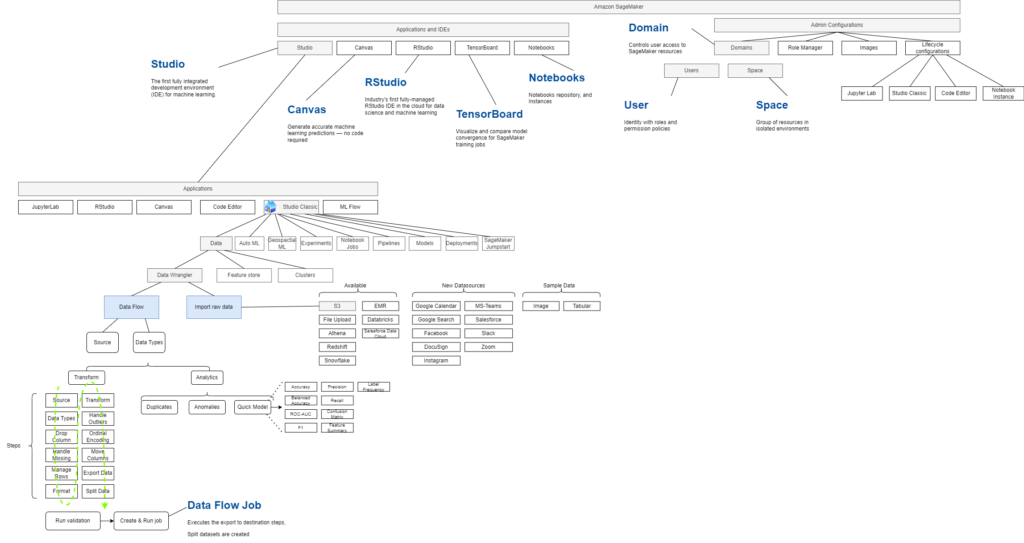

SageMaker: Fully managed service to ML pipeline


Bedrock

ML Frameworks & Libraries

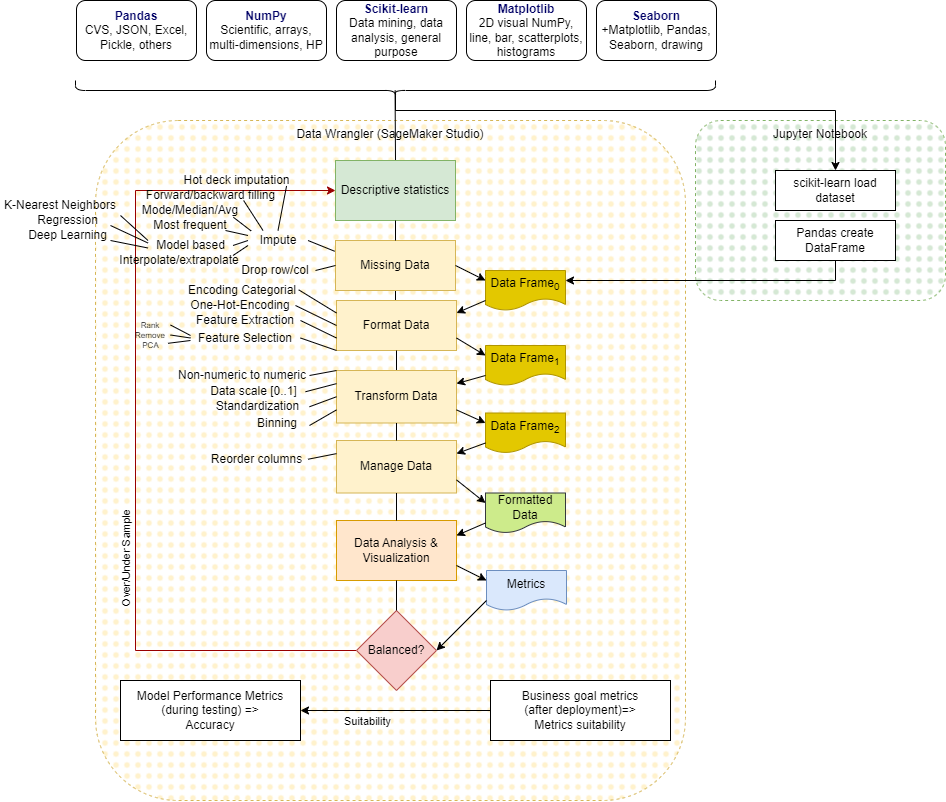

- Pandas: Transforms unstructured data into rows and columns in a tabular format called “DataFrames”, which consist of Series (columns that hold any data type) and indexes. Used to drop missing values

- NumPy: Formatting multi-dimensional arrays

- Scikit-Learn: Data mining and analysis, used to impute missing values [ mean, median ]

- Matplotlib: Data Visualization

- Seaborn: Data Visualization integrated with Pandas

Setup the environment

- Download and install Python (Windows option)

- Install AWS CLI v2

- Install VSCode and python extensions AWS Toolkit

- Virtual Environment

$ python -m venv my_venv
$ source my_venv/bin/activate | C:\> my_venv\Scripts\activate.bat | PS> .\my_venv\Scripts\Activate.ps1
$ deactivate <-- exit my_venv

- VSCode terminal -> install python libraries

$ sudo apt install python3-pip
$ pip3 install [boto3|numpy|pandas]
$ pip freeze > requirements.txt
$ pip install -r requirements.txt

- Configure AWS credentials

$ aws configure

High level vs Low level API calls

import boto3

# low level client map 1:1 to API calls

# dynamodb = boto3.client("dynamodb")

# high level simplified

dynamodb = boto3.resource('dynamodb')

labCustomers = dynamodb.Table('LabCustomers')

def lambda_handler(event, context):
    print(event)
    id = event["queryStringParameters"]["id"]
    firstname = event["queryStringParameters"]["firstname"]
    lastname = event["queryStringParameters"]["lastname"]

    ####
    # Challenge: put the customer id, firstname, last name into the dynamo db table
    ####
    # Your code goes here

    # low level client calls
    # response = dynamodb.put_item(
    #     TableName="LabCustomers",
    #     Item={
    #         "ID": {"S": id},
    #         "Firstname": {"S": firstname},
    #         "Lastname": {"S": lastname}
    #     })

    # high level resources call
    response = labCustomers.put_item(
        Item={
            "ID": id,
            "Firstname": firstname,
            "Lastname": lastname
        })

    return {"result": "Saved"}

Train the model commands

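# Install libraries (preinstalled on SageMaker Studio); with an older pandas, install "s3fs<=0.4" instead of s3fs

%pip install sagemaker boto3 pandas s3fs

# Clear all variables from the namespace without prompting; restart the kernel separately if packages were upgraded

%reset -f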

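# Import dependencies

import time

import boto3

import pandas as pd

import sagemaker

from sagemaker.inputs import TrainingInput

from sagemaker.debugger import Rule, rule_configs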

:

# Load data

df = pd.read_csv("s3://<bucket>/<prefix>/<object>.csv")

# Set up the TrainingInput objects

train_input = TrainingInput(train_path, content_type='text/csv')

validation_input = TrainingInput(validation_path, content_type='text/csv')

# Print data

df

# Configure the estimator

# Rule and rule_configs come from sagemaker.debugger
xgb_model = sagemaker.estimator.Estimator(
    image_uri = container,
    role = role,
    instance_count = 1,
    instance_type = 'ml.m5.xlarge',
    output_path = output_path,
    sagemaker_session = sagemaker_session,
    rules=[
        Rule.sagemaker(rule_configs.create_xgboost_report())
    ])

# Configure Hyperparameters

xgb_model.set_hyperparameters(
    max_depth = 5,
    eta = 0.2,
    gamma = 4,
    min_child_weight = 6,
    subsample = 0.7,
    verbosity = 0,
    objective = 'binary:logistic',
    num_round = 800)

# Run the training job

xgb_model.fit(
    {
        "train": train_input,
        "validation": validation_input
    },
    wait=True)  # Takes time

# Evaluate the model

%%capture

time.sleep(500)  # needs "import time"; the argument is in seconds (about 8 minutes), the report takes between 3-8 minutes

rule_output_path = xgb_model.output_path + "/" + xgb_model.latest_training_job.job_name + "/rule-output"

! aws s3 ls {rule_output_path} --recursive

# Takes time; wait until the report is generated

! aws s3 cp {rule_output_path} ./ --recursive

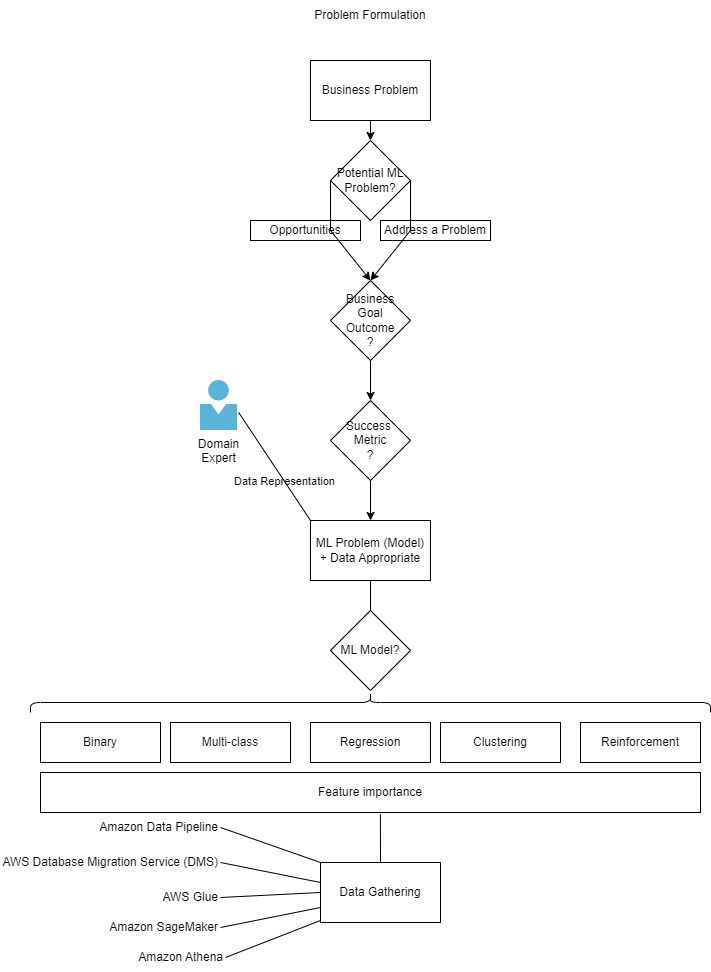

Problem Formulation

- Define the business problem: such as inaccurate demand prediction

- Identify the business goal or outcome: such as keeping inventory low

- Develop quantitative metrics: used to measure success. Model performance metrics (such as accuracy) are compared against business goal metrics measured on the deployed model in the real world (such as monthly unsold inventory < 15%), which helps identify inappropriate performance metrics

- Convert to an ML problem: such as each product's inventory matches the sales figures

- Choose the ML model: such as a regression model

- Understand the data: access to the data, amount of data needed, the desired solution, and the centralized data repository

- Domain Expert: when actual values are higher than ML observations

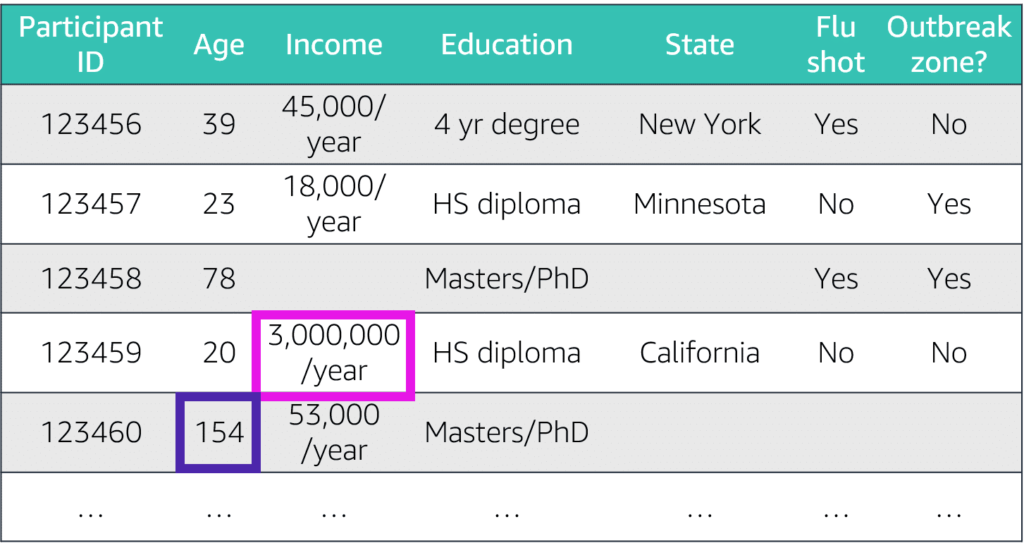

Data Quality:

Identify missing data figures

Identify features and label data

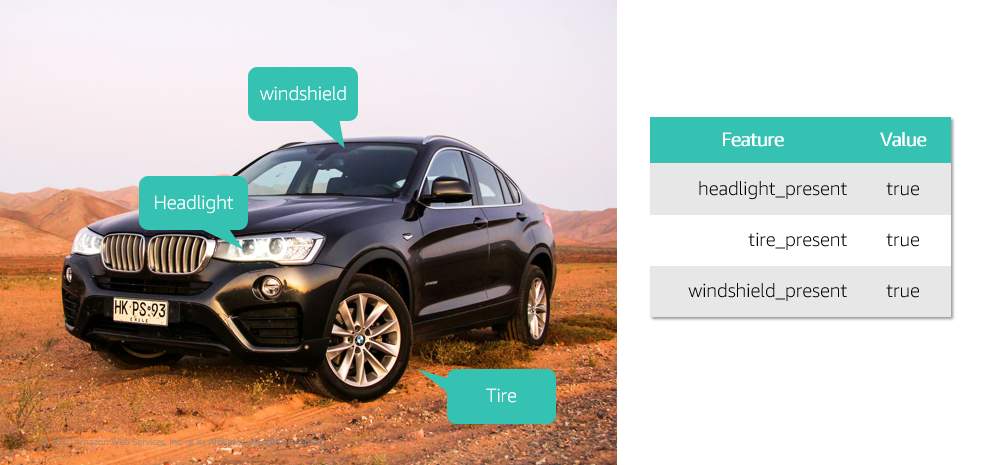

A feature is an attribute that can be used to help identify patterns and predict future answers, such as Credit Card (#, Transaction date) or Car (doors, style, color). Labeled data is data for which the answer is already known as a fact, such as Credit Card (fraud, not fraud) or Car (black, sedan, 4 wheels).

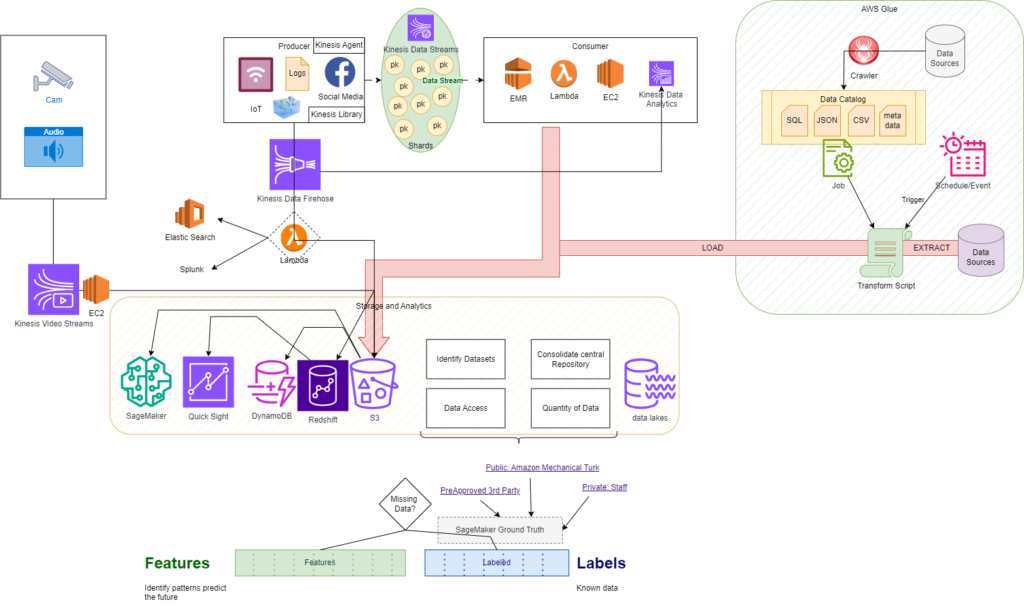

Data Collection and Integration

Ensure your raw data is in one central, accessible place:

Data Collection

Data Lakes:

A solid foundation and single source of truth: collect all types of data [Structured, Unstructured] from various sources [Databases, File systems, Disk storage, etc.], ideally stored in S3 buckets. Retrieval can be optimized with EFS or FSx for Lustre.

AWS Kinesis Data Stream:

Max 1000 shards; per shard 1 MB/s in and 2 MB/s out (with enhanced fan-out, 2 MB/s per consumer); stores data up to 7 days

AWS Kinesis Firehose:

GB per sec, Transform data with Lambda, pre-built blueprints for common sources, Pay $$$ per volume

Amazon Managed Service for Apache Flink:

Managed service to process big data GB/s, streaming data in seconds, stateful processing, support for ML algorithms

AWS Glue:

A Glue Crawler creates a metadata repository (the Data Catalog), which allows ETL jobs to run on demand or on a schedule. Glue processes Scala and PySpark code and transforms DataRecords in DataFrames. Labeling files are schema-aware CSV files encoded in UTF-8 without a BOM (Byte Order Mark), with 2 leading columns, labeling_set_id and label; unmatched labels are assigned a new unique label.

Amazon EMR:

Elastic MapReduce: cluster-centric processing and analysis of big data sets with Apache Spark or Hadoop. A cluster consists of a Primary node (manages the cluster), Core nodes (run tasks and store data in HDFS), and Task nodes (run tasks only).

AWS Data Exchange:

Subscribes to 3rd party data providers in the AWS Marketplace and processes datagrams.

SageMaker Processing:

Processes data PySpark and Scala

Lake Formation:

Uses AWS Glue crawlers to create analytic data lakes on S3 with fine-grained permissions similar to RDBMS permissions. Uses ML algorithms to clean and classify the data. The FindMatches transform matches records across datasets that do not share a common id.

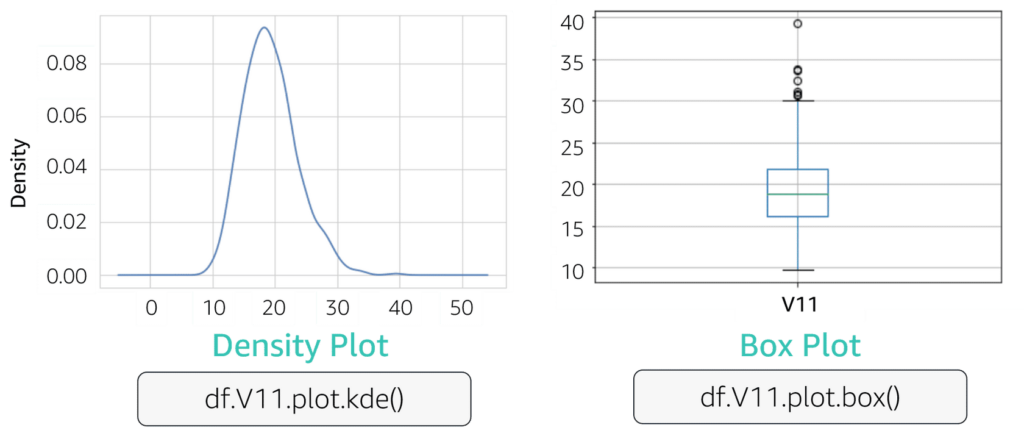

Data Preprocessing and Visualization

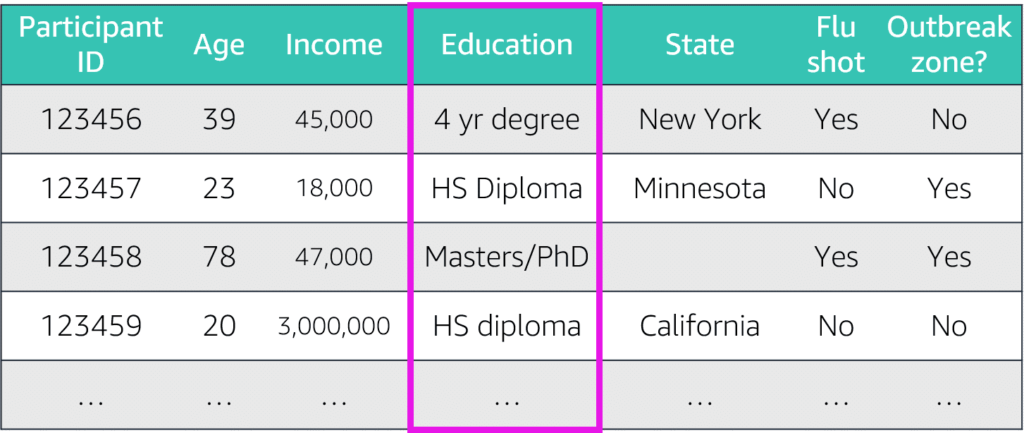

Data Cleaning:

Eliminate inconsistent data (language, format, unit of scale)

Data Preprocessing

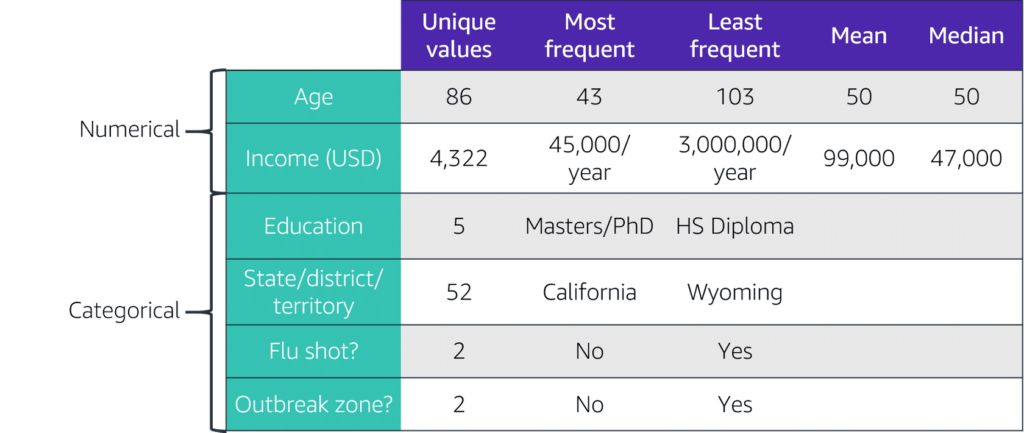

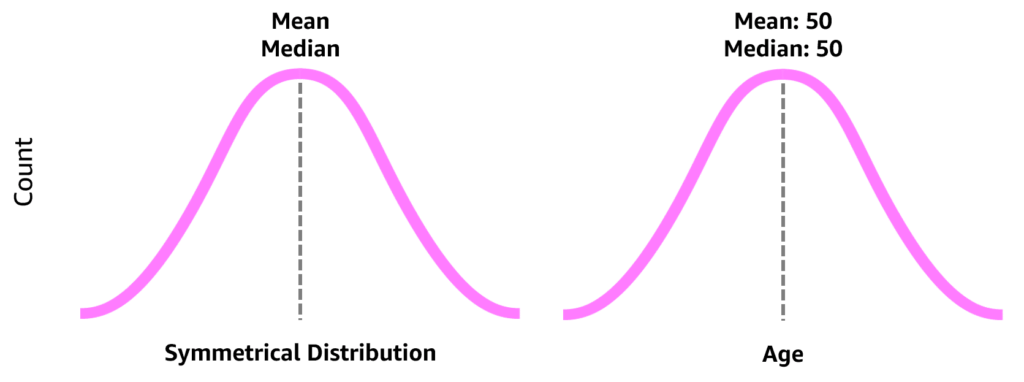

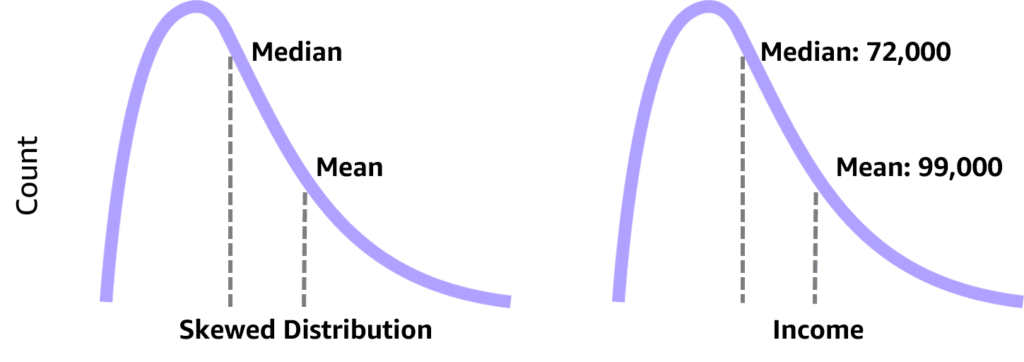

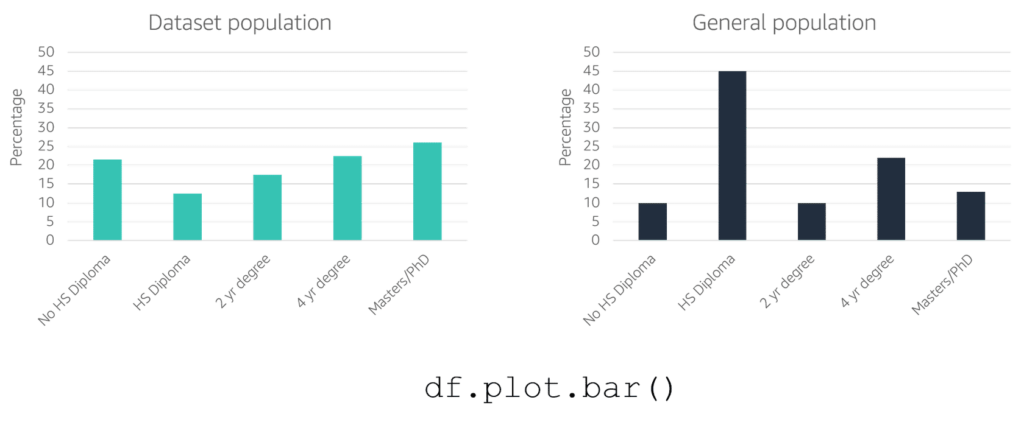

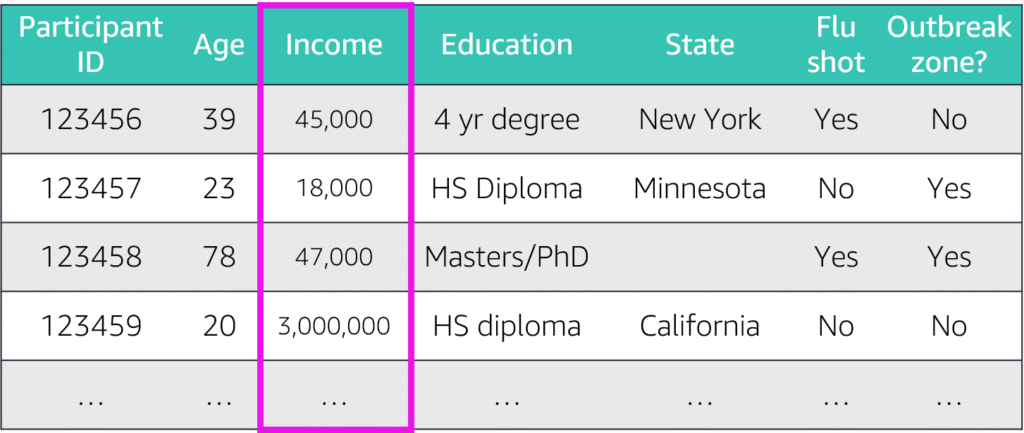

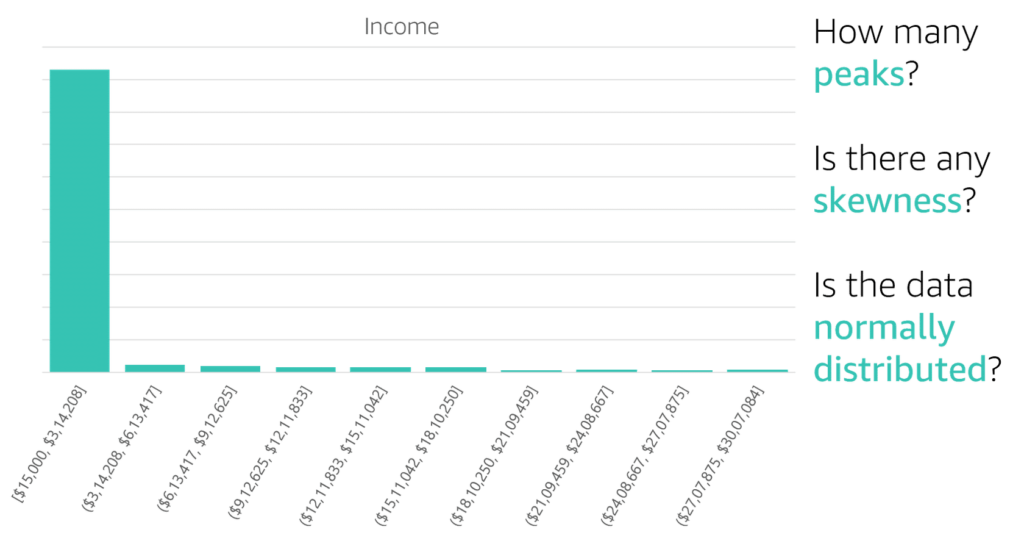

Descriptive Statistics

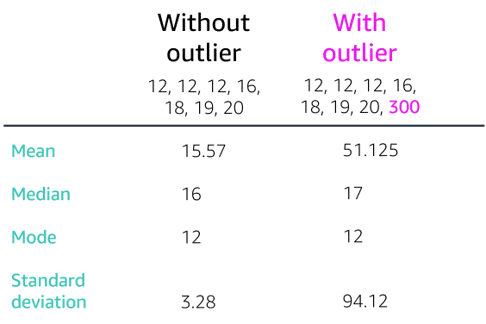

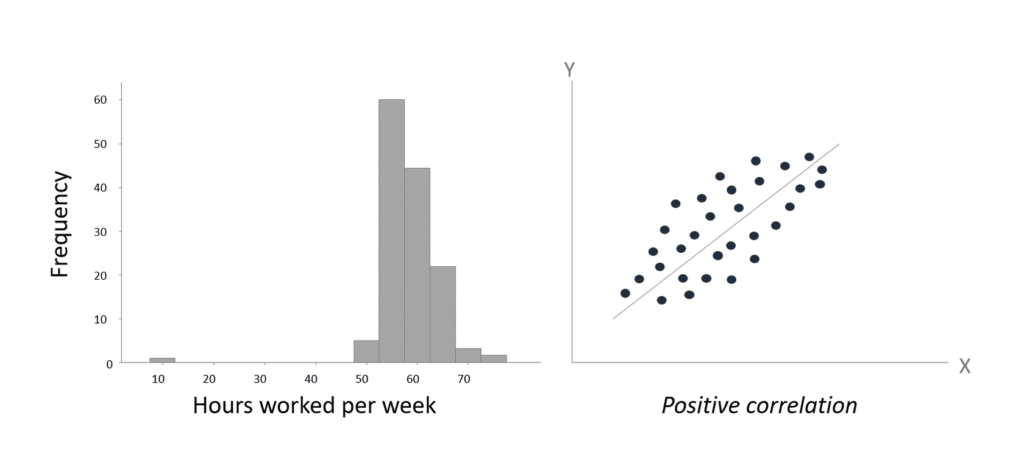

Looks into the dataset to discover imbalanced data. Use the mean for symmetric data distributions and the median for asymmetric ones. Eliminate outlier data points (artificial or natural). Drop missing data [rows:= leaves too few samples, risks overfitting | columns:= loses features, risks underfitting] or impute missing values with [ mean | median ].

Median: the middle number in a list of numbers sorted in ascending or descending order; it can be more descriptive of the data set than the average. Arrange the data points from smallest to largest. If the number of data points is odd, the median is the middle data point in the list. If the number of data points is even, the median is the average of the two middle data points in the list. Used to calculate quartiles.

Where:

q:= quartile; i:= 1, 2, 3;

n:= number of samples;

Q:= the order position of the quartile in the dataset [Q]
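The median rules and quartile positions above can be sketched in a few lines of Python. This is an illustration only: the position formula i * (n + 1) / 4 is an assumed, commonly used convention (the original formula is not shown here), and `quartile_positions` and the sample values are hypothetical.

```python
import statistics

def quartile_positions(n):
    """1-based order positions of Q1, Q2, Q3 for n sorted samples,
    assuming the common i * (n + 1) / 4 convention."""
    return [i * (n + 1) / 4 for i in (1, 2, 3)]

samples = [7, 1, 3, 9, 5, 11, 13]      # hypothetical data, odd number of points
ordered = sorted(samples)              # arrange from smallest to largest

median_odd = statistics.median(ordered)          # middle data point
median_even = statistics.median([1, 3, 5, 7])    # average of the two middle points

positions = quartile_positions(len(ordered))
# statistics.quantiles defaults to the "exclusive" method, which follows
# the same (n + 1) convention as quartile_positions above.
q1, q2, q3 = statistics.quantiles(ordered, n=4)
```

With seven sorted samples, the positions point at the 2nd, 4th, and 6th values, and Q2 coincides with the median.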

Data Visualization

Transform raw data into an understandable format and extract important features from the data

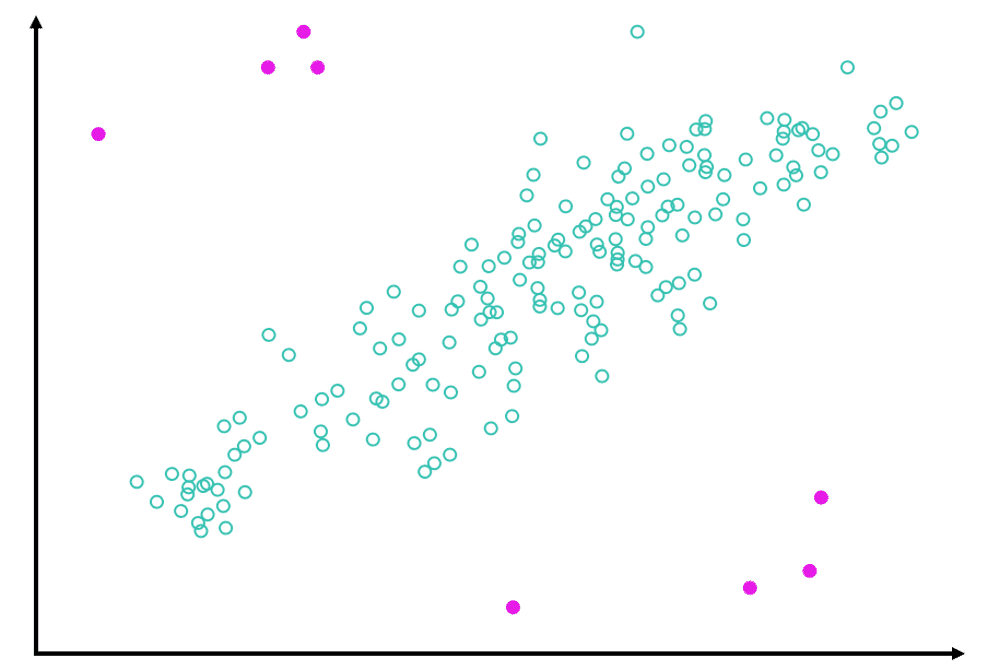

Outliers:

Values at an abnormal distance from other values; they skew the data away from the rest. An outlier can indicate that data belongs to another column and needs to be cleaned, but it can also add richness to the dataset. Outliers can make it harder to predict.

- Natural outliers: not the result of some artificial error, but reflective of some truth in the data.

- Artificial outliers: caused by artificial errors, such as data collection errors

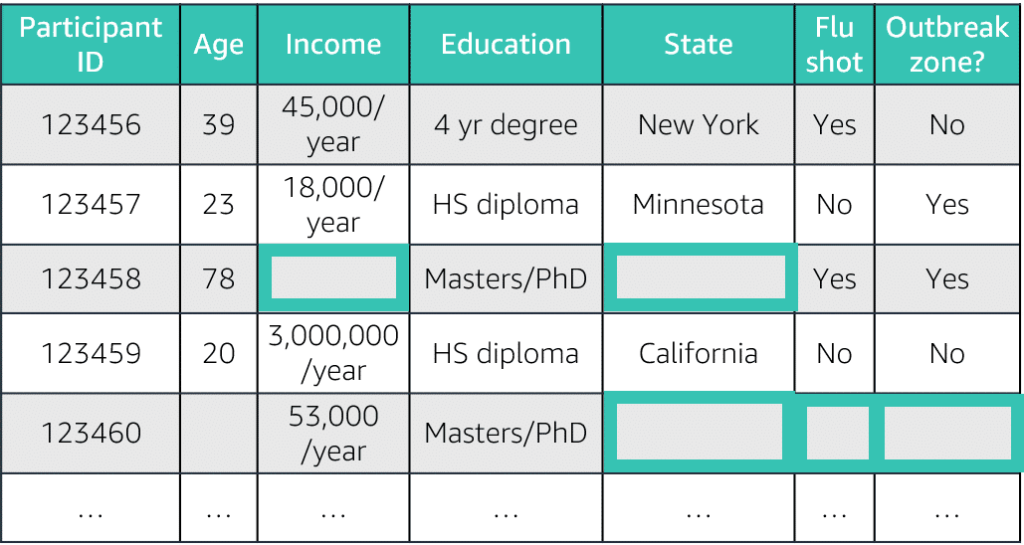

Missing Data

Data that is missing due to collection errors; filling it in requires human effort or computation. A large amount of missing data causes problems when building relationships between features.

Use the isnull() function in Pandas to count missing values, ex. df1.isnull().sum()

Use dropna() to drop rows/columns

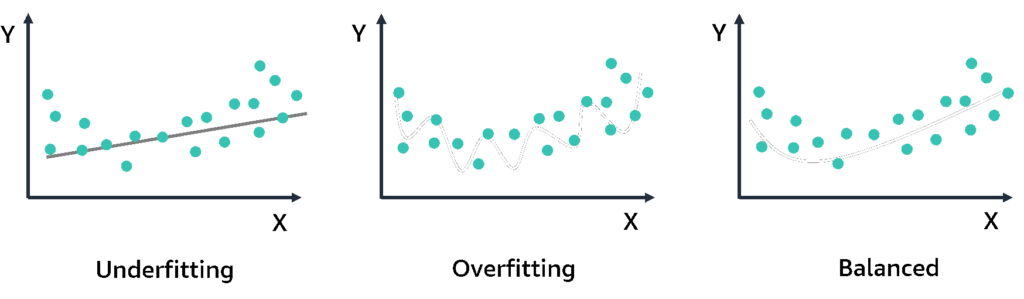

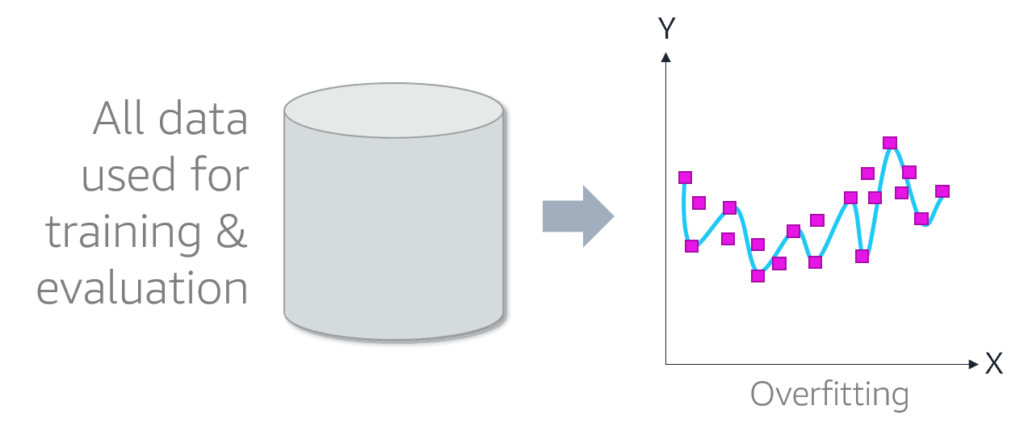

Overfitting:= the model performs well on the training dataset but poorly on evaluation data (not enough samples)

Underfitting:= the model loses information held in features

Missing Data Strategies:

- Do nothing: missing values are marginal, or the algorithm handles them

- Drop missing row/col: when only a small % is missing

- Mean/Median: numeric data only, not accurate, works at column level and ignores correlations

- Most Frequent/constant: introduces bias, ignores correlations

- Forward/Backward filling: use the preceding or the following value

- Interpolation/Extrapolation: estimate from other observations within (or beyond) the range of a discrete set of known data points

- Hot Deck Imputation: by respondent

- Model Based:

- K-NN K-Nearest Neighbor: impute mean value, then measure closest neighbors, sensitive to outliers, slow

- Regression

- Deep Learning (datawig): categorical, uses a Deep Neural Network (DNN), slow, most accurate, non-correlations

- Multivariate Imputation by Chained Equation (MICE): impute multiple values for missing data
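Several of the simpler strategies above map directly onto pandas calls. The following is a minimal sketch on a made-up DataFrame (`df1` and its columns are hypothetical); it only shows the mechanics and does not recommend one strategy over another.

```python
import numpy as np
import pandas as pd

# Hypothetical dataset with one missing value per column
df1 = pd.DataFrame({
    "age":   [25.0, np.nan, 40.0, 35.0],
    "city":  ["Austin", "Boston", None, "Boston"],
    "sales": [10.0, 12.0, np.nan, 18.0],
})

missing_per_column = df1.isnull().sum()      # count missing values per column
dropped_rows = df1.dropna()                  # drop rows containing any missing value

mean_filled = df1["age"].fillna(df1["age"].mean())        # mean imputation (numeric only)
mode_filled = df1["city"].fillna(df1["city"].mode()[0])   # most-frequent imputation
forward_filled = df1["sales"].ffill()        # forward fill: carry the previous value
interpolated = df1["sales"].interpolate()    # linear interpolation between neighbors
```

Mean and most-frequent imputation work one column at a time, which is why the notes flag them as ignoring correlations between features.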

Data Visualization

- Identify Patterns

- Find corrupt data

- Identify Outliers

- Find imbalances in the data


Multivariate stats


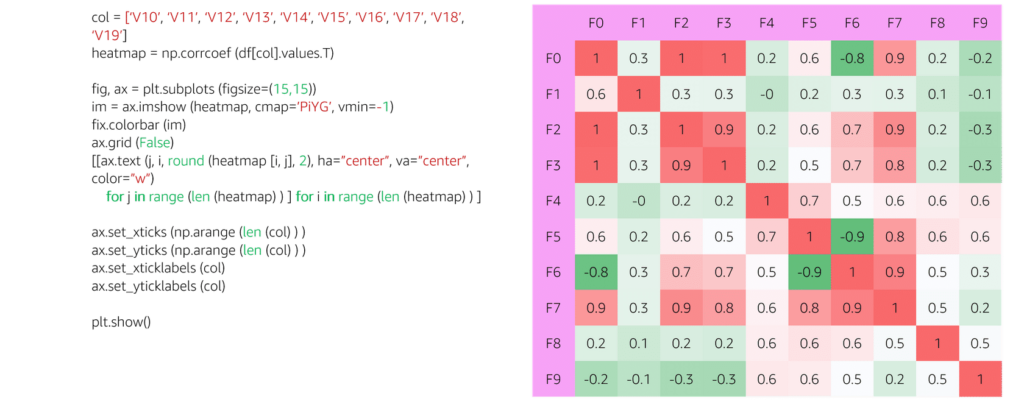

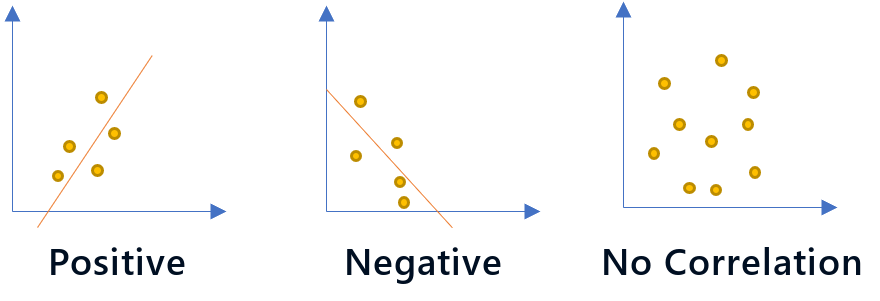

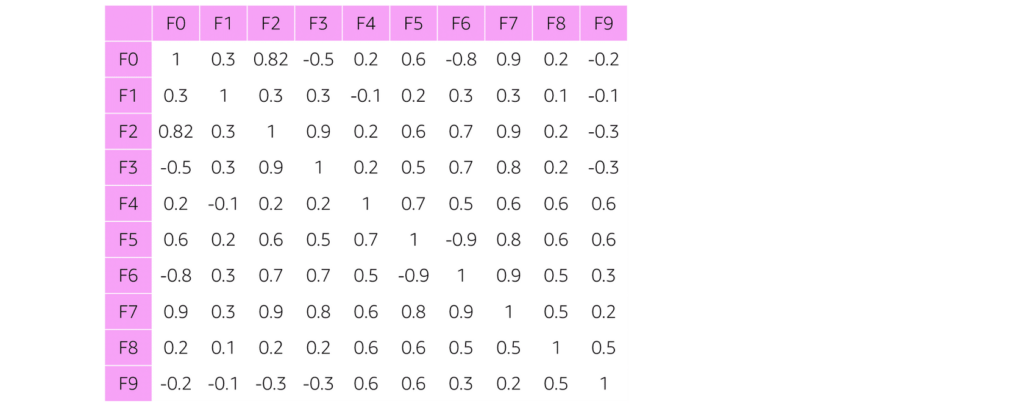

Correlations

High correlations between attributes lead to poor model performance

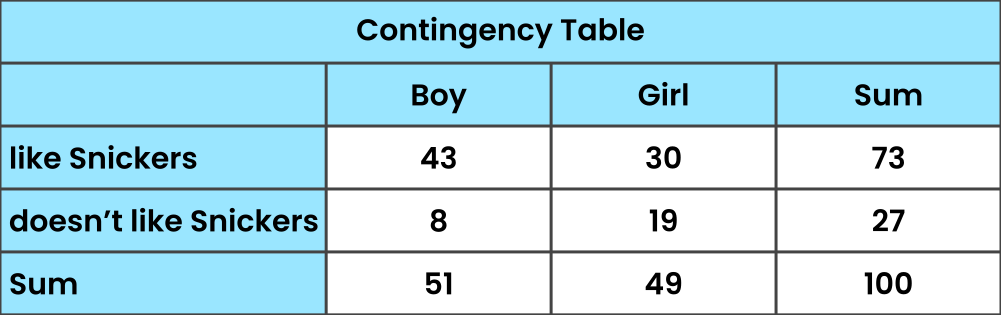

Contingency Table

Organizes information. A contingency table gives an overview of how much you’ve got of at least two different things at the same time. It can also be presented as a Venn diagram

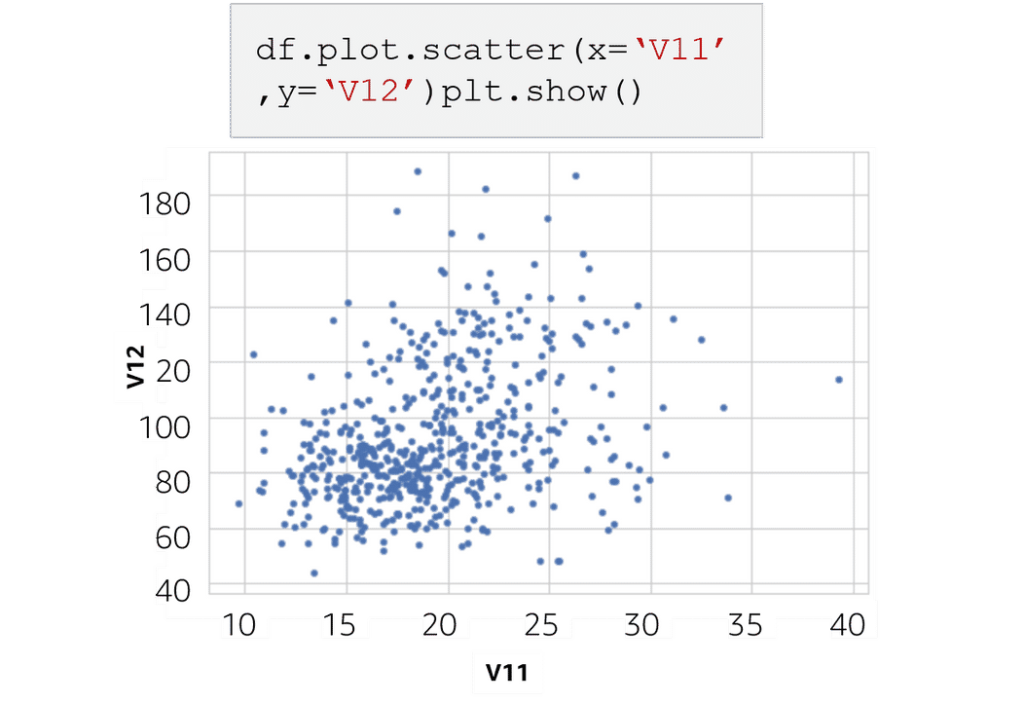

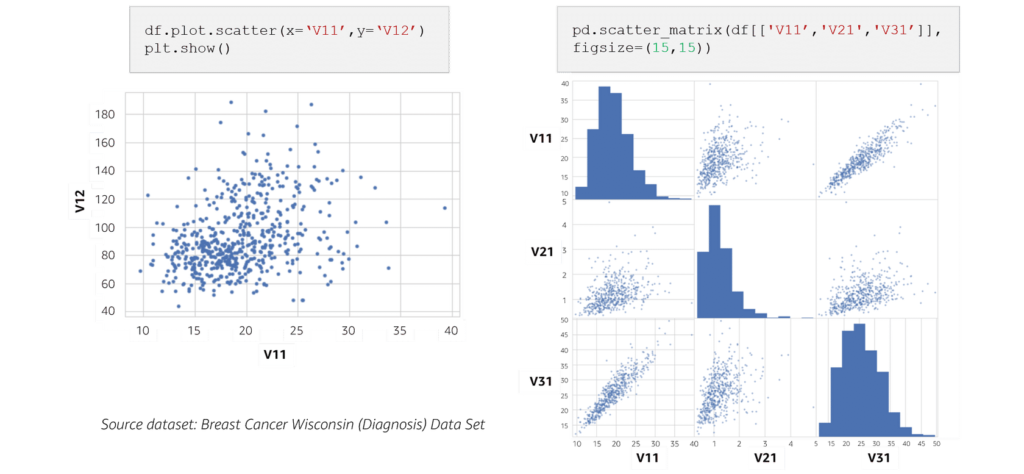

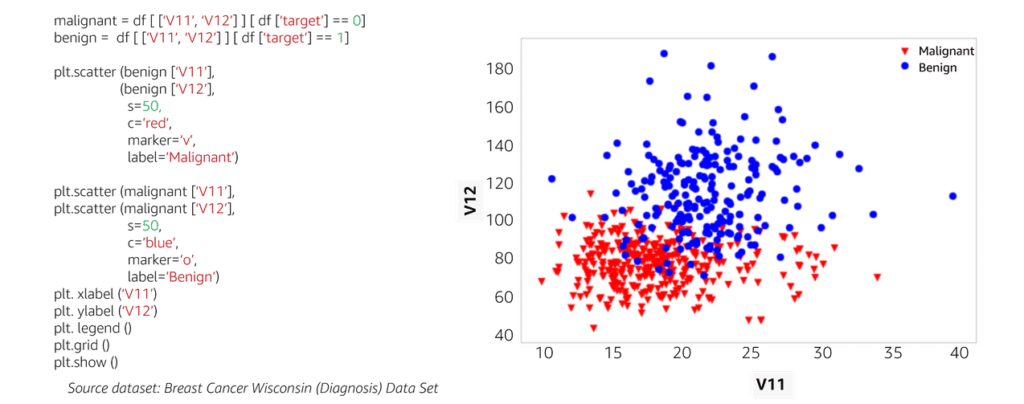

Scatterplot

A scatterplot matrix shows relationships between two or more numeric features in the dataset. A scatterplot with identification shows the relationship between attributes in different colors

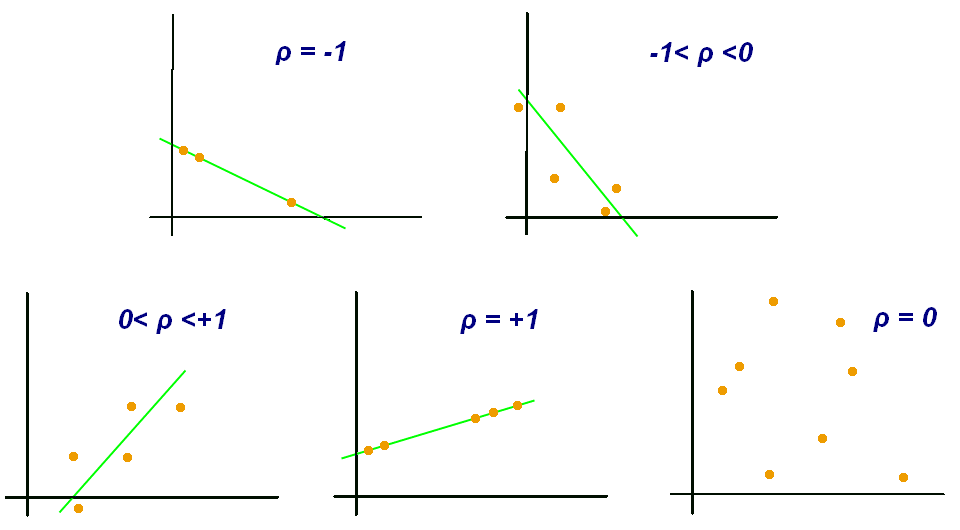

Correlation matrix

A correlation matrix quantifies linear relationships: values near 1 convey a strong positive relationship, values near -1 a strong negative relationship, and values near 0 a weak relationship. A correlation heat matrix identifies strong and weak relationships with color-coded (GREEN, RED, YELLOW) shades

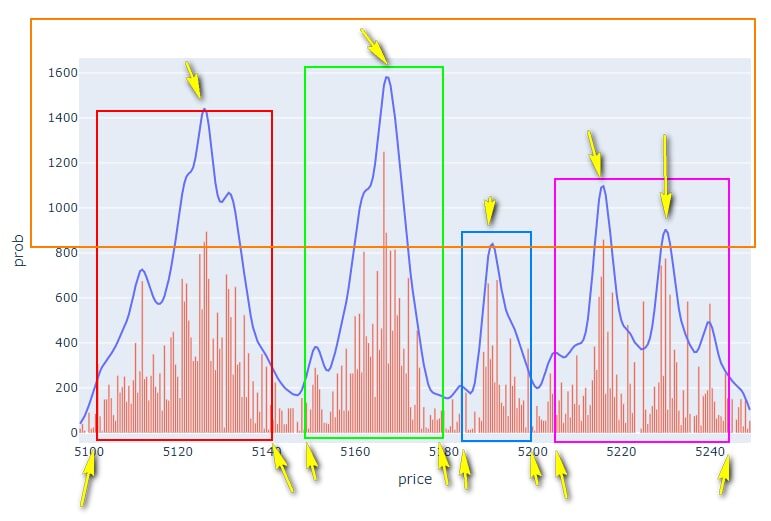

Exploratory Data Analysis:

- KPI, such as Net Promoter Score (NPS), Customer Profitability Score (CPS), Conversion Rate

- Clustering or grouping: distribution of data over defined intervals (not time)

- Histograms: one variable value

- Scatter charts: two variables related, size represent the value

Model Evaluation

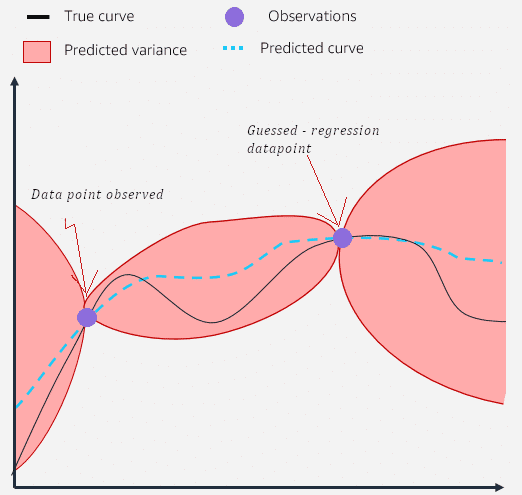

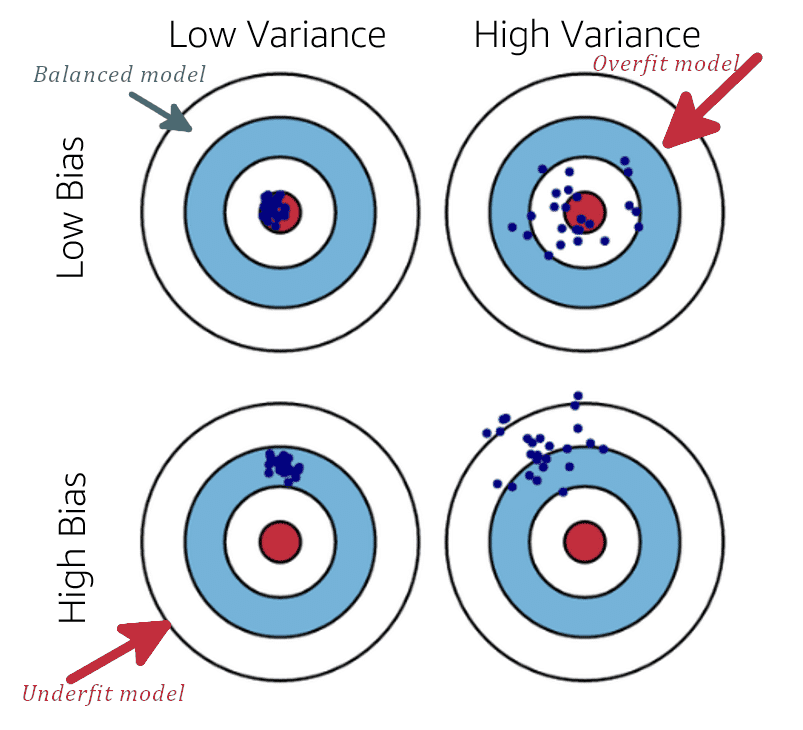

Ideally the model performance evaluated according to bias and variance:

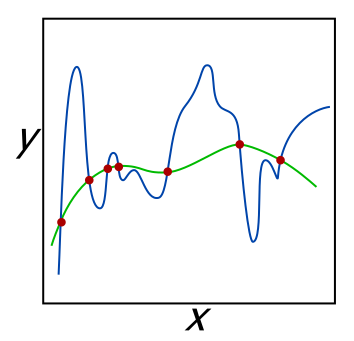

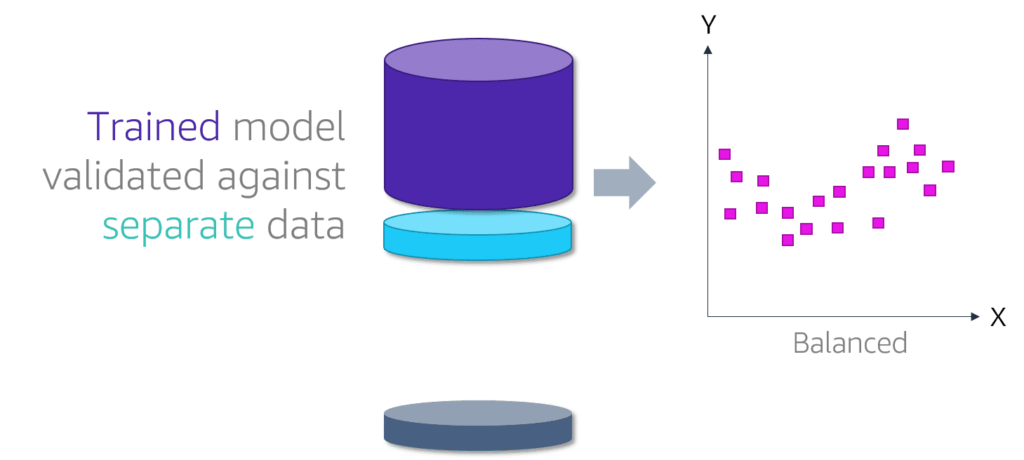

Model Fit

- Underfit: Perform poorly on Training Data (not enough data) <- add complexity, increase features, increase n-gram size, decrease regulation rules.

- Overfit: Performs well on Training data and poorly in Evaluation data, memorizes and can’t generalize <- Regularization: L1/L2 add a penalty term to the loss function, reduce features:= PCA, decrease number of numeric attributes, decrease regulation rules

- Balanced: neither overfit nor underfit

- Imbalanced: Categorial data distribution inconsistent, use techniques:

- Synthetic Minority Oversampling Technique SMOTE oversampling: creates new observations of the underrepresented class by interpolating between the minority class instances. with k-nearest minority class neighbor, data augmentation

- Random oversampling: copies of some of the minority class observations (randomly)

- Generative Adversarial Sampling GAS: Generates unique observations that more closely resemble the real minority observations without being so similar

- Edited Near Neighbor Undersampling : remove observations from the majority class

- Adaptive Synthetic Sampling (ADASYN): develop more synthetic samples for minority class samples that are more difficult to learn (close to decision boundary)

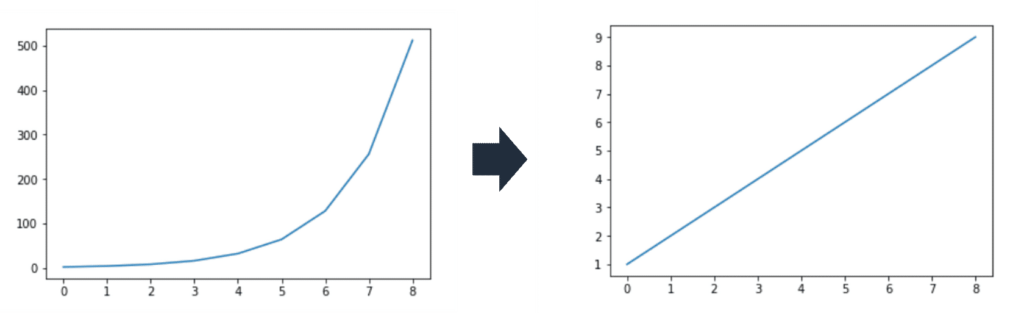

- Skewed: regression data distribution inconsistent, use log or power transform on the right-scewed or the left-scewed data
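The interpolation idea behind SMOTE, listed above, can be illustrated with a short pure-Python sketch. It is a simplified toy rather than the reference algorithm or any library's API: `smote_sketch`, its parameters, and the sample points are all hypothetical.

```python
import math
import random

def smote_sketch(minority, n_new, k=2, seed=0):
    """Create n_new synthetic points, each interpolated between a minority
    sample and one of its k nearest minority-class neighbors."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base = rng.choice(minority)
        # k nearest minority neighbors of the chosen sample (excluding itself)
        neighbors = sorted(
            (p for p in minority if p is not base),
            key=lambda p: math.dist(base, p),
        )[:k]
        neighbor = rng.choice(neighbors)
        gap = rng.random()  # random position along the segment base -> neighbor
        synthetic.append(tuple(b + gap * (n - b) for b, n in zip(base, neighbor)))
    return synthetic

# Hypothetical minority-class observations with two features each
minority_class = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.0), (2.5, 2.5)]
new_points = smote_sketch(minority_class, n_new=3)
```

Because every synthetic point lies on a segment between two real minority points, the new observations stay inside the region the minority class already occupies, unlike random oversampling, which only duplicates existing rows.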

Model Bias and Variance

Bias is how far the predicted targets are from the actual target values; variance is how much the predictions change when the model is trained on different data.

Low Bias, Low Variance:

The model is balanced: the ideal spot where the model performs well on both training and test data, and the predicted values are close to the real ones.

High Bias:

If variance is low, the model is underfitted: it is overly simple and performs well on neither training data nor test data; increase features and datasets and decrease regularization. If variance is high too, the model is unbalanced and needs to be re-evaluated.

High Variance:

If bias is low, the model is overfitted: it is overly complex and performs poorly on test data even though its training predictions are close to the real targets; reduce features and increase regularization.

Bias-variance tradeoff

Identify the complexity of the training model by measuring the Bias vs Variance and tradeoff to settle the model.

Transfer Learning

The model is trained on a large dataset, then fine-tuned to work on a small task-specific dataset. This improves performance and reduces training time. Common algorithms: ResNet and V4.

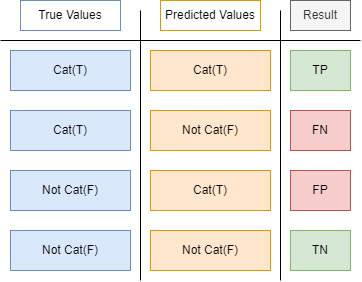

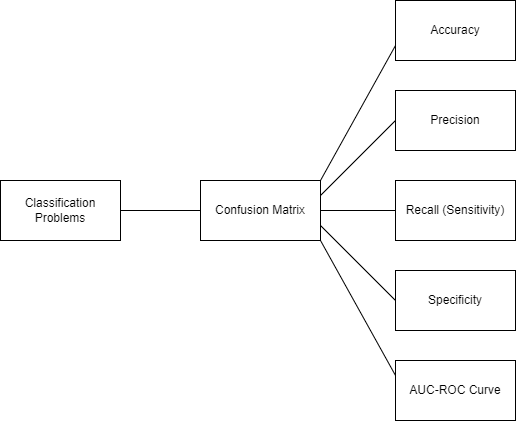

Classifications Problems

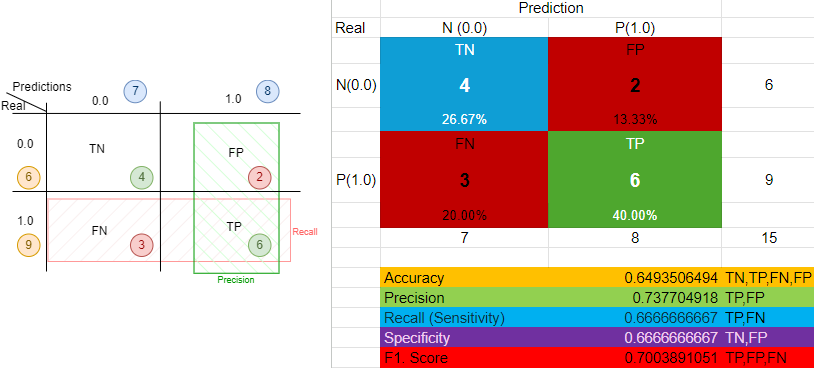

The confusion matrix

can help explain why and how a model gets something wrong.

The following metrics can be derived from the confusion matrix:

- Accuracy (score): The percentage of correct estimates, (TP + TN) / all estimates. Less effective when there are lots of TNs: a high score then mostly means the negatives were easy to detect, so it is unreliable for judging detection of TPs.

- Precision: The percentage of predicted positives that are correct, TP / (TP + FP). Best when the cost of FP is high, such as email scams, investment forecasts, or credit card approvals. Ignores the negatives.

- Recall (Sensitivity): The percentage of actual positives that are predicted correctly, TP / (TP + FN), which takes the false negatives into account. Used when the cost of FN is high, such as results of medical cancer or tumor exams.

- Specificity: The percentage of actual negatives that are predicted correctly, TN / (TN + FP). Used when the cost of FP is high; similar to precision but takes the TNs into account, such as results of elimination voters or product discontinuation.

- F1 Score: Combines Precision and Recall into one number (their harmonic mean). Used with class imbalance (unequal number of samples for each class) when you want to balance precision and recall; applies to binary/multi-class classification. 1 means all predictions are accurate.

- Accuracy: All estimates are matched (equally)

- Precision: All positive estimates are correct, none is false

- Recall: All positive estimates are correct, no false negative estimates

- Specificity: All negative estimates are correct

- F1 Score: All positive estimates are matched (with the impact of all FP & FN)
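To make the metric definitions concrete, here is a small sketch that derives each one from the four confusion-matrix counts. The function name and the counts are hypothetical; the counts were chosen to be dominated by true negatives so the weakness of accuracy is visible.

```python
def classification_metrics(tp, fp, fn, tn):
    """Derive the standard metrics from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,                      # also called sensitivity
        "specificity": tn / (tn + fp),
        "f1": 2 * precision * recall / (precision + recall),  # harmonic mean
    }

# Hypothetical imbalanced dataset: mostly true negatives
metrics = classification_metrics(tp=8, fp=2, fn=12, tn=978)
```

In this made-up case accuracy is 0.986 even though recall is only 0.4, so more than half of the real positives are missed; F1 (about 0.53) reflects that problem while accuracy hides it.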

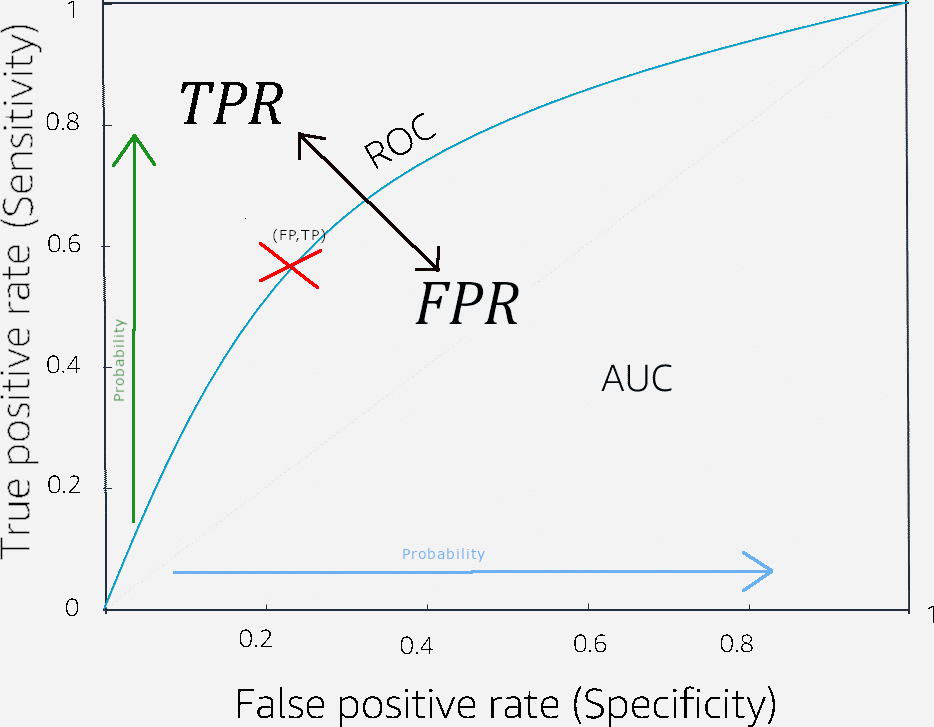

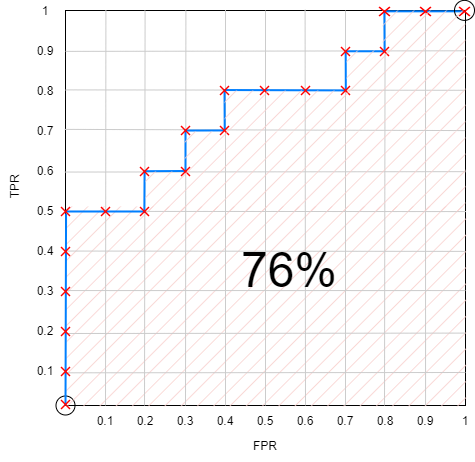

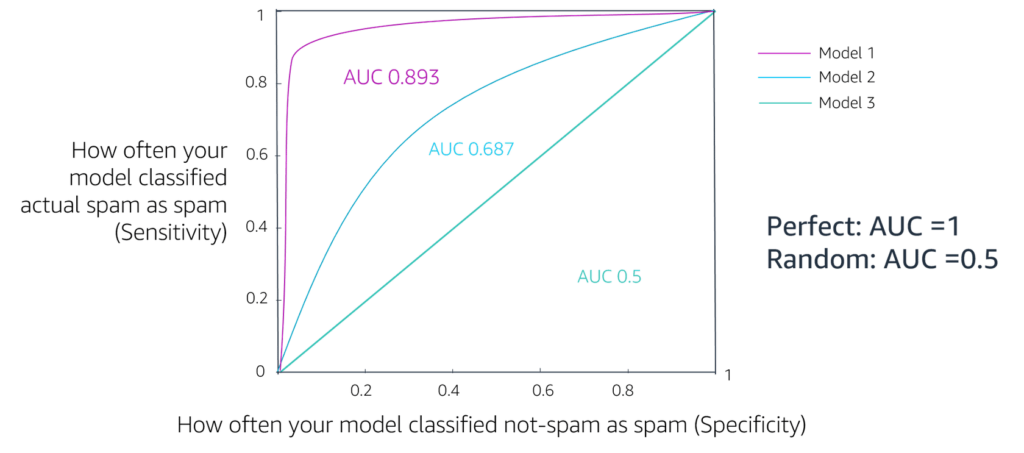

AUC-ROC Curve

AUC: Area Under Curve; a classification metric for supervised models (such as logistic regression) that expresses the degree or measure of separability. It provides an aggregate measure of performance across all possible classification thresholds. Best for data with mostly True Negatives, because it reduces their impact.

AUC is the probability that the model ranks a random positive example more highly than a random negative example.

ROC: Receiver Operating Characteristic, the probability curve.

AUC-ROC curve: shows what the curve of true positive rate vs false positive rate looks like at various thresholds. A threshold is the cut-off probability: a prediction with probability above the threshold is determined to be true, otherwise false. The threshold is chosen as the point where the curve turns toward FPR (the knee of the curve).

False Positive Rate (FPR = 1 – specificity): FP / (FP + TN), plotted on the x-axis

*Used when the dataset is imbalanced and skewed to the TN side

True Positive Rate (TPR = Recall = Sensitivity): TP / (TP + FN), plotted on the y-axis

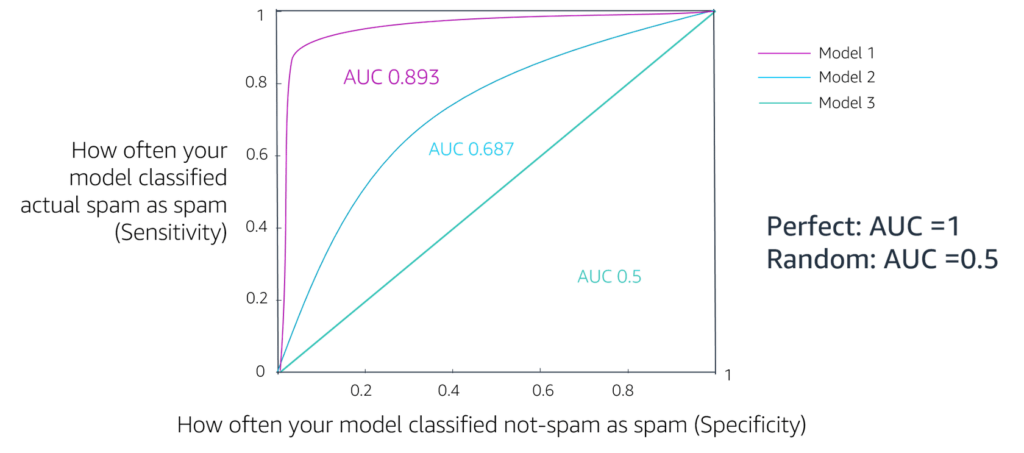

Ideally, the curve should hug the TPR (y-axis). In the emails example, emails are ranked by the classifier’s score (the % of emails classified correctly as spam vs. not spam). Based on the business case, plot the actual emails on the map and tune the model to produce the curve that meets business needs.

Model 1: AUC 0.893 <- improved model; the FPR stays low for the majority of points

Model 2: AUC 0.687 <- means the FPR is slightly below

Model 3: AUC 0.500 <- the FPR is in the middle; the model is no better than a 50/50 guess

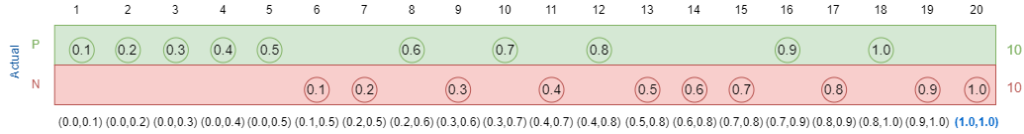

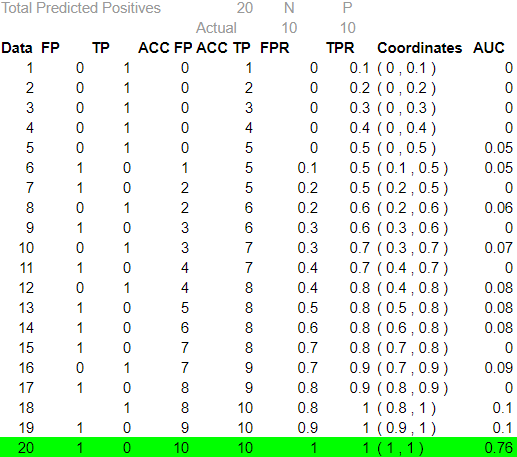

AUC Calculation steps

Step 1: Capture all the positive predictions of the model (example 20 positive predictions both TP and FP)

Step 2: Calculate TP and FP Rates using this table

- Calculate the hit and miss labels for FP and TP (0 or 1)

- Calculate the Accumulated FP and TP respectively

- Calculate the FPR and TPR ratio from Accumulated FP and TP

Step 3: Sum the area under each segment of the curve; the total is the AUC, 76% in this example.

Step 4: Find the coordinates (FPR, TPR) and plot them on the grid
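The calculation steps can be sketched as follows: rank the predictions by score, accumulate hits (TP) and misses (FP), convert the running totals to rates, and sum the area under the resulting points. This is a minimal illustration that assumes distinct scores; the function names, labels, and scores are hypothetical and are not the table from the example.

```python
def roc_points(labels, scores):
    """Return (FPR, TPR) points obtained by lowering the threshold one
    prediction at a time, highest score first. Labels are 1 or 0."""
    ranked = sorted(zip(scores, labels), reverse=True)
    total_pos = sum(labels)
    total_neg = len(labels) - total_pos
    acc_tp = acc_fp = 0
    points = [(0.0, 0.0)]
    for _, label in ranked:
        acc_tp += label        # hit: accumulated true positives
        acc_fp += 1 - label    # miss: accumulated false positives
        points.append((acc_fp / total_neg, acc_tp / total_pos))
    return points

def auc(points):
    """Trapezoidal area under the (FPR, TPR) curve."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))

labels = [1, 1, 0, 1, 0, 0]                 # hypothetical ground truth
scores = [0.9, 0.8, 0.7, 0.6, 0.4, 0.2]     # hypothetical model scores
curve = roc_points(labels, scores)
area = auc(curve)
```

Here 8 of the 9 positive/negative pairs are ranked correctly, and the computed area is 8/9, which matches the reading of AUC as the probability that a random positive is ranked above a random negative.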

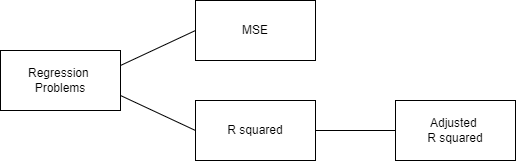

Regression Problems

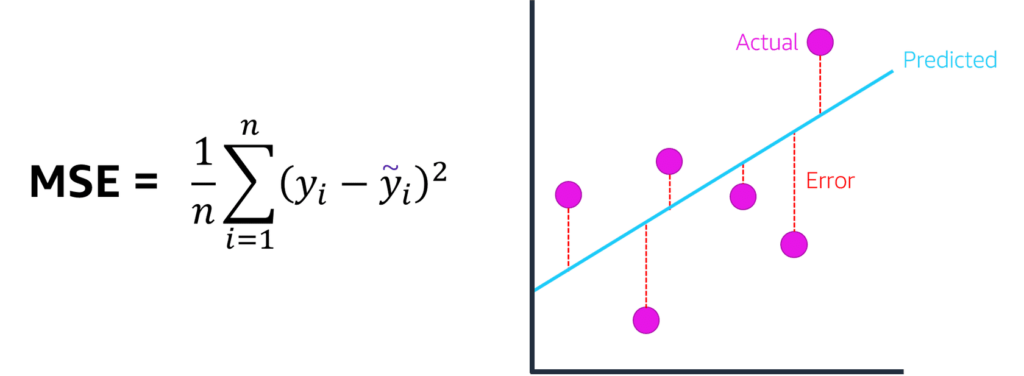

MSE Mean Square Error

Commonly used to measure Regression models, for continuous variables (datapoints incremental)

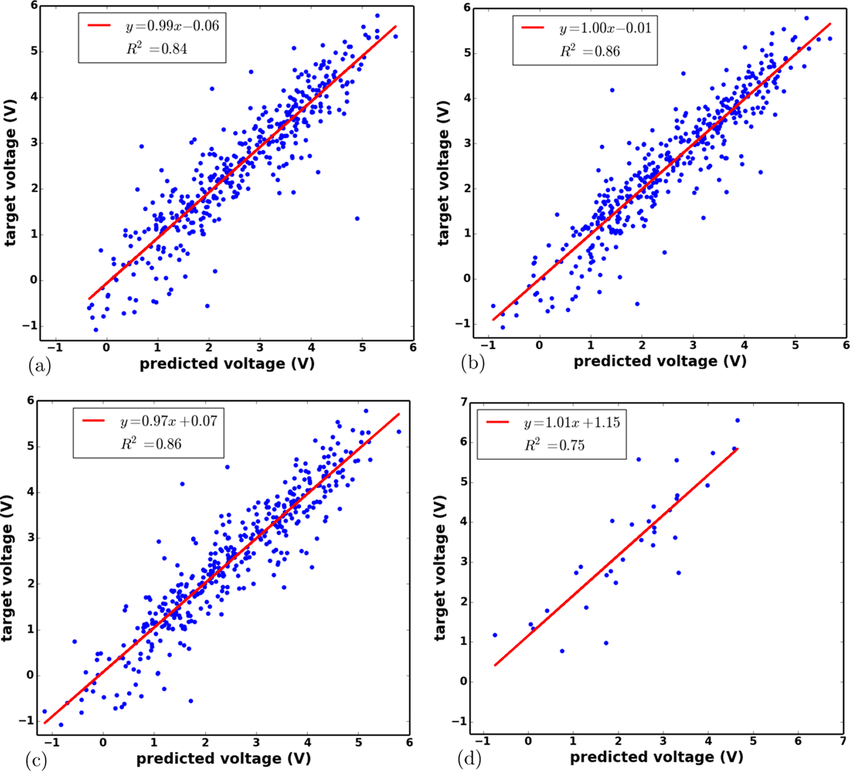

R2 (R squared)

Explains the fraction of variance accounted for by the model. Adding more variables increases R2 even when they only contribute to overfitting; used with regression on continuous variables. Use Adjusted R2 to address the problem.

SSE:= Sum of Squared Errors

Adjusted R2

The threshold for a good R squared depends on the type of business.

Where:

D: Number of data points

V: Number of variables
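A short sketch of how MSE, R2, and Adjusted R2 relate, using D and V as defined above. The adjusted formula used here, 1 - (1 - R2)(D - 1)/(D - V - 1), is the commonly cited form and is an assumption about what the notes intend; the function name and data are hypothetical.

```python
def regression_metrics(actual, predicted, num_variables):
    d = len(actual)                  # D: number of data points
    v = num_variables                # V: number of variables
    mean_actual = sum(actual) / d
    sse = sum((a - p) ** 2 for a, p in zip(actual, predicted))  # sum of squared errors
    sst = sum((a - mean_actual) ** 2 for a in actual)           # total sum of squares
    mse = sse / d
    r2 = 1 - sse / sst
    # Assumed standard form: penalizes R2 for every extra variable
    adjusted_r2 = 1 - (1 - r2) * (d - 1) / (d - v - 1)
    return mse, r2, adjusted_r2

actual = [3.0, 5.0, 7.0, 9.0, 11.0]        # hypothetical observed values
predicted = [2.5, 5.5, 7.0, 9.5, 10.5]     # hypothetical model output
mse, r2, adjusted_r2 = regression_metrics(actual, predicted, num_variables=1)
```

Adjusted R2 is never larger than R2 in this form, and the gap widens as V grows relative to D, which is what makes it useful against the "more variables, higher R2" effect.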

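Rand Index

A clustering (unsupervised) metric: measures the similarity between two clusterings by counting the pairs of points on which they agree.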

Regularizations

A set of methods for reducing overfitting in machine learning models. L1 regularization is also known as lasso regression, and L2 regularization is also known as ridge regression. L1 regularization adds the absolute value of each coefficient as a penalty term. L2 regularization adds the squared magnitude of each coefficient as a penalty term.
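The two penalty terms differ only in how each coefficient is measured. A minimal sketch, with hypothetical weights, a hypothetical base loss, and a regularization strength `lam` chosen purely for illustration:

```python
def l1_penalty(weights, lam):
    """Lasso: lam times the sum of absolute coefficient values."""
    return lam * sum(abs(w) for w in weights)

def l2_penalty(weights, lam):
    """Ridge: lam times the sum of squared coefficient values."""
    return lam * sum(w ** 2 for w in weights)

weights = [0.5, -2.0, 0.0, 3.0]     # hypothetical model coefficients
base_loss = 1.25                    # hypothetical unregularized loss

lasso_loss = base_loss + l1_penalty(weights, lam=0.1)
ridge_loss = base_loss + l2_penalty(weights, lam=0.1)
```

The squared term makes L2 punish the large coefficient (3.0) much more heavily than L1 does, while L1 applies the same per-unit cost to every coefficient.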

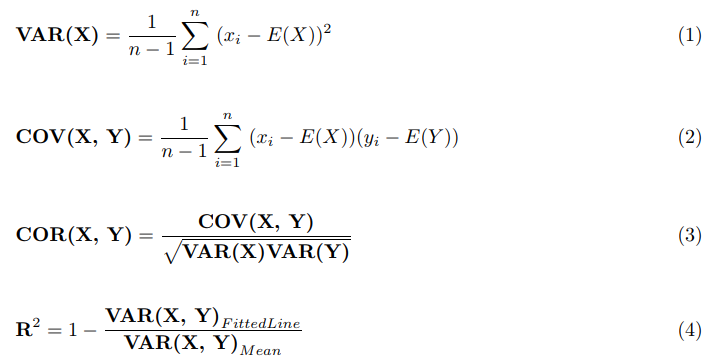

Variance

Statistical measure of how far the values of a single variable spread out from their mean

Covariance

Statistical measure to measure the linear relationships between variables; reveals how two variables change together

Correlation

Statistical measure of how closely two variables are related to each other. It uses the covariance to generate a unit-independent measurement.

E(x) is the expected value of x on the graph

Pearson correlation coefficient (PCC)

Statistical measure, measures the linear correlation between two sets of data, It is the ratio between the covariance of two variables and the product of their standard deviations; the threshold is +/- 0.5
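The ratio described above can be written out directly. This sketch uses the sample (n - 1) form of covariance, which is an assumption (the population form gives the same correlation); the helper names and the data are hypothetical.

```python
import math

def mean(values):
    return sum(values) / len(values)

def covariance(xs, ys):
    """Sample covariance: how two variables change together."""
    mx, my = mean(xs), mean(ys)
    return sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / (len(xs) - 1)

def pearson(xs, ys):
    """Covariance divided by the product of the standard deviations."""
    return covariance(xs, ys) / math.sqrt(covariance(xs, xs) * covariance(ys, ys))

hours = [1.0, 2.0, 3.0, 4.0, 5.0]          # hypothetical feature
score = [52.0, 55.0, 61.0, 64.0, 68.0]     # hypothetical target

cov = covariance(hours, score)
r = pearson(hours, score)
```

Covariance depends on the units of both variables, whereas the coefficient is always between -1 and 1, which is why the correlation is the unit-independent measure.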

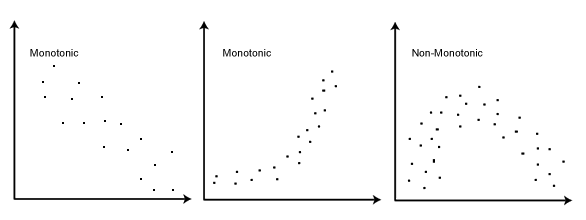

Spearman correlation coefficient (SCC)

Statistical measure, is the nonparametric version of the Pearson product-moment correlation. Spearman’s correlation coefficient, (ρ, also signified by rs) measures the strength and direction of association between two ranked variables.

Polychoric correlation coefficient (PCC)

Statistical measure, measures agreement between multiple variables for ordinal variables (sometimes called “ordered-category” data).

Ordinary Least Squares Regression (OLSR):

A generalized linear modeling technique. It is used for estimating all unknown parameters in a linear regression model from one or more independent (explanatory) variables. The goal is to minimize the sum of the squares of the differences between the observed values and the values predicted from the explanatory variables.
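For a single explanatory variable, the least-squares estimates have a closed form. The sketch below is a minimal illustration with hypothetical data and a hypothetical `fit_ols` helper; real projects would normally rely on a library implementation.

```python
def fit_ols(xs, ys):
    """Closed-form least-squares fit of y = slope * x + intercept."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    slope = (sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
             / sum((x - mean_x) ** 2 for x in xs))
    intercept = mean_y - slope * mean_x
    return slope, intercept

def residual_sum_of_squares(xs, ys, slope, intercept):
    """The quantity OLS minimizes: squared observed-minus-predicted gaps."""
    return sum((y - (slope * x + intercept)) ** 2 for x, y in zip(xs, ys))

xs = [1.0, 2.0, 3.0, 4.0]       # hypothetical explanatory variable
ys = [3.1, 4.9, 7.2, 8.8]       # hypothetical observed values

slope, intercept = fit_ols(xs, ys)
best_rss = residual_sum_of_squares(xs, ys, slope, intercept)
```

Nudging either parameter away from the fitted values can only increase the residual sum of squares, which is the sense in which the fit is "least squares".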

Data Optimization Techniques

Local Outlier Factor (LOF):

Discovers outlier data points before the dataset is applied to the algorithm

Least-Angle Regression (LARS):

Regression technique that predicts a dependent variable using one or more independent variables.

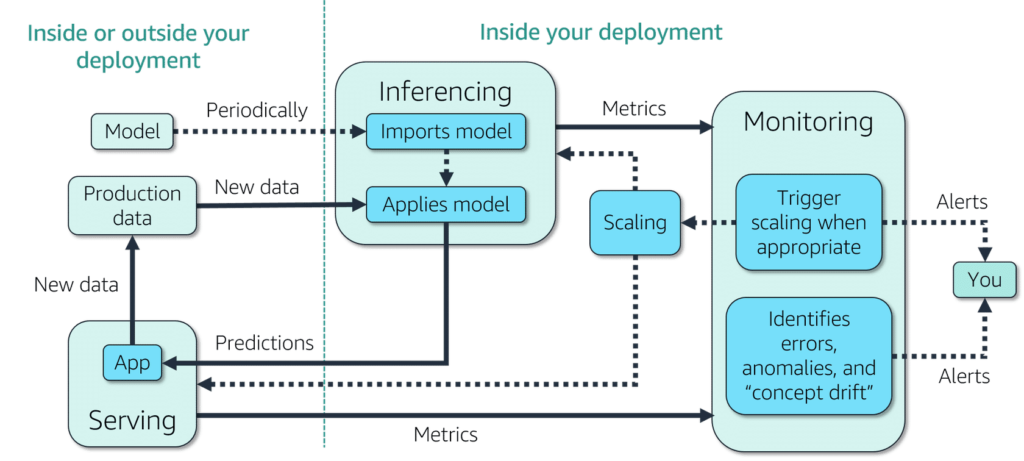

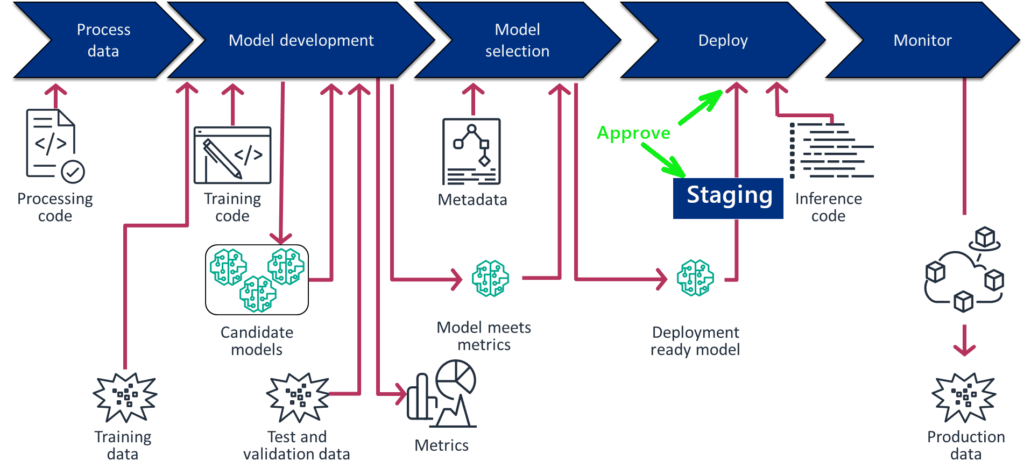

Model Deployment

The integration of the model and its resources into a production environment so that it can be used to create predictions.

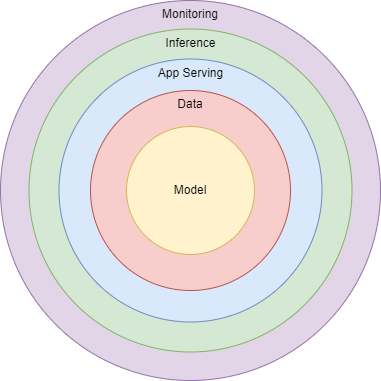

Layers:

- Model Layer: The structure of data, the algorithm, and framework

- Data Layer: Actual data(metrics) collected to train, evaluate, and test

- Serving (App) Layer: computing power to serve and custodian of the data

- Inference Layer: updates model and data to generate predictions

- Monitoring: collects metrics from App Serving layer and inference layer to alert, trigger scaling, report errors, or anomalies (concept drift)

Concepts & Components:

- Concept Drift: how the model accuracy gradually degrades over time and requires retraining

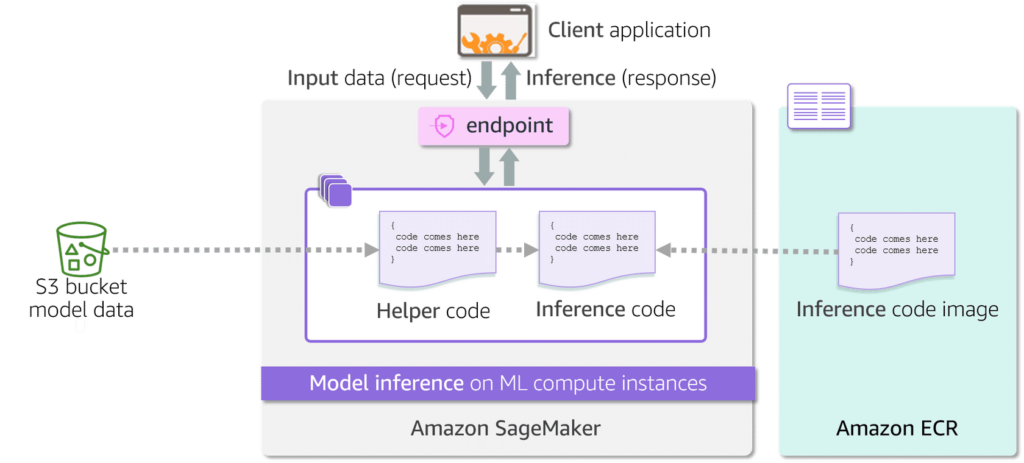

- HTTP Endpoint: The model hosting service by SageMaker

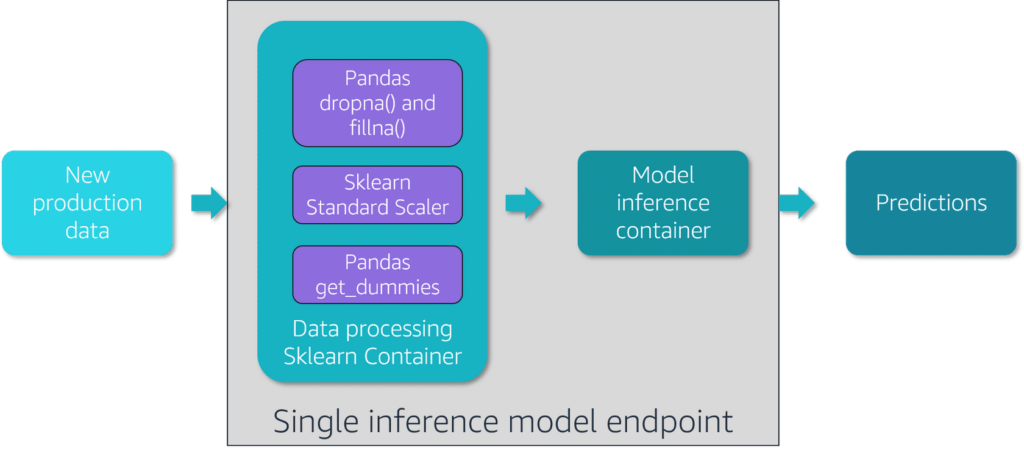

- Training Processing Jobs: Python SDK [scikit-learn SKLearnProcessor | Spark PySparkProcessor]

- Target Leakage: The predictors include data that will not be available at the time you make predictions, such as time-sensitive predictions; use R2 and AUC-ROC

Deployment and Operational Techniques

- Online Learning: training the model incrementally by inferring data observations as individual observations or mini-batches

- Incremental Learning: use base model to extend with new data

- Transfer Learning: start with off-the-shelf trained model and then apply closely aligned observations

- Out-of-core Learning: train huge loaded datasets, and continuously add more datasets

Training models best practices

- Dataset: Clean and representative

- Data Splitting: use proper technique to avoid overfitting

- Models: Experiment with multiple models

- Monitor: Constantly monitor the training models

- Evaluation: on realistic data

- Iterate and improve

AWS AI ML Stack

Amazon Rekognition

Analyzes video and images with Deep Learning for image classification, object detection, text in images, facial recognition, sentiment (Natural Language Processing (NLP)), and public safety.

Uses bounding boxes to capture objects, with algorithms such as SSD, R-CNN, Faster R-CNN, and YOLO

Uses Semantic Segmentation to decide whether each pixel belongs to an object; useful in medicine to identify cancer cells or tumor tissues.

Sources:

- Images: file or byte-coded

- Video: file or Kinesis video stream, asynchronous labeling to SNS topic or Kinesis Data Stream.

Amazon Textract

Extract intelligence from documents such as financial reports, medical records, tax forms, submission applications, insurance claims, etc. Beyond simple Optical Character Recognition (OCR)

Textract allows creation of workflows, but performs no analysis, semantics, or classification.

Use Cases:

- Document search indexes

- Natural Language Processing (NLP) cases extract words, lines, and tables

- Input PDF, JPG, multiple sizes

- Detect Document Hierarchy

- Synchronous (file or byte array) or asynchronous (start***) calls; results return as key-value pairs, or through an SNS topic (async)

- Integrates with Amazon Augmented AI (A2I)

Amazon Augmented AI (A2I)

Get a secondary human review for low-confidence predictions or for auditing a model's performance. Define a work team, use UI templates (worker task templates) for instructions, and finish with inference by a human. The work team can be [ public:= Mechanical Turk | private:= staff | 3rd party external vendor ]

Use Cases:

- Integrates with Amazon Rekognition, Textract

- Custom models

Amazon Transcribe

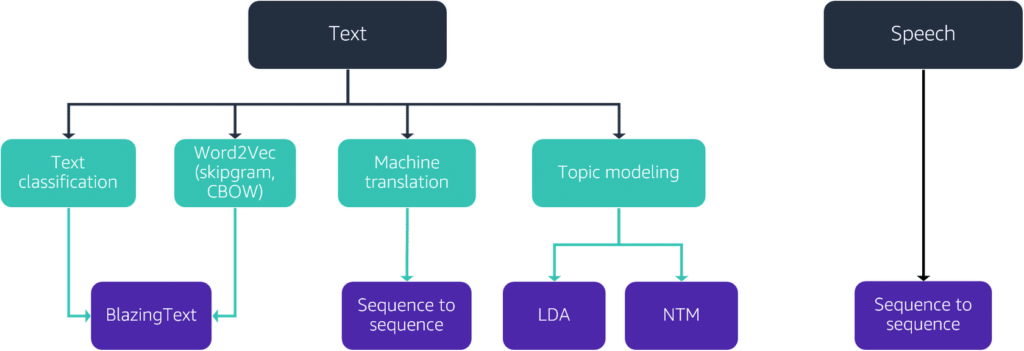

Convert human speech waveforms into text (Automatic Speech Recognition (ASR)), uses neural networks models seq2seq

Use Cases:

- Stream [HTTP/2 | WebSockets] and Batch mode [S3]

- Multi language support, Mixed language conversations (LanguageOptions), Auto detect

- Job Queuing built-in

- Custom vocabulary and filtering

- Automatic content redaction of Personally Identifiable Information (PII)

- Speaker Identification

- Amazon Transcribe Medical is medical domain specific ASR

Amazon Translate

Translates text from one language to another.

Use Cases:

- Sync and Async(Batch processing) APIs

- Built-in training models are not customizable, but Custom Terminologies are allowed (selected languages)

Amazon Polly

Converts text to speech (TTS) from plain text or Speech Synthesis Markup Language (SSML) by stringing basic speech units (phonemes) into a natural-sounding voice (vocoder). Its Deep Learning models, neural TTS (NTTS), use sequence-to-sequence. The SSML <prosody> tag defines how to render voices using pitch, volume, and rate of speech.

Use Cases:

- Chatbots voicing

- Automated interactive Answering machines

Amazon Lex

Conversational interactive chat interface with language support. It converts an utterance (what the user actually says) into the intent (what the user wants, the equivalent command), configurable with slots and slot types, with a fallback intent to retrain.

Use Cases:

- Chatbots

- Customer support

- Online Troubleshooting

- Customer event management (booking appointments)

- AIOps

Amazon Kendra

Searches and mines unstructured data, accepting search queries and returning intelligent, factual answers. Uses Deep Learning algorithms and works by indexing and organizing documents from external data sources such as MS SharePoint, S3, Confluence, Salesforce, and ServiceNow

Use Cases:

- Chatbots

- Customer support

- Online Troubleshooting

- Customer event management (booking appointments)

- AIOps

Amazon Personalize

Personalizes the user experience with recommendations that rely on [ User Data, Item Data, Interaction Data ] to build insights. Methods include clustering, content-based filtering, and, more recently, collaborative filtering by matrix factorization, which decomposes a very large sparse matrix into smaller matrices to extract hidden (latent) vectors without considering the sequence of a user's purchase history.

Recurrent Neural Networks (RNN) are trained on the customer purchase history (Amazon's extension is HRNN-Metadata), and Multi-Armed Bandits (MABs) use the exploration-exploitation trade-off.

Use Cases Solutions:

- User Personalization Recipes [ user, popularity, HRNN-metadata ]

- Ranking-Based Recipes

- Related Items Recipes

Amazon Forecast

Provides forecasts based on historic data, using statistical and Deep Learning algorithms such as:

- AutoRegressive Integrated Moving Average (ARIMA): based on time series differencing, suited to small datasets (fewer than 100 time series)

- Prophet: fits time series data by detecting trends at different time intervals; robust to data irregularities and missing data (seasonal data). Originally from Facebook; NeuralProphet adds a neural network.

- Amazon DeepAR[+]: uses long short-term memory (LSTM) networks with probabilistic sampling techniques, splitting the time series into windows (context length) to predict the forecast horizon; forward-looking.

- Exponential smoothing (ETS): statistical algorithm that takes a weighted average of prior observations with exponentially decreasing weights

- Convolutional neural network quantile regression (CNN-QR): uses causal convolutional networks; doesn't require future data for the forecast horizon.

- Nonparametric time series (NPTS): used for seasonal, sparse, or bursty data with many intermittent values

Use Cases:

- Allows addition of weather data

- Random data splitting is not suitable for time series; use backtesting on historic data (Ground Truth)

- Measured by RMSE (which amplifies outliers), Weighted Absolute Percentage Error (WAPE), Mean Absolute Percentage Error (MAPE), or median forecasting

- Quantile-based probabilistic forecasts, ex. P10:= 10% of real values are expected to be less than the prediction; when overstocking is preferred over running out, use a higher P.

- Weighted Quantile Loss (wQL) penalizes under-predicting and over-predicting differently at each quantile, which shows whether the model over- or under-predicts
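The asymmetric penalty behind quantile forecasts can be sketched in a few lines. This is a minimal illustration of the commonly used pinball (quantile) loss and its weighted form, not the service's internal code; the function names and sample numbers are assumptions made for the example.

```python
def quantile_loss(actuals, forecasts, tau):
    """Pinball loss summed over all points for quantile tau (0 < tau < 1)."""
    total = 0.0
    for y, q in zip(actuals, forecasts):
        if y >= q:
            total += tau * (y - q)        # forecast too low (under-prediction)
        else:
            total += (1 - tau) * (q - y)  # forecast too high (over-prediction)
    return total


def weighted_quantile_loss(actuals, forecasts, tau):
    """wQL: twice the pinball loss, normalized by the sum of absolute actuals."""
    denom = sum(abs(y) for y in actuals)
    return 2.0 * quantile_loss(actuals, forecasts, tau) / denom


actuals = [10, 20, 30]
forecasts = [14, 18, 30]  # hypothetical forecast: one over, one under, one exact
p90 = weighted_quantile_loss(actuals, forecasts, tau=0.9)
p10 = weighted_quantile_loss(actuals, forecasts, tau=0.1)
```

At a high quantile such as P90 the under-prediction dominates the loss, while at P10 the over-prediction dominates, which is why the same forecast scores differently at each quantile.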

Amazon Comprehend

Extracts insights from text documents: Entities, Key phrases, PII, Language, Sentiment [ Positive | Negative | Mixed | Neutral ], and Syntax. It can also obfuscate PII and classify documents with labels.

Use Cases:

- Train custom models

- Custom document Classification: labeling

- Custom Entity detection: new entities

- Document topic modeling (LDA) : frequent words form topics

- Character encoding UTF-8, < 5KB, 25 docs/s (Batch 250 docs/s, < 1MB, total size < 5 GB)

Amazon CodeGuru

Provides intelligent code recommendations.

Use Cases:

- Reviewer:

- Detects potential code security vulnerabilities and offers suggestions in accordance with AWS best practices

- Amazon CodeGuru Security is a static application security testing (SAST)

- Code source: [AWS CodeCommit, S3, GitHub, BitBucket]

- Integrates with Application CI/CD

- Profiler: Profile code on AWS Lambda

Amazon Bedrock

Offers a choice of high-performing foundation models (FMs), uses techniques such as fine-tuning and Retrieval Augmented Generation (RAG), and builds agents that execute tasks using your enterprise systems and data sources. Amazon Bedrock Studio is a new SSO-enabled web interface that provides the easiest way for developers across an organization to experiment with large language models (LLMs) and other foundation models (FMs), collaborate on projects, and iterate on generative AI applications.

Use Cases:

- Rapid Generative AI application development with Bedrock Studio

- Text Generation: Create new pieces of original content, such as blog posts, social media posts, and webpage copy.

- Virtual Assistance: Build assistants that understand user requests, automatically break down tasks, engage in dialogue to collect information, and take actions to fulfill the request

- Text/Image Search: Search and synthesize relevant information to answer questions and provide recommendations from a large corpus of text and image data.

- Text Summarization: Get concise summaries of long documents such as articles, reports, research papers, technical documentation, and even books to quickly and effectively extract important information.

- Image Generation: Quickly create realistic and visually appealing images for ad campaigns, websites, presentations, and more.

Amazon Q

Amazon Q generates code, tests, debugs, and has multistep planning and reasoning capabilities that can transform and implement new code generated from developer requests. Amazon Q also makes it easier for employees to get answers to questions across business data—such as company policies, product information, business results, code base, employees, and many other topics—by connecting to enterprise data repositories to summarize the data logically, analyze trends, and engage in dialogue about the data.

Use Cases:

- Amazon Q Business: Answer questions, provide summaries, generate content, and securely complete tasks based on data and information in your enterprise systems.

- Amazon Q Developer: Assists with coding, testing, and upgrading applications, as well as diagnosing errors, performing security scanning and fixes, and optimizing AWS resources.

- Amazon QuickSight: Analytics dashboards

- Amazon Connect

- AWS SupplyChain

- Elasticsearch

- Kibana

- Amazon Fraud Detector: model type ONLINE_FRAUD_INSIGHTS

Model Training and Tuning

An iterative process that can be repeated many times throughout this workflow: perform additional feature engineering and tune the model’s hyperparameters.

ML Frameworks

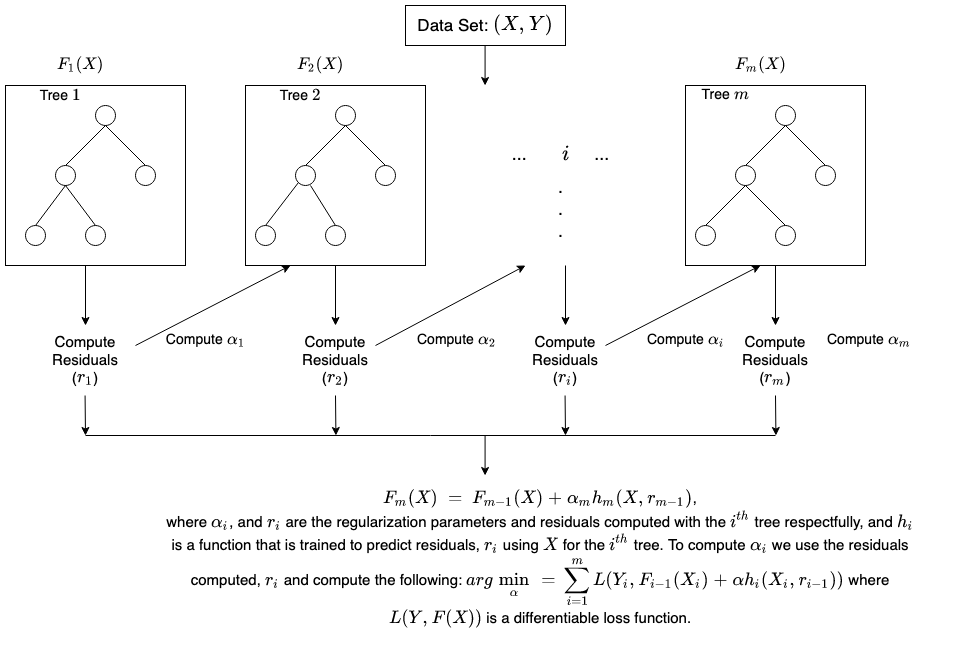

XGBoost:

eXtreme Gradient Boosting uses parallel tree boosting (also known as GBDT, GBM). The weak learners are regression trees, and each regression tree maps an input data point to one of its leaves that contains a continuous score. XGBoost minimizes a regularized (L1 and L2) objective function that combines a convex loss function (based on the difference between the predicted and target outputs) and a penalty term for model complexity (in other words, the regression tree functions). The training proceeds iteratively, adding new trees that predict the residuals or errors of prior trees that are then combined with previous trees to make the final prediction. It’s called gradient boosting because it uses a gradient descent algorithm to minimize the loss when adding new models.

ML Algorithms

Algorithms

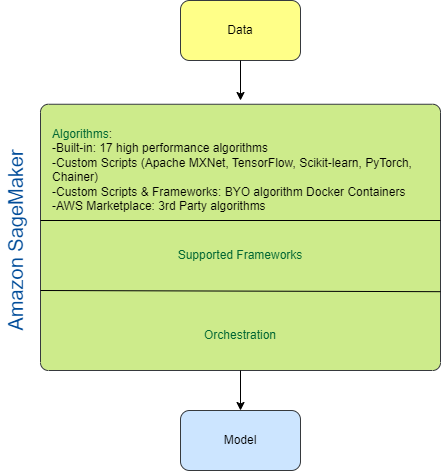

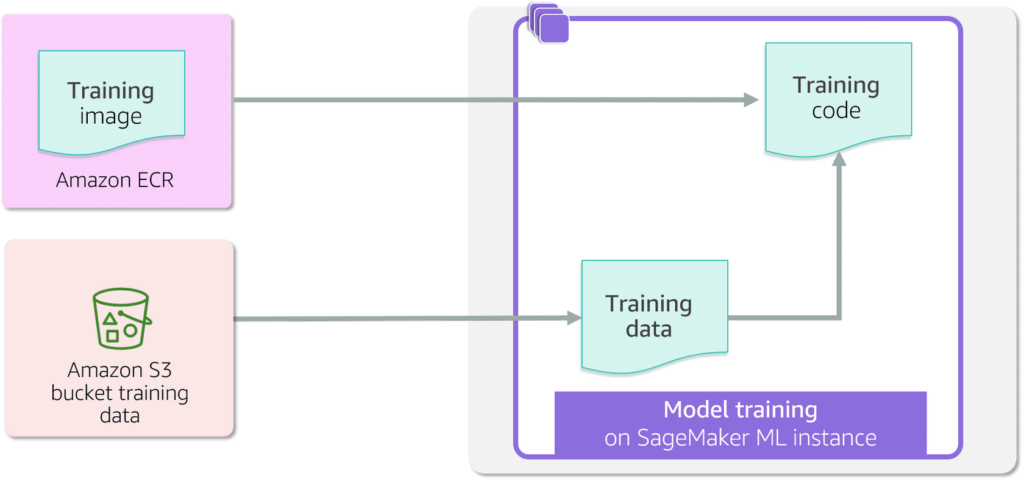

Use the algorithm suitable to the ML problem. Amazon SageMaker built-in algorithms are prebuilt implementations packaged in Docker containers and are highly customizable through hyperparameters. It is also possible to develop a custom algorithm and use the pre-built Docker images of the frameworks.

CatBoost:

open-source implementation of the Gradient Boosting Decision Tree (GBDT) algorithm. GBDT is a supervised learning algorithm that attempts to accurately predict a target variable by combining an ensemble of estimates from a set of simpler and weaker models.

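Handles missing values natively: by default, a missing numeric value is treated as smaller than every other value of that feature (nan_mode Min).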

LightGBM:

GBDT is a supervised learning algorithm that attempts to accurately predict a target variable by combining an ensemble of estimates from a set of simpler and weaker models. LightGBM uses additional techniques to significantly improve the efficiency and scalability of conventional GBDT.

Linear Learner:

Linear models are supervised learning algorithms used for solving either classification or regression problems. For input, you give the model labeled examples (x, y). x is a high-dimensional vector and y is a numeric label. For binary classification problems, the label must be either 0 or 1. For multiclass classification problems, the labels must be from 0 to num_classes – 1. For regression problems, y is a real number. The algorithm learns a linear function, or, for classification problems, a linear threshold function, and maps a vector x to an approximation of the label y.

Linear regression:

Predicts the value of a variable based on a single other variable; numeric value regression.

Multivariate regression:

Similar to linear regression, but predicts the value of a variable based on multiple variables; numeric value regression.

Logistic Regression:

Predicts the probability of an outcome, event, or observation; used for binary classification.

KNN:

The k-nearest neighbors (KNN) algorithm is a non-parametric, supervised learning classifier, which uses proximity to make classifications or predictions about the grouping of an individual data point. Two dimension reduction methods are available: random projection and the fast Johnson-Lindenstrauss transform. It is also used to find item similarities for recommendation models.

XGBoost – eXtreme Gradient Boosting:

Implementation of the gradient boosted trees algorithm. Gradient boosting is a supervised learning algorithm that tries to accurately predict a target variable by combining multiple estimates from a set of simpler models. Accepts inference requests in text/csv, text/libsvm, and recordio-protobuf formats.

Common XGBoost Hyperparameters:

num_class: The number of classes.

num_round: The number of rounds to run the training.

alpha: L1 regularization term on weights. Increasing this value makes models more conservative.

gamma: Minimum loss reduction required to make a further partition on a leaf node of the tree. The larger, the more conservative the algorithm is.

eta: Step size shrinkage used in updates to prevent overfitting. After each boosting step, you can directly get the weights of new features. The eta parameter actually shrinks the feature weights to make the boosting process more conservative.

base_score: The initial prediction score of all instances, global bias.

subsample: Subsample ratio of the training instance. Setting it to 0.5 means that XGBoost randomly collects half of the data instances to grow trees. This prevents overfitting.
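To make the role of num_round, eta, and base_score concrete, here is a deliberately simplified sketch of boosting with one-split regression trees (stumps) on a single feature. It is not XGBoost's implementation: it uses plain squared-error residuals with no regularization, and the helper names `fit_stump` and `boost` are hypothetical. It assumes the feature has at least two distinct values.

```python
def fit_stump(xs, residuals):
    """Pick the threshold on a 1-D feature that minimizes squared error."""
    best = None
    for t in sorted(set(xs))[:-1]:  # skip the max so the right side is never empty
        left = [r for x, r in zip(xs, residuals) if x <= t]
        right = [r for x, r in zip(xs, residuals) if x > t]
        lv, rv = sum(left) / len(left), sum(right) / len(right)
        err = sum((r - lv) ** 2 for r in left) + sum((r - rv) ** 2 for r in right)
        if best is None or err < best[0]:
            best = (err, t, lv, rv)
    _, t, lv, rv = best
    return lambda x: lv if x <= t else rv


def boost(xs, ys, num_round=50, eta=0.3):
    """Each round fits a stump to the current residuals, shrunk by eta."""
    base_score = sum(ys) / len(ys)  # initial prediction for every instance
    trees = []
    preds = [base_score] * len(ys)
    for _ in range(num_round):
        residuals = [y - p for y, p in zip(ys, preds)]
        tree = fit_stump(xs, residuals)
        trees.append(tree)
        preds = [p + eta * tree(x) for p, x in zip(preds, xs)]
    return lambda x: base_score + eta * sum(tree(x) for tree in trees)


model = boost([1, 2, 3, 4, 5, 6], [1, 1, 1, 5, 5, 5])
```

A smaller eta makes each tree contribute less, so more rounds are needed to reach the same fit, which is the conservative behaviour the hyperparameter descriptions refer to.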

Naïve Bayes:

Uses principles of probability (Bayes' theorem) to perform classification tasks, commonly text categorization problems. Parameters are estimated with the maximum likelihood method.

Decision Tree:

Follows a tree-like model of decisions and their possible consequences

Random Forest:

Combines the output of multiple decision trees to reach a single result

Factorization Machines:

The Factorization Machines algorithm is a general-purpose supervised learning algorithm that you can use for both classification and regression tasks. Used to capture interactions between features within high dimensional sparse datasets. For example, in a click prediction system, the Factorization Machines model can capture click rate patterns observed when ads from a certain ad-category are placed on pages from a certain page-category. Factorization machines are a good choice for tasks dealing with high dimensional sparse datasets, such as click prediction and item recommendation.

TabTransformer:

A novel deep tabular data modeling architecture for supervised learning. The TabTransformer architecture is built on self-attention-based Transformers. The Transformer layers transform the embeddings of categorical features into robust contextual embeddings to achieve higher prediction accuracy. The contextual embeddings learned from TabTransformer are highly robust against both missing and noisy data features, and provide better interpretability.

Principal Component Analysis (PCA):

Dimension reduction algorithm, The data is linearly transformed onto a new coordinate system such that the directions (principal components) capturing the largest variation in the data can be easily identified.

K-means clustering:

Partitioning a dataset into a pre-defined number of clusters. useful for tabular data, attempts to find discrete groupings within data, where members of a group are as similar as possible to one another and as different as possible from members of other groups

Linear Discriminant Analysis (LDA):

Finds a linear combination of features that characterizes or separates two or more classes of objects or events. Also known as normal discriminant analysis (NDA) or discriminant function analysis, it is a generalization of Fisher’s linear discriminant.

Latent Dirichlet Allocation (LDA):

Similar to the clustering algorithm K-means, groups words and documents into a predefined number of clusters (i.e. topics). These topics can then be used to organize and search through documents.

BlazingText:

provides highly optimized implementations of the Word2vec and text classification algorithms. The Word2vec algorithm is useful for many downstream natural language processing (NLP) tasks, such as sentiment analysis, named entity recognition, machine translation, etc. Text classification is an important task for applications that perform web searches, information retrieval, ranking, and document classification.

Sequence to Sequence:

Seq2seq is a family of machine learning approaches used for natural language processing. Applications include language translation, image captioning, conversational models, and text summarization. Seq2seq uses sequence transformation: it turns one sequence into another sequence.

Neural Topic Model (NTM):

Organize a corpus of documents into topics that contain word groupings based on their statistical distribution.

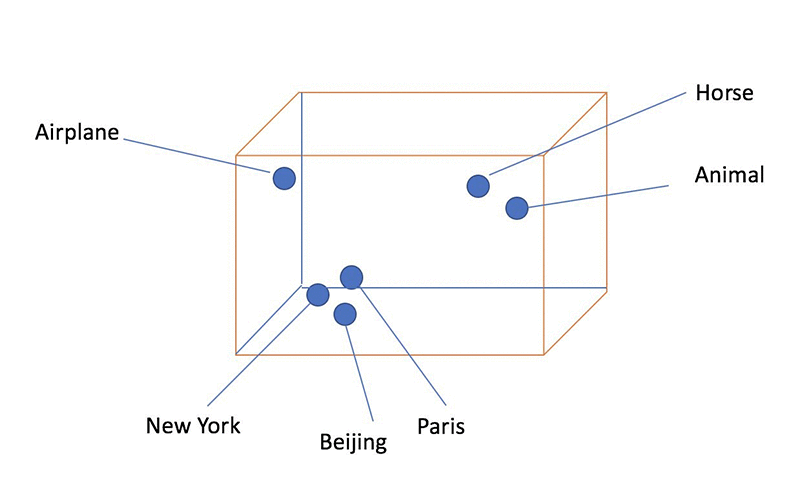

Object2Vec:

A neural embedding algorithm that generalizes Word2Vec. It learns low-dimensional dense embeddings of high-dimensional objects that preserve the semantics of the relationship between pairs of objects; the embeddings can then be used to compute nearest neighbors of objects.

Text Classification – TensorFlow:

Supports transfer learning with many pretrained models from the TensorFlow Hub. Use transfer learning to fine-tune one of the available pretrained models on your own dataset, even if a large amount of text data is not available. The text classification algorithm takes a text string as input and outputs a probability for each of the class labels. Training datasets must be in CSV format.

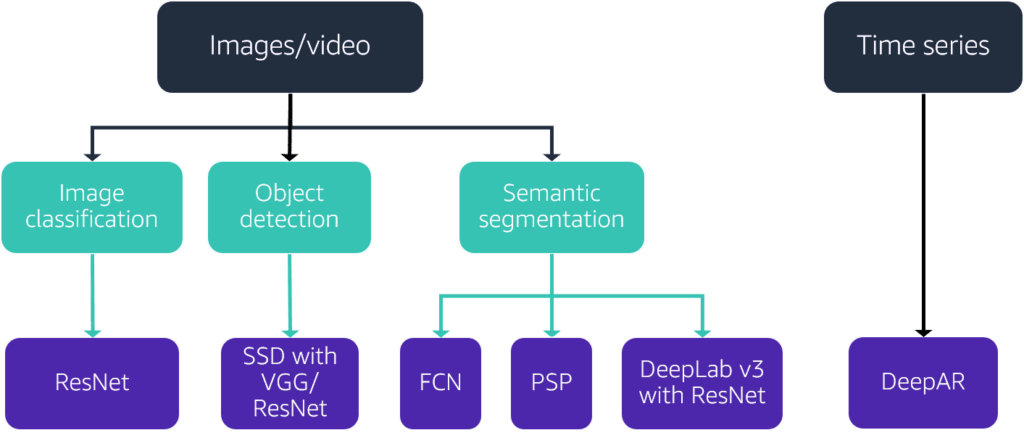

Residual Neural Network (ResNet):

The weight layers learn residual functions with reference to the layer inputs. used for Transfer Learning.

Single Shot MultiBox Detector (SSD with VGG):

A single-stage object detection method that discretizes the output space of bounding boxes into a set of default boxes over different aspect ratios and scales per feature map location.

Fully Convolutional Networks (FCN):

They employ solely locally connected layers, such as convolution, pooling, and upsampling. Avoiding the use of dense layers means fewer parameters (making the networks faster to train). It also means an FCN can work for variable image sizes, given that all connections are local.

Fully Convolutional Networks:

Predict PS propensities based on the integration of residue-level and structure-level features.

DeepAR:

Forecasts scalar (one-dimensional) time series using recurrent neural networks (RNN). Classical forecasting methods, such as autoregressive integrated moving average (ARIMA) or exponential smoothing (ETS), fit a single model to each individual time series and then use that model to extrapolate the time series into the future. DeepAR instead trains a single model jointly over all of the related time series.

Semantic Segmentation:

A deep learning algorithm that associates a label or category with every pixel in an image.

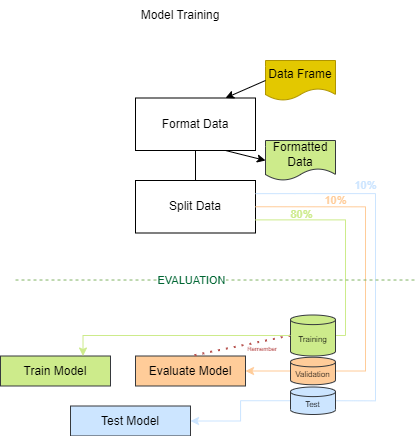

Formatting Data

- CSV formatting: labels on the left (first column), no headers

- RecordIO format: protobuf

Formatting Python -

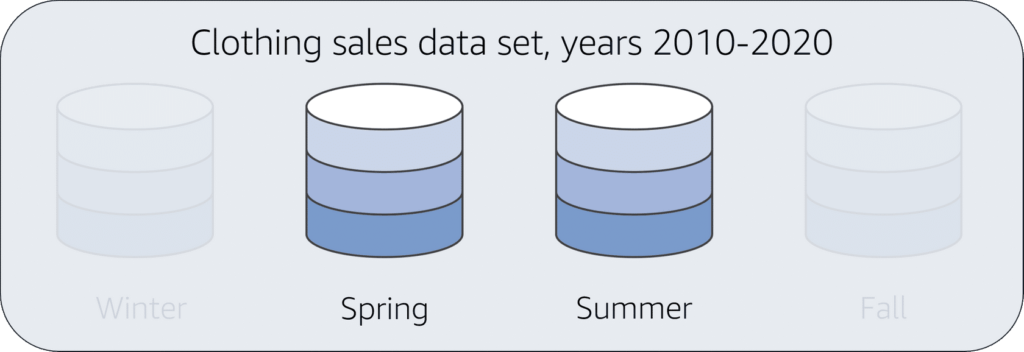

Data Splitting

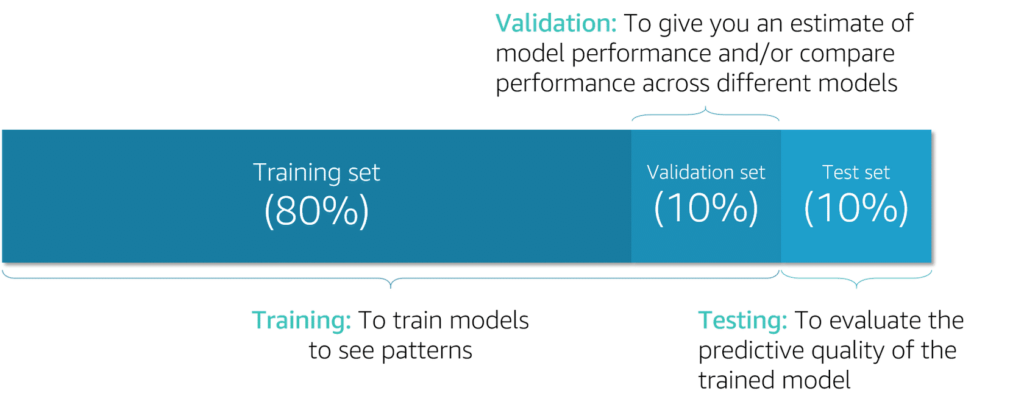

To generalize a trained model, split the data into (1) Training data, used to fit the model, (2) Evaluation data, used to validate the model during training, and (3) Testing data, used for the final check on unseen data. Splitting helps avoid overfitting (the model learns the training data too well). To avoid bias, randomize the data before splitting.

Testing and Validation Techniques

Simple Hold-out validation:

Split data: Training 80% (Features + Labels), Evaluation 10%, Testing 10% (Features + Predicted Labels)

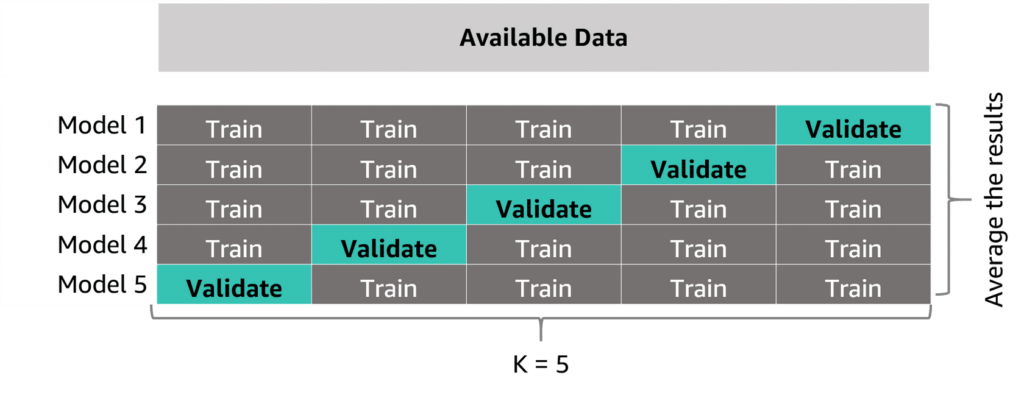

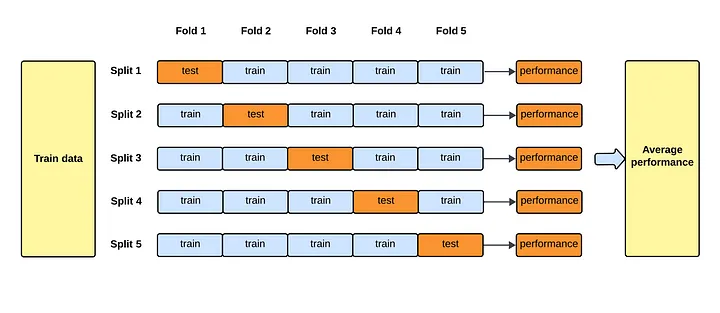

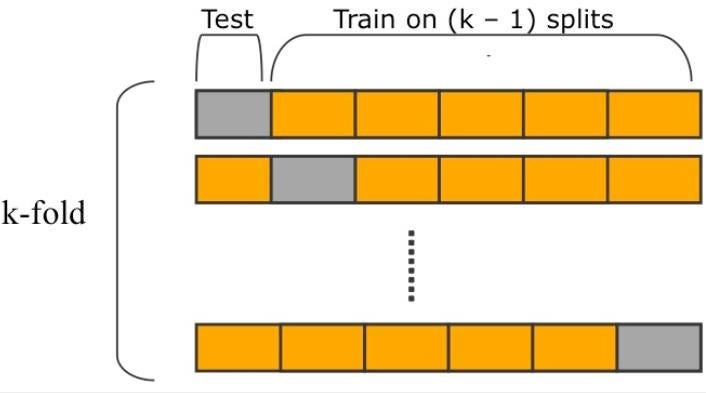

Cross validation:

Compares multiple models based on scoring, to estimate model performance on unseen data and to select the hyperparameters, by splitting the data into multiple folds (training, evaluation, and test)

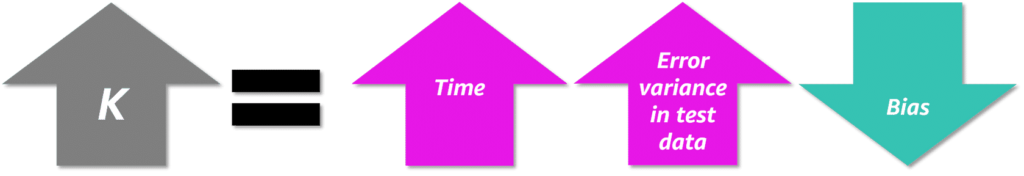

K-fold cross validation:

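Randomly partition the data into K segments (folds). In each iteration one segment is the Evaluation data and the remaining (K-1) segments are the training data; repeat K times so that every segment is used for evaluation once, then average the results. Smaller K values give more bias.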
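A minimal sketch of the usual k-fold procedure, using only the standard library. The function name `k_fold_indices` and the fixed seed are assumptions for illustration; each fold serves as the evaluation set exactly once.

```python
import random


def k_fold_indices(n, k, seed=0):
    """Yield (train, evaluation) index lists for k-fold cross validation."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)        # randomize to avoid ordering bias
    folds = [idx[i::k] for i in range(k)]   # k roughly equal segments
    for i in range(k):
        evaluation = folds[i]
        train = [j for f_i, fold in enumerate(folds) if f_i != i for j in fold]
        yield train, evaluation


splits = list(k_fold_indices(n=10, k=5))
```

In practice a model would be trained on each `train` list and scored on the matching `evaluation` list, and the k scores averaged.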

Notes about splitting data:

- Data ordering: can lead to bias; randomize the data

- K size: a smaller K gives more bias; use a larger K

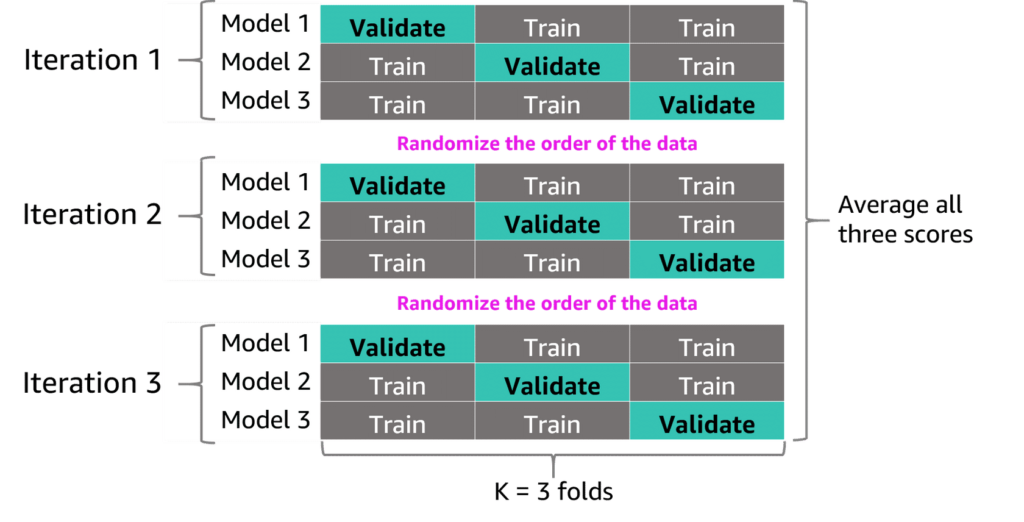

- K-fold with shuffling: apply k-fold and shuffle the data in each iteration

Iterated k-Fold validation with shuffle

Shuffle data between K-Folds, then average the results

Time Series Cross Validation

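Uses forward chaining: train on earlier observations and validate on the observations that follow them, so the model is never evaluated on data older than its training data. Helps ensure the model is not overtrained and generalizes.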
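Forward chaining can be sketched as an expanding training window followed by a validation window. The function name and the equal-sized windows are assumptions for this illustration; other window schemes are possible.

```python
def forward_chaining_splits(n, n_splits):
    """Yield (train, validation) indices where training always precedes validation."""
    fold = n // (n_splits + 1)
    for i in range(1, n_splits + 1):
        train = list(range(0, i * fold))                    # everything seen so far
        validation = list(range(i * fold, (i + 1) * fold))  # the next window in time
        yield train, validation


chain = list(forward_chaining_splits(n=10, n_splits=4))
```

Unlike shuffled k-fold, the indices keep their time order, so no future observation leaks into training.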

Leave One Out Cross Validation (LOOCV)

Used to estimate the performance of machine learning algorithms when they are used to make predictions on data not used to train the model. It is k-fold cross validation with k equal to the number of observations: each iteration leaves a single observation out for testing.

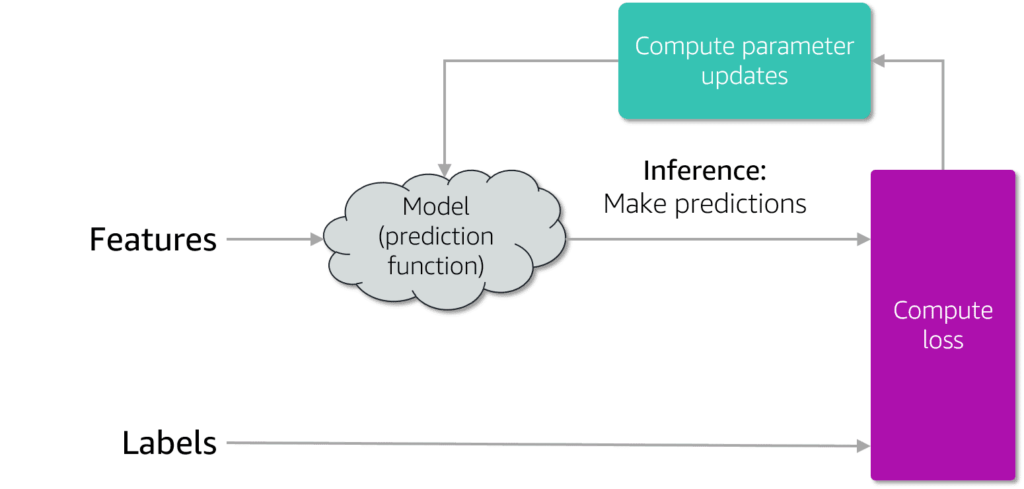

Train The Model

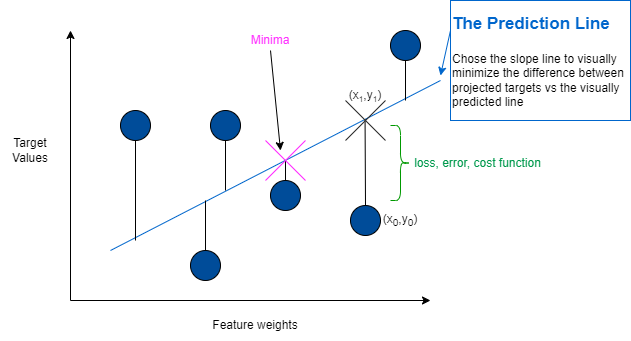

- During the training iterations, the algorithm calculates the parameters (or weights) based on the previous iteration so that the predicted values are as close as possible to the observed (true) values. The iterations continue until the error reaches the target or the defined number of iterations has been reached.

The model converges if the error decreases over the iterations (successful); otherwise the model needs to be re-evaluated.

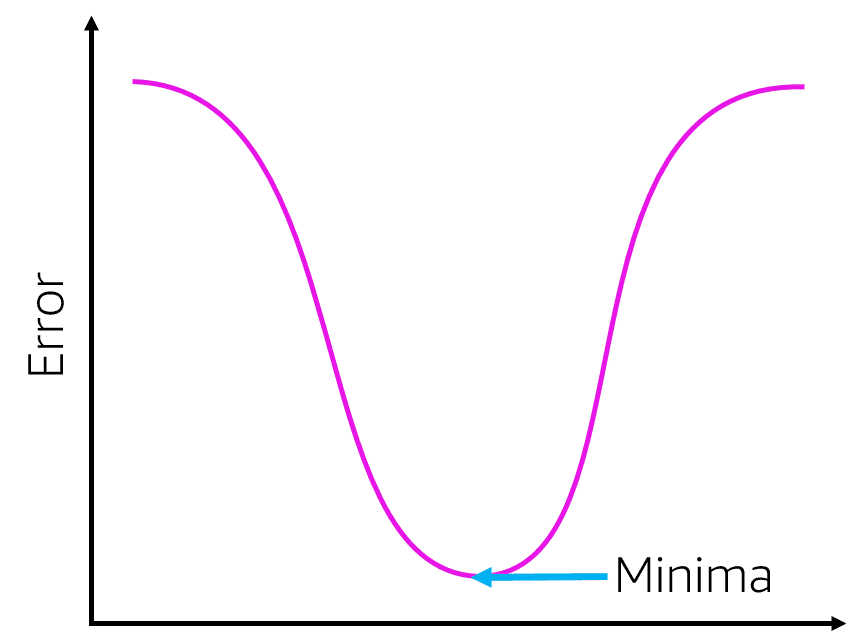

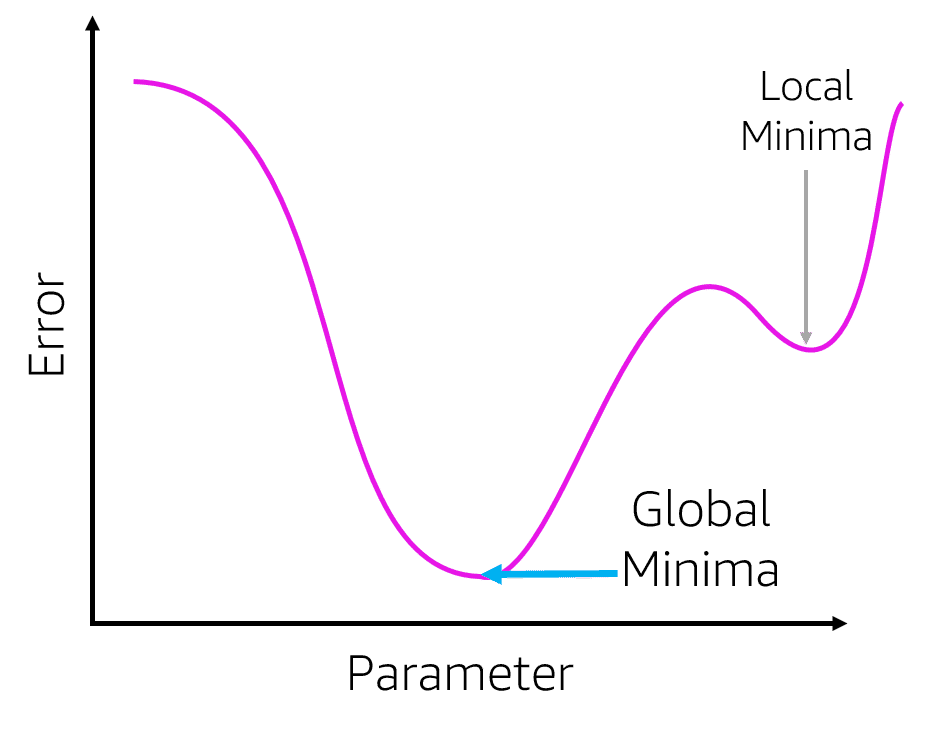

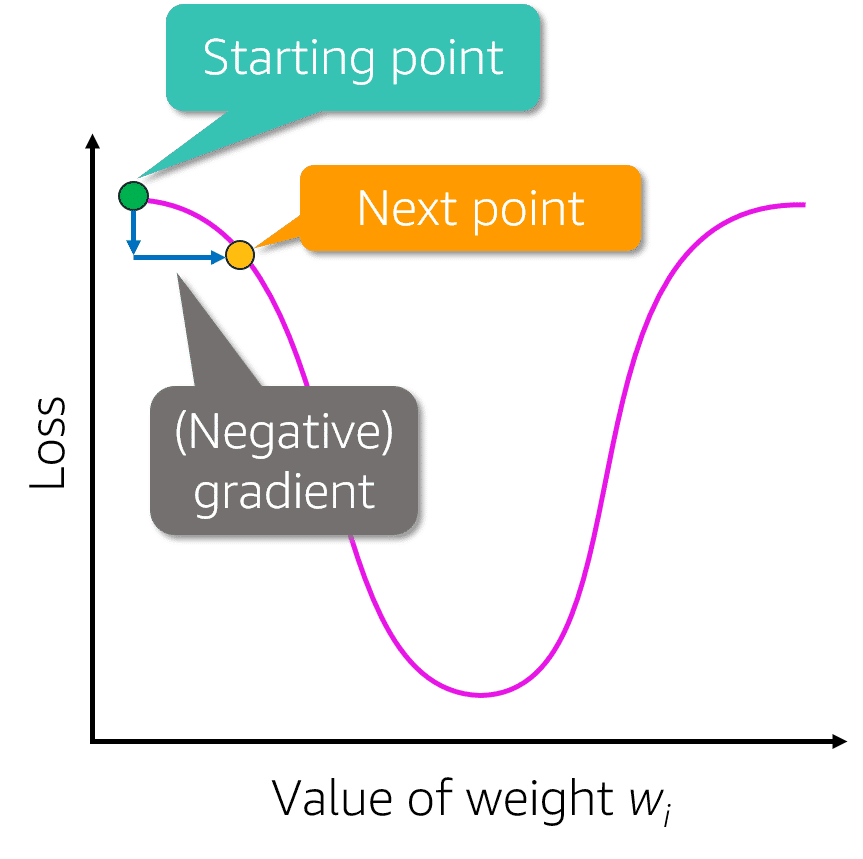

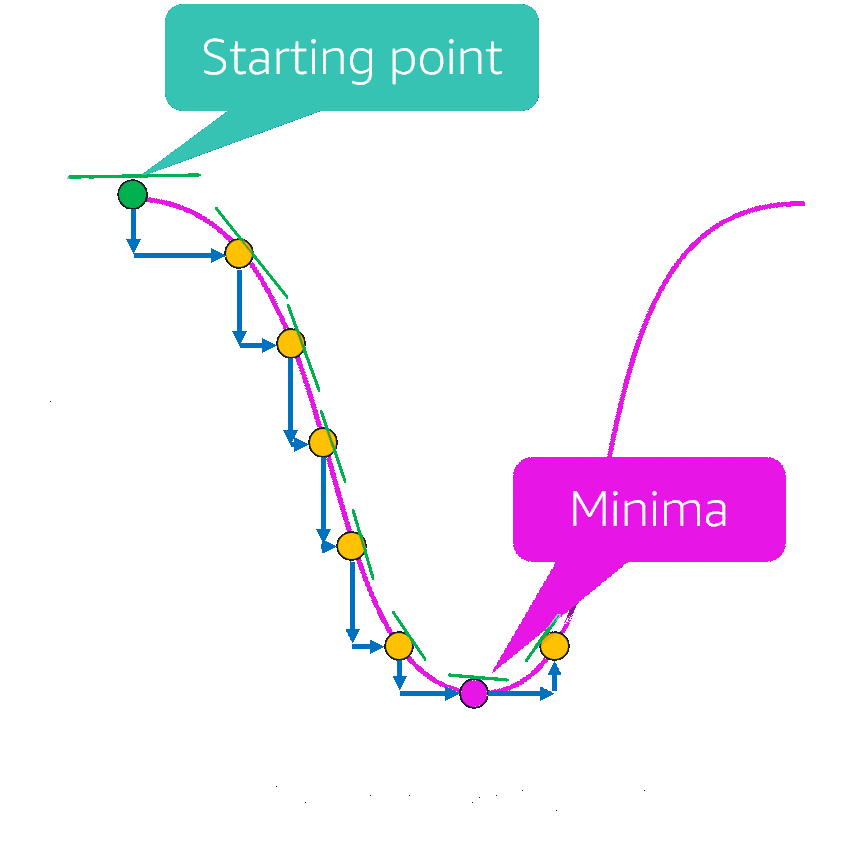

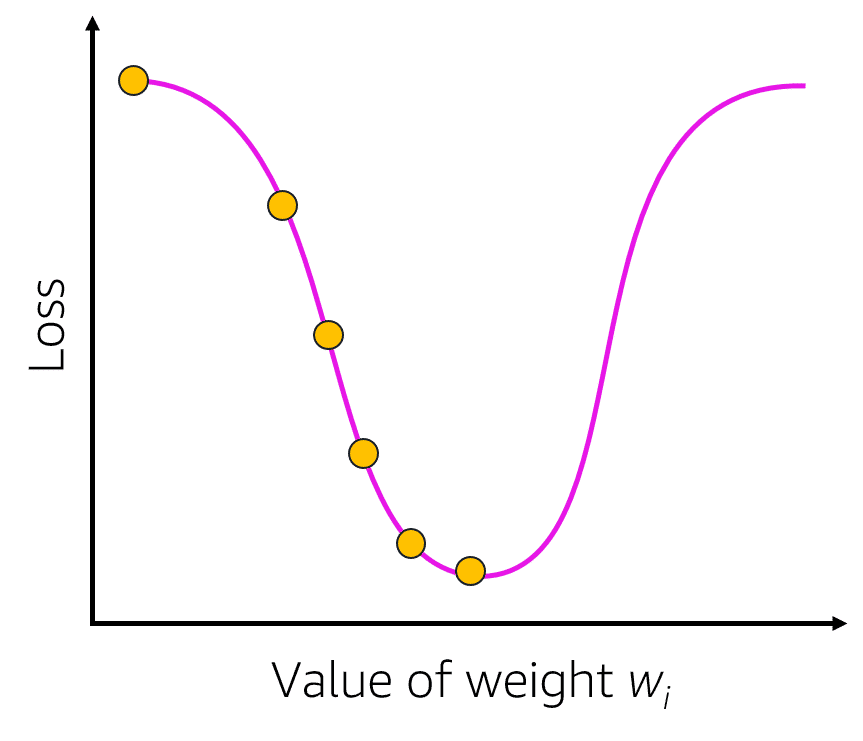

The computer calculates the parameters using the loss function (objective function) and optimization techniques. The loss function measures the error given a set of weights, that is, the deviation from the actual value.

- Minima:=the point with the least amount of error, found by traversing the well (trough)

- Global minima:=the lowest trough

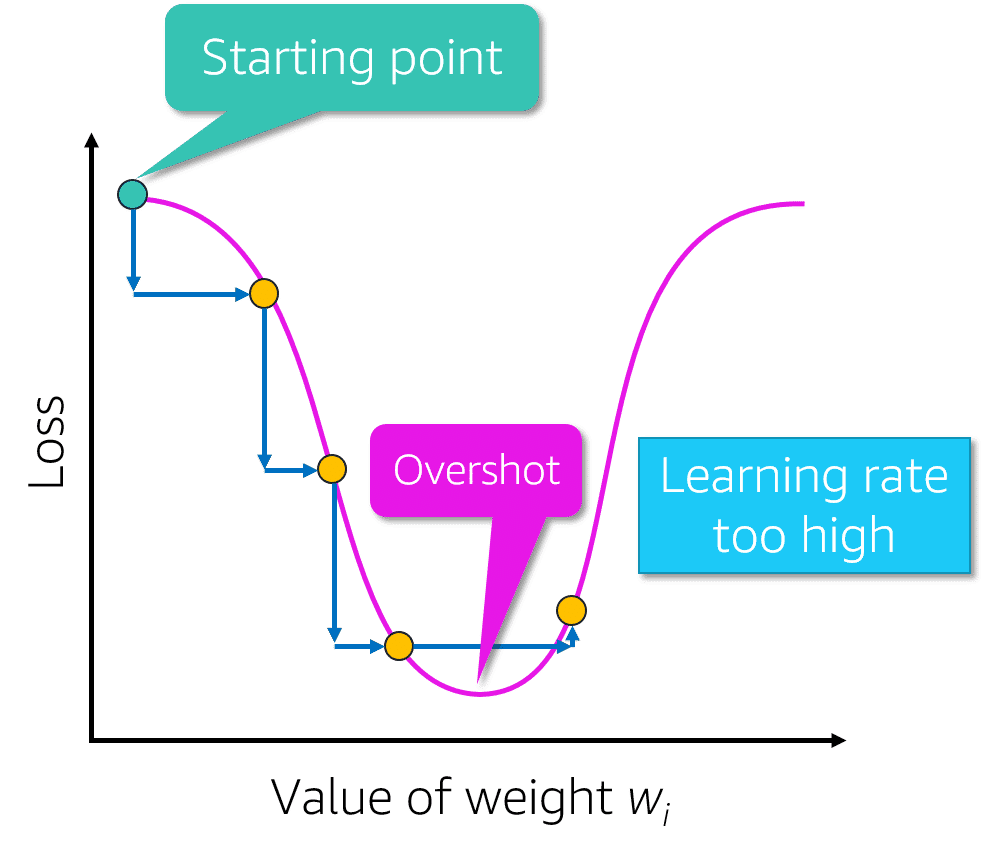

- Gradient descent:= technique used to find the minima by calculating the slope (gradient) of the curve; works on small datasets, is slow, requires the dataset to fit in memory, and may fail to reach the global minima; these limitations can be reduced with the stochastic gradient descent algorithm

- Learning rate:= a hyperparameter of the model; the size of the step (the delta between two weights). Large steps cause overshoot (missing the trough (Minima)); small steps move slowly and may fail to reach the Minima. Optimized by reducing the step size as it gets closer to the Minima

- Epoch:= one iteration of training the model over the dataset

- Hyperparameter:= a parameter that is external to the model, set by a human, and cannot be estimated from the data, ex. learning rate; the search for the best hyperparameters is called hyperparameter optimization (tuning)

- Collinearity:= when two or more features are tightly correlated with each other, so they carry redundant information; use PCA and drop low-variance components

- [Discrete | Continuous] objectives:= whether the objective is a specific distinct value (classification) [F1, recall, precision, cross-entropy] or a continuous value (regression) [MSE, RMSE]
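The effect of the learning rate on gradient descent can be seen on a toy loss. This sketch assumes a one-parameter loss, loss(w) = (w - 3)^2, chosen only for illustration; its gradient is 2(w - 3) and its minimum is at w = 3.

```python
def gradient_descent(grad, w0, learning_rate, epochs):
    """Repeatedly step against the gradient; returns final weight and history."""
    w = w0
    history = [w]
    for _ in range(epochs):
        w = w - learning_rate * grad(w)
        history.append(w)
    return w, history


def grad(w):
    # Gradient of the toy loss (w - 3)^2
    return 2 * (w - 3)


# A moderate step size converges towards the minimum at w = 3.
w_good, _ = gradient_descent(grad, w0=0.0, learning_rate=0.1, epochs=100)

# A step size that is too large overshoots the trough on every step and diverges.
w_big, hist_big = gradient_descent(grad, w0=0.0, learning_rate=1.1, epochs=10)
```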

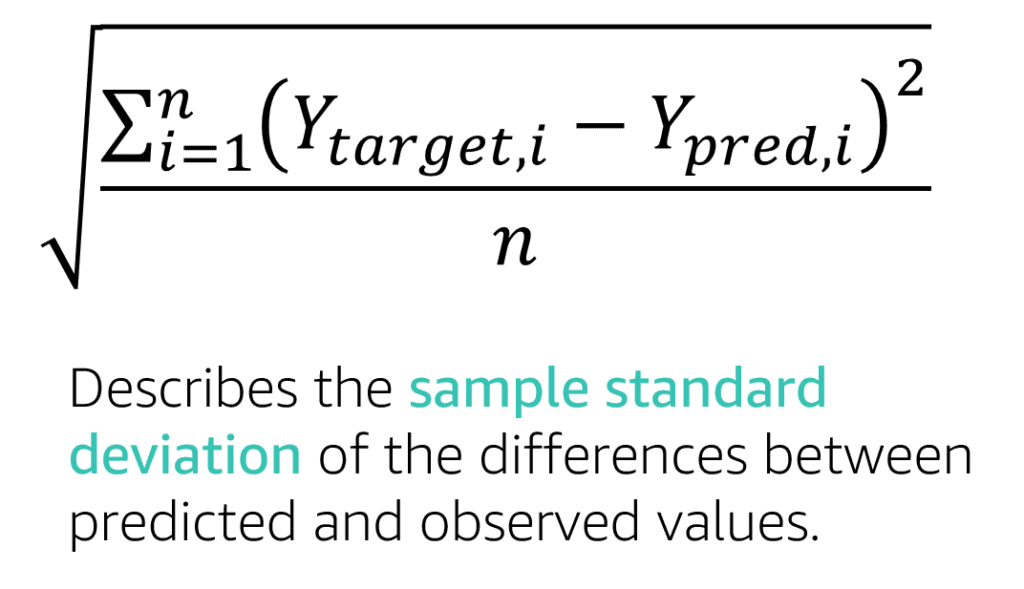

Loss Function and Optimization Techniques

RMSE: Root Mean Square Error

The square root of the mean of the squared differences between the predicted and observed values (the standard deviation of the prediction errors). Used for regression models.
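As a small worked example, RMSE can be computed directly from its definition. The function name and sample values are illustrative only.

```python
import math


def rmse(y_true, y_pred):
    """Root mean square error between observed and predicted values."""
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


error = rmse([0.0, 0.0], [3.0, 4.0])  # errors of 3 and 4, so the mean square is 12.5
```

Because the errors are squared before averaging, a single large miss raises RMSE more than several small ones.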

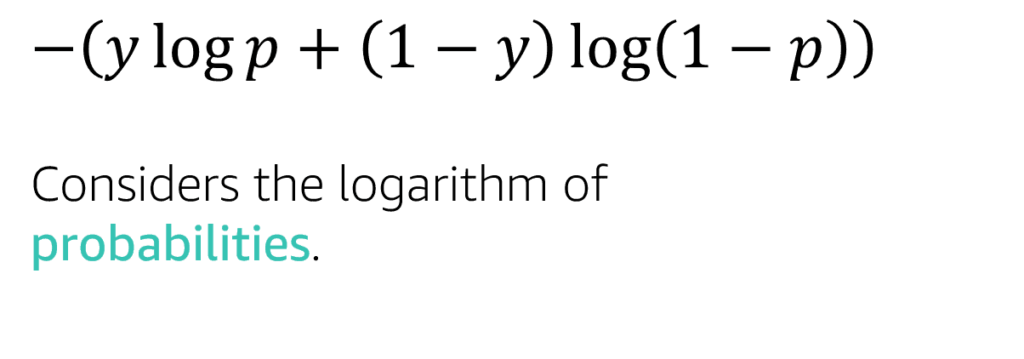

Log Likelihood Loss (cross-entropy)

Averages the negative logarithm of the probability the model assigns to the true class. Used for binary classification.
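A minimal sketch of binary cross-entropy (log loss). The clipping constant `eps` is an assumption added to avoid taking the logarithm of zero.

```python
import math


def log_loss(y_true, p_pred, eps=1e-15):
    """Mean negative log-likelihood for binary labels (0 or 1)."""
    total = 0.0
    for y, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1 - eps)  # keep log() finite
        total += -(y * math.log(p) + (1 - y) * math.log(1 - p))
    return total / len(y_true)


uncertain = log_loss([1, 0], [0.5, 0.5])      # equals ln(2)
confident_right = log_loss([1, 0], [0.9, 0.1])
confident_wrong = log_loss([1, 0], [0.1, 0.9])
```

Confident wrong predictions are penalized far more heavily than uncertain ones.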

Gradient Descent Optimized Techniques

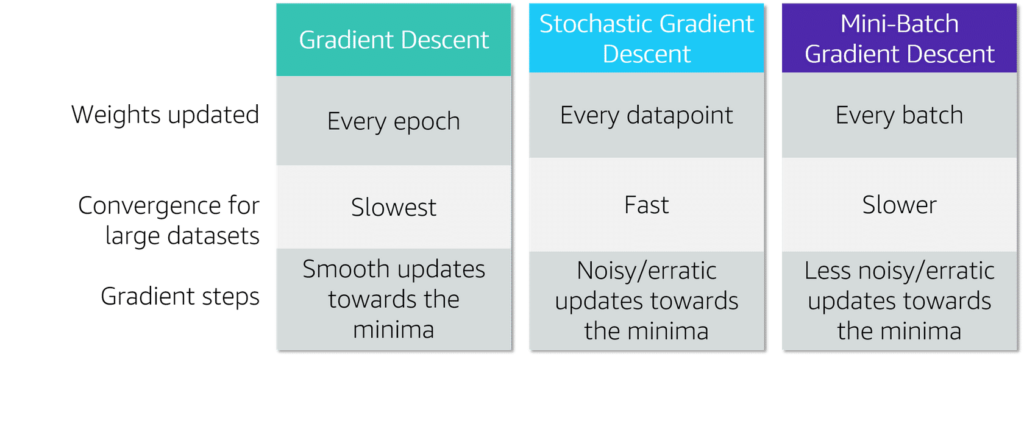

- Gradient Descent (GD): goes through all of the data (one epoch) for each parameter update; each step is slow, but fewer steps are needed to reach the Minima.

- Stochastic Gradient Descent (SGD): updates the parameters for each data point (record); the drawback is oscillation in different directions

- Mini-Batch Gradient Descent: uses a small batch to update the parameters; less noisy updates than SGD
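The three variants differ only in how many records feed each update, which the sketch below expresses with a single `batch_size` argument. It fits y = w * x on noise-free toy data; the function name, learning rate, and data are assumptions for illustration.

```python
import random


def train(xs, ys, batch_size, learning_rate=0.05, epochs=200, seed=0):
    """Fit y = w * x by gradient descent on mean squared error."""
    rng = random.Random(seed)
    w = 0.0
    data = list(zip(xs, ys))
    for _ in range(epochs):
        rng.shuffle(data)
        for start in range(0, len(data), batch_size):
            batch = data[start:start + batch_size]
            # Average gradient of (w*x - y)^2 over the records in the batch
            grad = sum(2 * (w * x - y) * x for x, y in batch) / len(batch)
            w -= learning_rate * grad
    return w


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]                    # true slope is 2
w_gd = train(xs, ys, batch_size=len(xs))     # full batch: one update per epoch
w_sgd = train(xs, ys, batch_size=1)          # stochastic: one update per record
w_mb = train(xs, ys, batch_size=2)           # mini-batch: in between
```

On real, noisy data the per-record updates of SGD would wander around the minimum, while larger batches smooth the path at a higher cost per step.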

Feature Engineering

Feature Engineering:

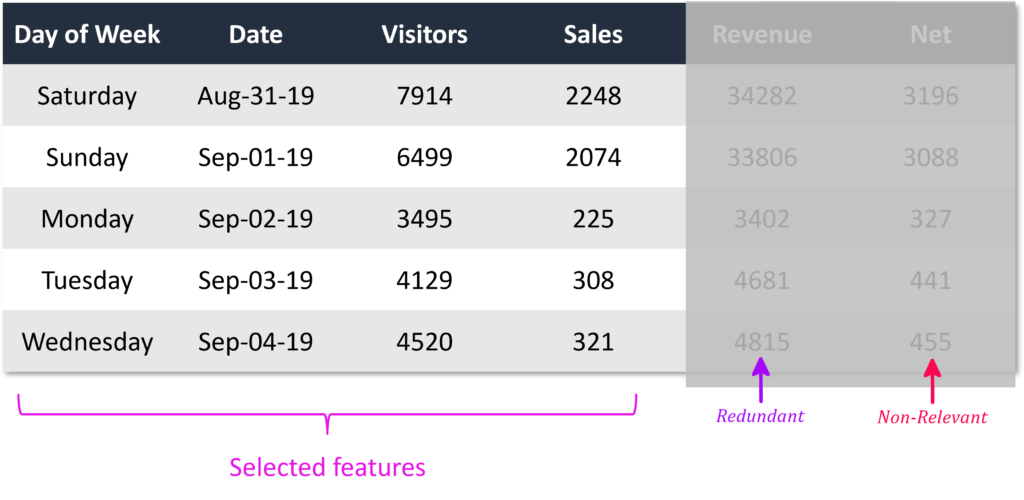

is the process of extracting more information from existing data in order to improve the model’s prediction power and help the model learn faster. It requires domain knowledge of the data. For example, identify the days of the week (Sat/Sun) where sales figures follow a different pattern.

Features:

an attribute that helps identify the object; it can be numeric, such as age, or binary, such as car_windshield_present. In NLP (Natural Language Processing) the features are words; for structured data, PCA (Principal Component Analysis) or t-SNE (t-distributed Stochastic Neighbor Embedding) reduce dimensionality.

Feature Distribution:

- Binomial: binary outcome [ Y | N ]

- Poisson: Count of events per period

- Normal (Gaussian): data is distributed symmetrically around the mean (bell-shaped curve)

The main components of Feature Engineering:

Feature Extraction

Creates new features from the existing dataset and reduces dimensionality. For example, extract features from an image to replace raw image pixels; in Natural Language Processing, extract features from popular words, excluding articles and prepositions; and in structured data, use principal component analysis (PCA), an unsupervised technique based on the interrelations between variables, or t-distributed stochastic neighbor embedding (t-SNE).

Embedding

Convert high dimensional vectors into low-dimensional space to make it easier to do machine learning with large sparse vector inputs. Embeddings also capture the semantics of the underlying data by placing similar items closer in the low-dimensional space. This makes the features more effective in training downstream models.

Feature Selection

Selecting a subset of features that are most useful to the problem.

Filtering subset of features using scoring methods to rank and choose features such as correlation or importance

Feature Creation and Transformation

Generating features from existing features, such as generating day, month, year from a date, numeric features

- Logarithmic transformation: smooths the data distribution; works on positive, non-zero values only

- Square/cube root: reduces variance; square root works on zero and positive values only, cube root works on any value.

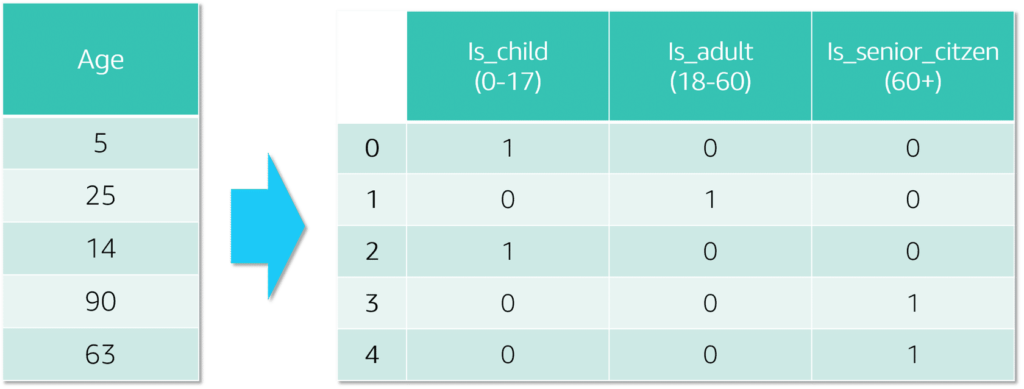

- Binning:= grouping continuous numbers into groups, such as age (child, adult, senior)

- Scaling: converts values into a common scale, such as 0 . . 1, using one of the scalers below

Mean/Variance standardization:

Reduces the impact of outliers. Rescales each feature to a mean of 0 and a standard deviation of 1, which gives smaller values.

MinMax:

Scales values to 0..1. Best with small standard deviations.

MaxAbs:

Divides every value of a feature by the maximum absolute value of that feature.

Robust:

Subtracts the median of the feature and divides by the difference between the 75th and 25th percentiles (the interquartile range). Minimizes the impact of large marginal outliers.

- Normalizer: rescales each observation (row), rather than each feature (column), so that the row has unit norm.
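The feature scalers can be compared on one small column that contains an outlier. This is a standard-library sketch; the function names are hypothetical, and the quartile method ("inclusive") is an assumption, since libraries differ slightly in how they interpolate percentiles.

```python
import statistics


def standardize(values):
    """Mean/variance scaling: result has mean 0 and standard deviation 1."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return [(v - mean) / std for v in values]


def min_max(values):
    """Scale to the range 0..1."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


def max_abs(values):
    """Divide by the maximum absolute value of the feature."""
    m = max(abs(v) for v in values)
    return [v / m for v in values]


def robust(values):
    """Subtract the median and divide by the interquartile range."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return [(v - median) / (q3 - q1) for v in values]


column = [1, 2, 3, 4, 100]  # 100 is an outlier
scaled = {f.__name__: f(column) for f in (standardize, min_max, max_abs, robust)}
```

With min-max scaling the outlier squeezes the other four values close to 0, while the robust scaler keeps them spread out because the median and quartiles ignore the extreme value.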

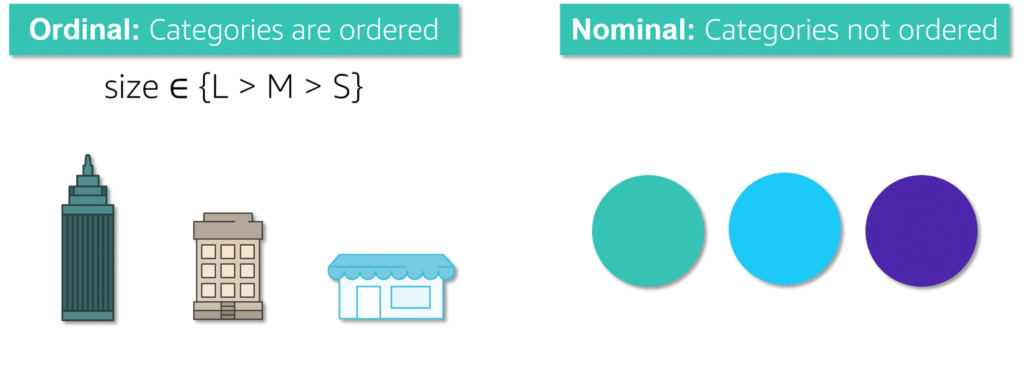

Categorical Data Transformation:

Ordinal

Categories ordered and related such as sizes [ S | M | L ] , distances [ near | far], mapped 1 to 1 ex. S = 1, M=5, L=10

Nominal

Categories not ordered and not related such as colors [ Red | Blue | Green ] , Country [ CA | US | MX . . . ]

One-hot encoding: each category is given its own column with values [ 0 | 1 ] for matched values. To reduce the number of columns, group values by similarity, for ex. Territory for countries.

Cartesian

Takes categorical variables or text as input, and produces new features that capture the interaction between input variables.

N-Gram

Takes a text variable as input and produces strings corresponding to sliding a window of n words (n is user-configurable) over the text, generating an output at each position. An n-gram is a sequence of n words: a 2-gram (which we’ll call a bigram) is a two-word sequence like “please turn”, “turn your”, or “your homework”, and a 3-gram (a trigram) is a three-word sequence like “please turn your” or “turn your homework”.
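The sliding window can be written as a short function. It assumes simple whitespace tokenization; the name `ngrams` is chosen for this example.

```python
def ngrams(text, n):
    """Return every run of n consecutive words in the text."""
    words = text.split()
    return [" ".join(words[i:i + n]) for i in range(len(words) - n + 1)]


bigrams = ngrams("please turn your homework", 2)
trigrams = ngrams("please turn your homework", 3)
```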

Orthogonal Sparse Bigram (OSB)

Slides a window of size n over the text and outputs every pair of words that includes the first word in the window, ex. “The quick brown fox” => {(the,quick) (the,brown) (the,fox)}
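A simplified sketch of the pairing rule, assuming whitespace tokenization and lowercasing. The function name `osb` and the tuple output are assumptions; production implementations typically also encode the distance between the two words, which is omitted here.

```python
def osb(text, window):
    """Pair the first word of each window position with the words that follow it."""
    words = text.lower().split()
    pairs = []
    for i in range(len(words) - 1):
        first = words[i]
        for j in range(i + 1, min(i + window, len(words))):
            pairs.append((first, words[j]))
    return pairs


pairs = osb("The quick brown fox", window=4)
```

The first three pairs come from the window that starts at the first word; later pairs come from sliding the window forward one word at a time.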

Bag of words

NLP algorithm that creates tokens from the input document text and outputs a statistical depiction of the text, such as a histogram of word counts

Term Frequency-Inverse Document Frequency(tf-idf)

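Determines how important a word is in a document: the weight grows with how often the word appears in that document (term frequency) and shrinks for words that are common across all documents (inverse document frequency), rather than using just the count; words are ordered by weight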
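One common formulation of tf-idf, sketched with the standard library. Several variants of the weighting exist; this one uses relative term frequency and an unsmoothed logarithmic inverse document frequency, and the function name and sample documents are assumptions.

```python
import math
from collections import Counter


def tf_idf(documents):
    """Return one {word: weight} dict per document."""
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    # Number of documents in which each word appears at least once
    doc_freq = Counter(word for tokens in tokenized for word in set(tokens))
    scores = []
    for tokens in tokenized:
        counts = Counter(tokens)
        scores.append({
            word: (count / len(tokens)) * math.log(n_docs / doc_freq[word])
            for word, count in counts.items()
        })
    return scores


weights = tf_idf(["the cat sat", "the dog barked", "the cat purred"])
```

The word "the" appears in every document, so its weight is zero, while a word unique to one document receives the highest weight there.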

Encoding Techniques

Used to encode categorical features into a numeric representation:

- Ordinal: categories are ordered

- Nominal: Categories are unrelated not ordered

- One-hot encoding: works on a limited number of categories, such as day of week, months, etc.

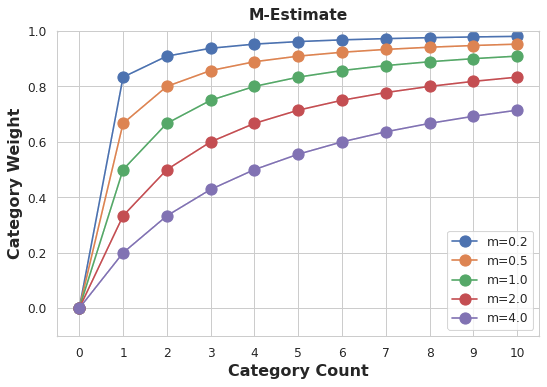

- Target encoding with smoothing: for a large number of categories; replaces each category with the average target value of that category (mean transform), smoothed toward the overall mean.
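Both encodings can be sketched without any library. The smoothing formula below (category sum plus `smoothing` times the global mean, divided by category count plus `smoothing`) is one common choice and an assumption here; the function names are hypothetical.

```python
def one_hot(values):
    """One column per category, 1 where the value matches the category."""
    categories = sorted(set(values))
    return [[1 if v == c else 0 for c in categories] for v in values]


def target_encode(categories, targets, smoothing=2.0):
    """Replace each category with its smoothed mean target value."""
    global_mean = sum(targets) / len(targets)
    sums, counts = {}, {}
    for c, t in zip(categories, targets):
        sums[c] = sums.get(c, 0.0) + t
        counts[c] = counts.get(c, 0) + 1
    encoding = {
        # Rare categories are pulled toward the global mean
        c: (sums[c] + smoothing * global_mean) / (counts[c] + smoothing)
        for c in counts
    }
    return [encoding[c] for c in categories]


columns = one_hot(["Red", "Blue", "Red"])           # column order: Blue, Red
encoded = target_encode(["a", "a", "b"], [1, 1, 0], smoothing=1.0)
```

One-hot encoding adds a column per category, so it suits short category lists; target encoding always produces a single numeric column, which is why it scales to many categories.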

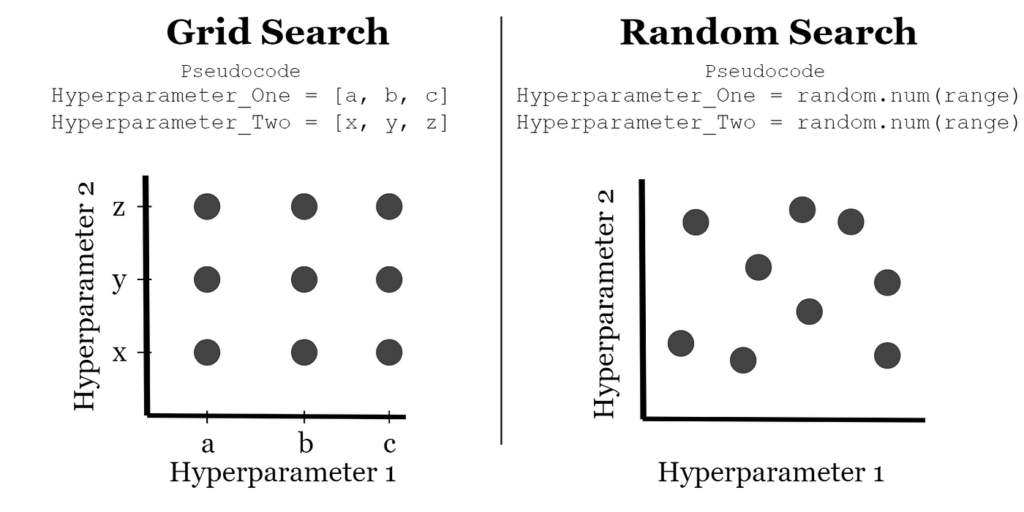

Hyperparameter Tuning

Hyperparameter categories:

Model

Help define the model architecture, such as the number of layers in a neural network. Examples: filter size, pooling, stride, padding

Optimizer

How the model learns patterns in the data. Examples: gradient descent, stochastic gradient descent, momentum

Data

Define attributes of the data itself, such as cropping or resizing of images in the input data. Useful for small/homogeneous datasets

Search Hyperparameters

GridSearch

Trained and scored for hyperparameters at equal distance from each other; can train and score for every hyperparameter combination. Thorough but inefficient.

RandomSearch

Replaces the exhaustive enumeration of all combinations by selecting them randomly. Generalizes to continuous and mixed spaces. Explores many more values than grid search could for continuous hyperparameters. Can run concurrent training jobs without impacting the performance of the search.

Bayesian Search

The tool makes guesses about the best hyperparameter combinations, then uses regression on the results so far to refine those guesses and choose the combinations for the next round.

Good for exploring unknown areas.
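The difference between grid and random search can be sketched with a stand-in scoring function. Everything here is hypothetical: `score` replaces a real train-and-validate run, and the hyperparameter names and ranges are chosen only for illustration.

```python
import itertools
import random


def score(params):
    """Hypothetical validation score; peaks at eta=0.1, max_depth=6."""
    return -((params["eta"] - 0.1) ** 2) - 0.01 * (params["max_depth"] - 6) ** 2


def grid_search(grid):
    """Score every combination of the listed values."""
    names = list(grid)
    candidates = [dict(zip(names, combo)) for combo in itertools.product(*grid.values())]
    return max(candidates, key=score)


def random_search(ranges, n_trials, seed=0):
    """Score n_trials combinations sampled from the ranges."""
    rng = random.Random(seed)
    candidates = [
        {"eta": rng.uniform(*ranges["eta"]), "max_depth": rng.randint(*ranges["max_depth"])}
        for _ in range(n_trials)
    ]
    return max(candidates, key=score)


best_grid = grid_search({"eta": [0.01, 0.1, 0.3], "max_depth": [3, 6, 9]})
best_random = random_search({"eta": (0.01, 0.3), "max_depth": (3, 9)}, n_trials=20)
```

Grid search only ever tries the listed values, whereas random search can land on any value inside the continuous range, and its trials are independent so they can run concurrently.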

AWS Machine Learning Devices

- AWS DeepLens: programmable video camera for vision systems; uses Model Quantization to speed up processing with little loss of accuracy

- AWS DeepRacer: race car and simulator for learning reinforcement learning

- AWS DeepComposer: MIDI keyboard music with Generative Adversarial Networks (GANs)

- AWS Panorama: adds vision applications to an IP camera setup

References

Workshops and Tutorials

- Build ML models using SageMaker Studio Notebooks Code Repository

- The Machine Learning Pipeline on AWS

- Understanding the ML Pipeline on AWS –retired

- Practical Data Science with Amazon SageMaker

- MLOps Engineering on AWS

- Amazon SageMaker Studio for Data Scientists

- Building Streaming Data Analytics Solutions on AWS

- AWS Discovery Day: Machine Learning Basics

Tools

- RStudio: IDE host, share, develop, deploy ML projects

- Jupyter Notebooks

- SageMaker Studio

- SageMaker Prebuilt containers

- SageMaker Algorithms

- SageMaker Clarify

- SageMaker Model Tracking

- AWS Lake Formation

- SageMaker Canvas

Amazon SageMaker Components

Notebooks:

Uses Jupyter Notebooks, an open-source tool for creating and sharing notebook documents. Frameworks are prebuilt, optimized components for conducting ML work in the notebooks. A notebook instance is a virtual machine that hosts notebooks in a specific Conda (open-source Anaconda) managed environment or sparkmagic. A notebook is a collection of documents, code, reports, and data visualizations; its document structure is a collection of mixed cells [ Code | Markdown | Raw ]

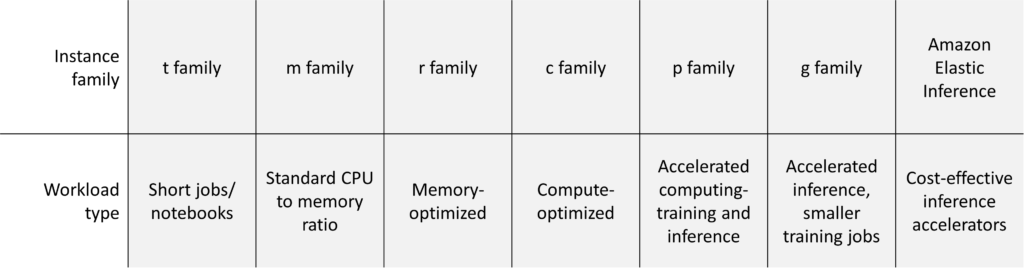

Create Notebook instances in the SageMaker Console; each notebook uses a kernel (the supported framework)

Instance Types:

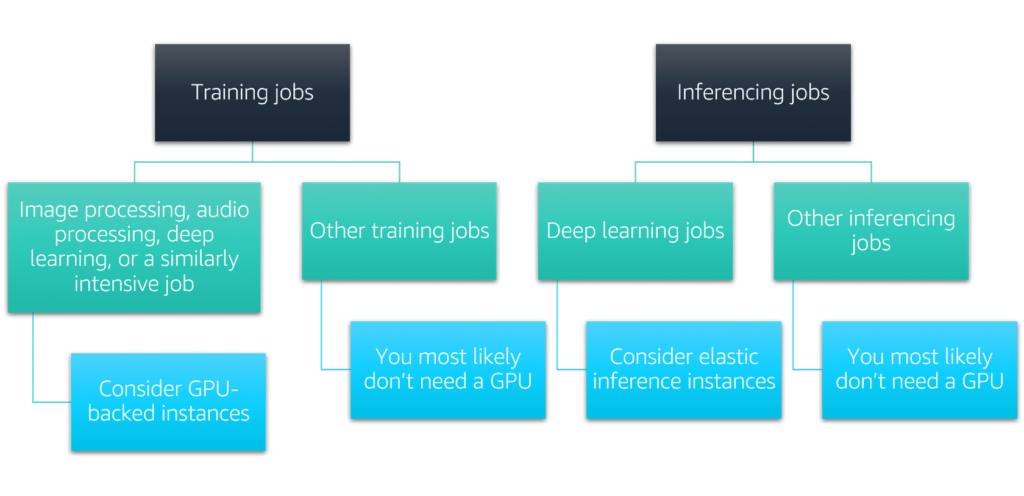

SageMaker workloads: Notebook, Training, and Inference (applying predictions in production)

- t family: ideal for Notebooks

- m, r, c, p family: ideal for traditional ML training

- g family: ideal for Deep Learning DL

- Elastic Inference and Inferentia (inf family): ideal for inference

Lifecycle Configuration

Data Visualization:

Histograms, cross-correlations, and scatter plots.

Model Selection:

Options of algorithms [Built-in algorithms, script on frameworks, Subscribed from AWS marketplace, Bring your own], Hyperparameter optimization and tuning (Automatically)

Ground Truth:

Uses combination of a selected human workforce [ public:=Amazon Mechanical Turk | staff | vendor ] and machine learning to create labeled datasets. Data Types [ Image | Text | Video — files | frames ], labeling techniques [ Chaining labeling jobs | label verification and adjustment | Batches | Annotation consolidation (bounding box, Semantic Segmentation, Name entity, output manifest, Mechanical Turk) ], Built-in Tasks [ Bounding Box | Image Classification | Semantic Segmentation ]

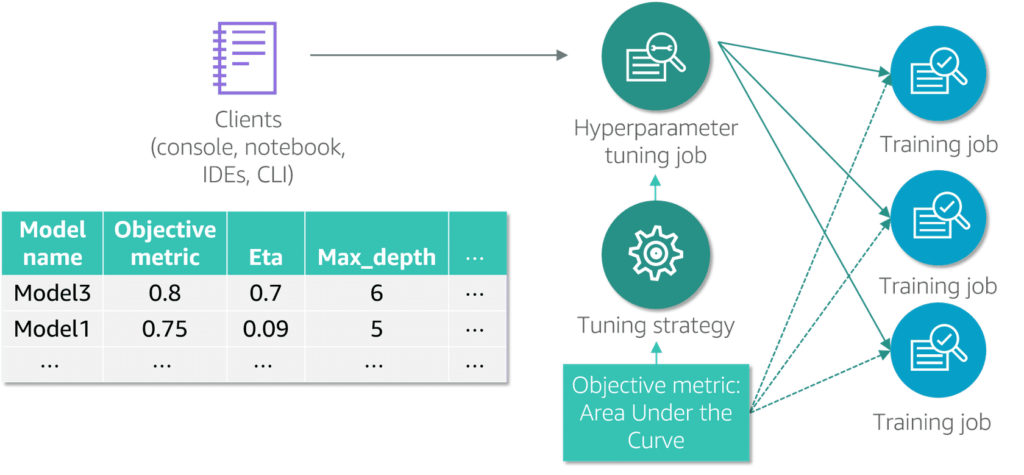

Hyperparameter auto tuning:

Amazon SageMaker finds the best version of a model by running many training jobs on a dataset using the algorithm and ranges of hyperparameters. It uses Gaussian process regression to choose the best-fitting hyperparameters and Bayesian optimization to balance exploiting known good values against exploring more hyperparameter values. Works best with small ranges.

Deployment:

- One Prediction at a time

- All predictions at once

Deployment in SageMaker

- SageMaker is one option to run and deploy Machine Learning models and inference. Fully managed end to end service to manage Machine Learning deployment, auto scaling, model hosting, HTTPS endpoints.

- Unmanaged ML deployment requires creating an AMI, launching compute instances (EC2, ECS, or EKS containers), and configuring auto scaling.

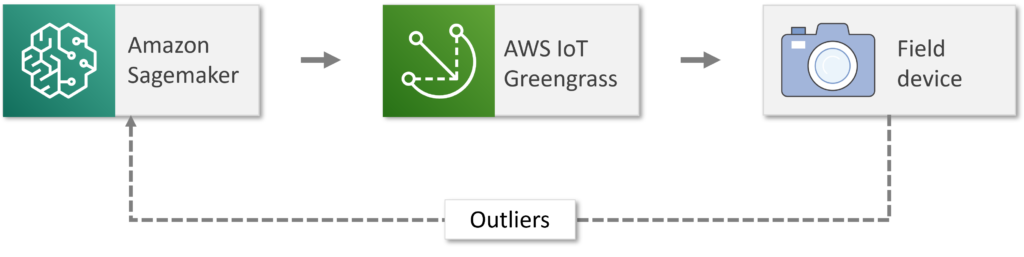

- ML on the Edge: on Edge devices with less power, such as security cams

- AWS IoT Greengrass: for ML models built on (SageMaker, DL AMI, DL containers)

- Amazon SageMaker Neo: a compiler converts the model to a common format, and a runtime optimized for the underlying hardware speeds up the inference process. Supports processors from ARM, NVIDIA, Xilinx, and Texas Instruments

Steps to deploy and host:

Create the model

CreateModel API: define the storage location and name the model for hosting and for running batch inference jobs

Create HTTPS Endpoint

CreateEndpointConfig API: creates the configuration for the HTTPS endpoint and sets the production variants for each model [ instance type, initial count, initial weight ]. A SageMaker Runtime VPC endpoint is used for invoke_endpoint API calls.

Deploy an HTTPS endpoint

CreateEndpoint API: specify the endpoint name, the endpoint configuration, and tags
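The three steps map onto three boto3 SageMaker client calls. The sketch below is illustrative only: the helper names, the variant name "AllTraffic", the instance type, and every ARN, URI, and model name passed in are placeholders, and `deploy` needs the boto3 package, AWS credentials, and an existing model artifact to actually run.

```python
def build_requests(model_name, image_uri, model_data_url, role_arn,
                   instance_type="ml.m5.large"):
    """Build keyword arguments for the three SageMaker API calls."""
    config_name = f"{model_name}-config"
    endpoint_name = f"{model_name}-endpoint"
    create_model = {
        "ModelName": model_name,
        "PrimaryContainer": {"Image": image_uri, "ModelDataUrl": model_data_url},
        "ExecutionRoleArn": role_arn,
    }
    create_endpoint_config = {
        "EndpointConfigName": config_name,
        "ProductionVariants": [{
            "VariantName": "AllTraffic",
            "ModelName": model_name,
            "InstanceType": instance_type,
            "InitialInstanceCount": 1,
            "InitialVariantWeight": 1.0,
        }],
    }
    create_endpoint = {"EndpointName": endpoint_name, "EndpointConfigName": config_name}
    return create_model, create_endpoint_config, create_endpoint


def deploy(requests):
    """Send the three requests in order: model, endpoint config, endpoint."""
    import boto3  # imported here so the request builder works without AWS access

    sm = boto3.client("sagemaker")
    model_req, config_req, endpoint_req = requests
    sm.create_model(**model_req)
    sm.create_endpoint_config(**config_req)
    sm.create_endpoint(**endpoint_req)


requests = build_requests(
    model_name="demo-model",                                   # placeholder
    image_uri="<account>.dkr.ecr.<region>.amazonaws.com/<image>",  # placeholder
    model_data_url="s3://<bucket>/model.tar.gz",               # placeholder
    role_arn="arn:aws:iam::<account>:role/<role>",             # placeholder
)
```

Adding a second entry to `ProductionVariants` with its own weight is how several variants of a model share one endpoint.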

Inference Options

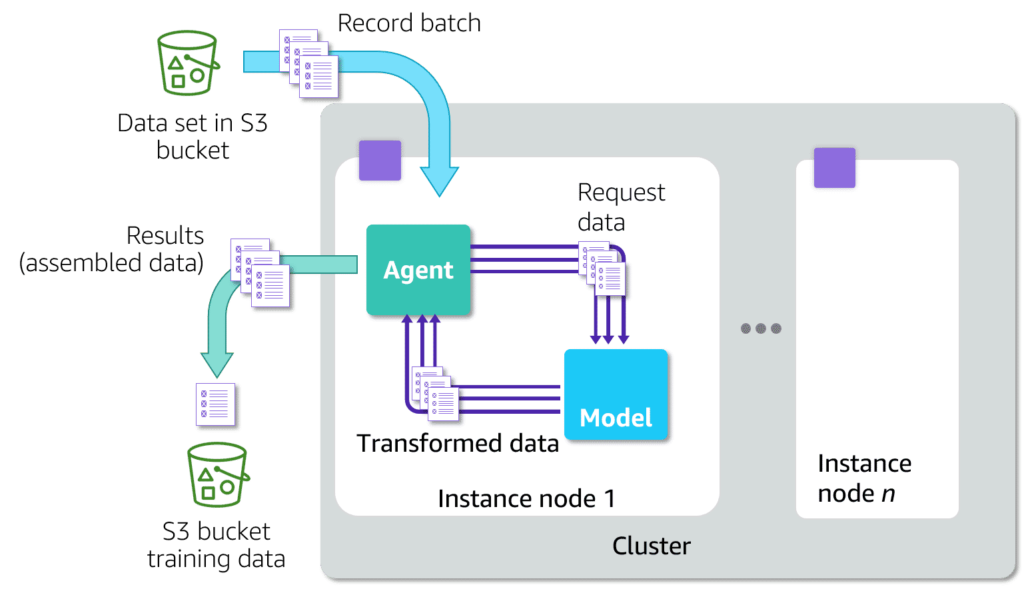

Batch Transform:

offline, large datasets, does not require a persistent endpoint; can associate input records with inferences to support interpretation of results.

- Real-Time: live predictions, sustained traffic, low latency, consistent performance, Multi-model/Multi-container, secure HTTPS endpoints, one datapoint at a time, used for immediate results, real-time endpoint, use Apache Spark Streaming

- Serverless: auto scales and auto provisions resources, subject to cold starts, suits unpredictable traffic patterns, CPU only, used for simple standard inferences with intermittent traffic patterns, serverless endpoint

- Asynchronous: queue based, near real-time, <1GB payloads, longer runtime, used for less time-sensitive workloads with moderate payloads, asynchronous endpoint

- Batch: large datasets, higher throughput, event/schedule based, can associate input records with inferences to support interpretation of results, used for offline processing that is not time sensitive

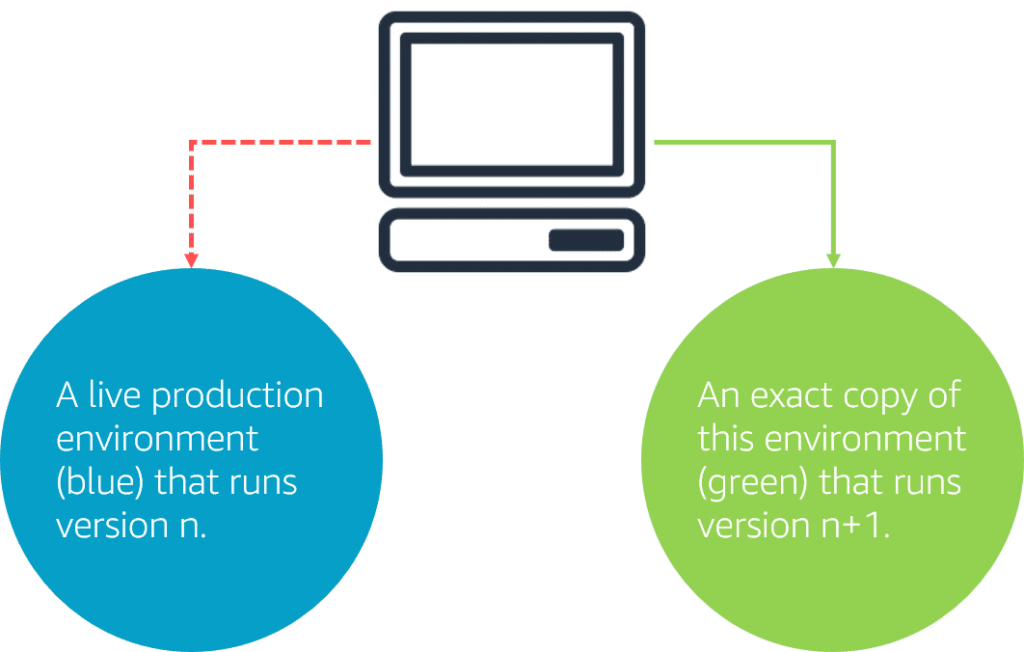

Blue/Green Deployment

Two identical production environments: test the traffic on the new deployment and revert in case of issues. Use UpdateEndpoint() to switch.

Canary and A/B Deployment

Deploy a new identical environment for a small subset of users, e.g. 20% (canary deployment), or split users 50/50 (A/B deployment). Use the traffic-shifting mode to test traffic on the new deployment, then revert in case of issues; on success, either gradually shift traffic to the new environment or adjust the weights in a single step until a single model is processing all of the live traffic.

- All at once: single step to shift the traffic 100%

- Canary: two steps, c% then 100%

- Linear: shift a fixed portion of traffic (p%) at fixed intervals, n steps until 100%
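
The three traffic-shifting modes differ only in the sequence of cumulative traffic percentages sent to the new fleet. A small sketch of that sequence follows; the function name and the default percentages are assumptions for illustration, not SageMaker defaults.

```python
def traffic_schedule(mode, canary_percent=10, step_percent=25):
    """Return the cumulative % of traffic on the new fleet after each step."""
    if mode == "all_at_once":
        return [100]
    if mode == "canary":
        if not 0 < canary_percent < 100:
            raise ValueError("canary_percent must be between 0 and 100")
        # Two steps: a small canary share, then everything.
        return [canary_percent, 100]
    if mode == "linear":
        if not 0 < step_percent <= 100:
            raise ValueError("step_percent must be in (0, 100]")
        # Equal increments, with the final step capped at 100%.
        return list(range(step_percent, 100, step_percent)) + [100]
    raise ValueError(f"unknown mode: {mode}")
```

For example, `traffic_schedule("linear", step_percent=25)` returns `[25, 50, 75, 100]`, while `traffic_schedule("canary", canary_percent=20)` returns `[20, 100]`.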

Offline testing: uses historic data and an alpha endpoint.

Online testing with live data: use the A/B testing model.

Deploy multiple variants of the model on same HTTPS endpoint with one endpoint configuration that describes all variants of the model.
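
When several variants share one endpoint, each variant receives traffic in proportion to its weight divided by the sum of all variant weights. A minimal sketch of that arithmetic, with hypothetical variant names:

```python
def traffic_share(variant_weights):
    """Map each variant name to its fraction of endpoint traffic."""
    total = sum(variant_weights.values())
    if total <= 0:
        raise ValueError("at least one variant needs a positive weight")
    return {name: weight / total for name, weight in variant_weights.items()}


# Hypothetical A/B setup: production keeps 90% of traffic, the candidate gets 10%.
shares = traffic_share({"production": 9, "candidate": 1})
```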

Retrieval Augmented Generation (RAG)

Retrieve data from outside a foundation model and augment your prompts by adding the relevant retrieved data in context. QuickSight Feature.
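
The retrieve-then-augment idea can be shown without any model at all. The sketch below uses a toy word-overlap retriever in place of a real vector search, and only builds the augmented prompt; the function names, the prompt wording, and the sample documents are all assumptions for illustration.

```python
def retrieve(query, documents, k=2):
    """Rank documents by how many query words they share (toy retriever)."""
    query_words = set(query.lower().split())
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & set(doc.lower().split())),
        reverse=True,
    )
    return ranked[:k]


def augment_prompt(query, documents, k=2):
    """Prepend the retrieved passages to the user's question."""
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return f"Use the context to answer.\nContext:\n{context}\nQuestion: {query}"


# Hypothetical knowledge base outside the foundation model.
docs = [
    "shipping takes five days",
    "our refund policy allows returns within 30 days",
    "support is open on weekdays",
]
prompt = augment_prompt("refund policy", docs, k=1)
```

The resulting `prompt` is what would be sent to the foundation model in place of the bare question.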

Amazon SageMaker inference pipeline

Processes inference data the same way as in training.

Monitoring the deployment

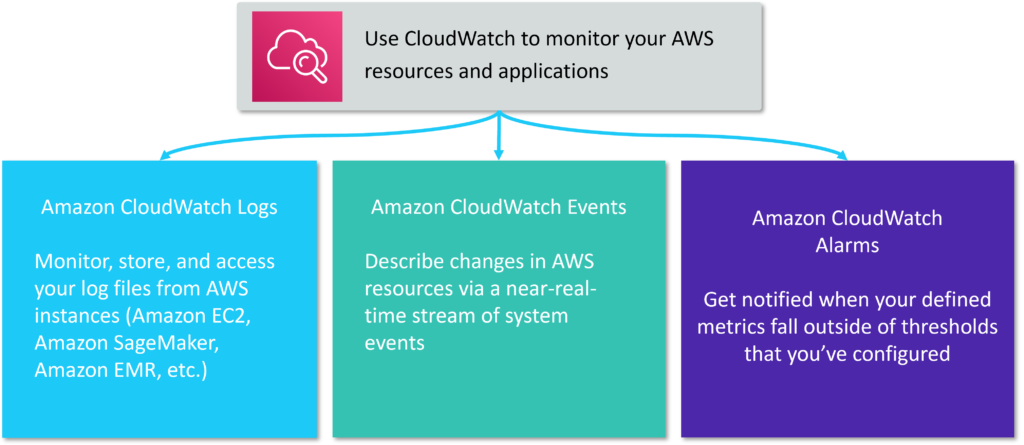

Monitoring Categories

- Business Goals and KPIs: used to understand how the model meets your business goals, User experience

- Hosting infrastructure: Performance and cost of infrastructure (latency, utilization, scaling)

- Model Performance: detect drift (divergence of the data)

Model Monitoring

- Data Quality

- Model Quality: metrics such as accuracy and F1 score

- Bias and Drift

- Feature attribution drift ( explainability )

Monitoring Tools integration (CloudWatch, EventBridge, and CloudTrail)

Production readiness testing comparison strategies:

- Shadow Testing: two models side by side, sharing the same endpoint or using separate ones; the shadow model receives a sampled percentage of requests without responding; capture key metrics with DataCapture and compare logs

- A/B Testing: production and candidate variants, multiple models single endpoint, CloudWatch monitoring

Data Security and Privacy

Tokenization

The process of replacing actual sensitive data elements with non-sensitive data elements that have no exploitable value for data security purposes. Security-sensitive applications use tokenization to replace sensitive data, such as personally identifiable information (PII) or protected health information (PHI), with tokens to reduce security risks.
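
A minimal in-memory sketch of the idea follows: sensitive values are swapped for random tokens, and only the vault can map a token back. The class name and token format are hypothetical, and a real system would keep the mapping in a hardened, access-controlled store rather than a Python dictionary.

```python
import secrets


class TokenVault:
    """Toy token vault: maps sensitive values to random, meaningless tokens."""

    def __init__(self):
        self._token_to_value = {}
        self._value_to_token = {}

    def tokenize(self, value):
        # Reuse the existing token so the same value always maps to one token.
        if value in self._value_to_token:
            return self._value_to_token[value]
        # The token is random, so it reveals nothing about the original value.
        token = "tok_" + secrets.token_hex(8)
        self._token_to_value[token] = value
        self._value_to_token[value] = token
        return token

    def detokenize(self, token):
        # Only code with access to the vault can recover the original value.
        return self._token_to_value[token]
```

Downstream datasets and logs would then carry only the `tok_...` strings in place of PII or PHI.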

VPC endpoints

Allows access to SageMaker resources without traversing the public internet

Concept Drift

Use Cases for Bias Detection

- Compliance: Regulation, fairness opportunities

- Internal reporting: Auditing

- Operational excellence: Maintenance

- Customer service: Decisions regarding applications

Solutions:

- Amazon SageMaker Clarify: ML lifecycle Data Prep, Process and Analyze, Deploy, and Monitor, uses Model Bias detection and model explainability

- Monitoring daily/weekly/monthly (cron jobs / EventBridge)

- Built-in rules to detect data drift

- Automate corrective actions for alerts

- Periodic Retraining (Bias or change feature attributions)
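
To make the idea of a drift rule concrete, the sketch below flags a feature whose current mean has moved far from a training-time baseline. It is a deliberately crude heuristic and not one of the built-in monitoring rules; the function name and the threshold of 3 baseline standard deviations are assumptions.

```python
from statistics import mean, stdev


def mean_drift(baseline, current, threshold=3.0):
    """Return True if the current mean is far from the baseline mean.

    Distance is measured in baseline standard deviations (crude heuristic).
    """
    mu, sigma = mean(baseline), stdev(baseline)
    if sigma == 0:
        # A constant baseline: any change in the mean counts as drift.
        return mean(current) != mu
    return abs(mean(current) - mu) / sigma > threshold


# Hypothetical feature values captured at training time and in production.
baseline = [10, 11, 9, 10, 10, 11, 9, 10]
drifted = mean_drift(baseline, [15, 16, 14, 15])
stable = mean_drift(baseline, [10, 10, 11, 9])
```

A scheduled job could run a check like this per feature and raise an alert or trigger retraining when it returns `True`.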

Practical Data Science with Amazon SageMaker

- Lab 2: Training the Model

- Lab 4: Model Deployment

Work with SageMaker

Create a Domain:

A domain includes EFS, VPCs, users, security policies, and a home directory for each user.

Create Users:

Create a user, create a permission role, customize permissions, and configure SageMaker Studio settings.

Create a Notebook:

From the user or Studio console, choose Create Notebook and configure: image (framework, e.g. Data Science 3), instance type, kernel (e.g. Python 3), permissions, network, Git.

Open Jupyter Notebook:

Notebook editor, contains a menu to manage (File, Edit, View, Insert (cells), Cell ([run | markdown | Raw]), Kernel (execution engine), Widgets (Notebook state), and Help (links to usage and frameworks)), toolbar for shortcut icons, and an editor to edit cells.

SageMaker Studio

Web-based IDE for ML; a hub for all SageMaker tools used in the ML pipeline (Jupyter notebooks, ML models, frameworks, data science preparation with Data Wrangler, and others).

SageMaker Algorithms

- built-in models are pre-trained, but can be customized

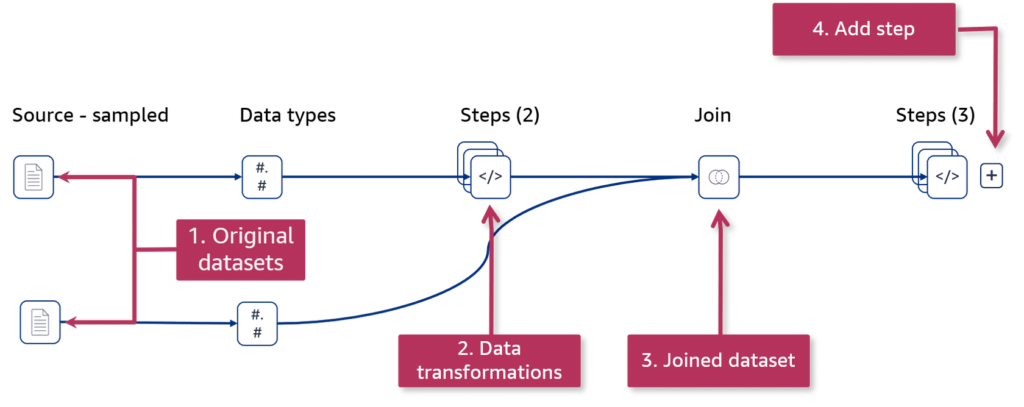

Data Preprocessing SageMaker Data Wrangler

- Select data from multiple data sources and semi-structured data formats.

- Automatically verify data quality and detect abnormalities.

- Understand data with visualization templates.

- Transform data with 300+ built-in transformations plus the ability to author custom transformations.

- Quickly estimate ML model accuracy and diagnose issues before models are deployed into production.

- Automate ML data preparation workflows.

- Use SageMaker Clarify to detect bias with Total Variation Distance (TVD), which measures the difference between the outcome distributions of different facets in the dataset, or Difference in Proportions of Labels (DPL), which measures the difference in the proportion of positive outcomes between facets.

- Custom Transforms: [ Python (Pandas), Python (PySpark), and SQL (PySpark SQL) ]
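
The two bias measures mentioned in the list above can be computed by hand on a small labeled dataset. The sketch below assumes two facets (groups) `a` and `d`, each represented by a plain list of labels; it uses the standard definition of total variation distance (half the sum of absolute differences between the two label distributions) and a simple difference in the proportion of positive labels. Function names and sample data are hypothetical.

```python
from collections import Counter


def label_distribution(labels):
    """Fraction of records carrying each label value."""
    counts = Counter(labels)
    return {label: count / len(labels) for label, count in counts.items()}


def total_variation_distance(labels_a, labels_d):
    """Half the L1 distance between the label distributions of two facets."""
    p_a, p_d = label_distribution(labels_a), label_distribution(labels_d)
    outcomes = set(p_a) | set(p_d)
    return 0.5 * sum(abs(p_a.get(y, 0.0) - p_d.get(y, 0.0)) for y in outcomes)


def difference_in_proportions(labels_a, labels_d, positive=1):
    """Proportion of positive labels in facet a minus that in facet d."""
    q_a = sum(1 for y in labels_a if y == positive) / len(labels_a)
    q_d = sum(1 for y in labels_d if y == positive) / len(labels_d)
    return q_a - q_d


# Hypothetical labels (1 = approved, 0 = rejected) for two facets.
facet_a = [1, 1, 1, 0]
facet_d = [1, 0, 0, 0]
tvd = total_variation_distance(facet_a, facet_d)
dpl = difference_in_proportions(facet_a, facet_d)
```

Both values are 0 when the two facets have identical label distributions and grow as the facets diverge.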

Training Jobs

- Compute Resources: ML AMI enabled

- Block Storage: S3 / EFS / FSx for Lustre

Booster Parameters

alpha: L1 regularization term on weights. Increasing this value makes models more conservative.

eta: Step size shrinkage used in updates to prevent overfitting. After each boosting step, you can directly get the weights of new features. The eta parameter shrinks the feature weights to make the boosting process more conservative.

max_depth: Maximum depth of a tree. Increasing this value makes the model more complex and more likely to overfit.

min_child_weight: Minimum sum of instance weight (hessian) needed in a child. If the tree partition step results in a leaf node with a sum of instance weight less than min_child_weight, the building process gives up further partitioning.

num_round: The number of rounds (trees) used for boosting. Increasing the trees can increase the model accuracy but increases the risk of overfitting.

Learning Parameters

objective: Defines the loss function to be minimized. There are objectives specific to regression, binary classification, and multiclass classification problems, for example binary:logistic, binary:logitraw, and binary:hinge.

eval_metric: Tied to the objective and used to evaluate the validation data, for example auc.
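
Put together, the booster and learning parameters above form one hyperparameter dictionary. The values below are illustrative assumptions, not recommendations, and the helper `check_hyperparameters` is a hypothetical sanity check; with the SageMaker Python SDK such a dictionary would typically be passed to an estimator via `set_hyperparameters(**xgb_hyperparameters)`.

```python
# Illustrative values only; tune them for a real dataset.
xgb_hyperparameters = {
    "objective": "binary:logistic",  # loss function for binary classification
    "eval_metric": "auc",            # metric computed on the validation data
    "num_round": 100,                # number of boosting rounds (trees)
    "max_depth": 5,                  # deeper trees are more complex
    "eta": 0.2,                      # step size shrinkage
    "min_child_weight": 1,           # minimum hessian sum in a child
    "alpha": 0.0,                    # L1 regularization on weights
}


def check_hyperparameters(hp):
    """Return a list of problems found in the dictionary (empty if none)."""
    problems = []
    if not 0 <= hp["eta"] <= 1:
        problems.append("eta must be in [0, 1]")
    if hp["max_depth"] < 0:
        problems.append("max_depth must be >= 0")
    if hp["num_round"] < 1:
        problems.append("num_round must be >= 1")
    if hp["alpha"] < 0:
        problems.append("alpha must be >= 0")
    if hp["min_child_weight"] < 0:
        problems.append("min_child_weight must be >= 0")
    return problems
```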

SageMaker Automatic Model Tuning (AMT)

Static hyperparameters: don’t change

target objective metric: to be optimized

Optimization options:

- Grid Search

- Random Search

- Bayesian Search

- Hyperband: for neural network (NN) based algorithms

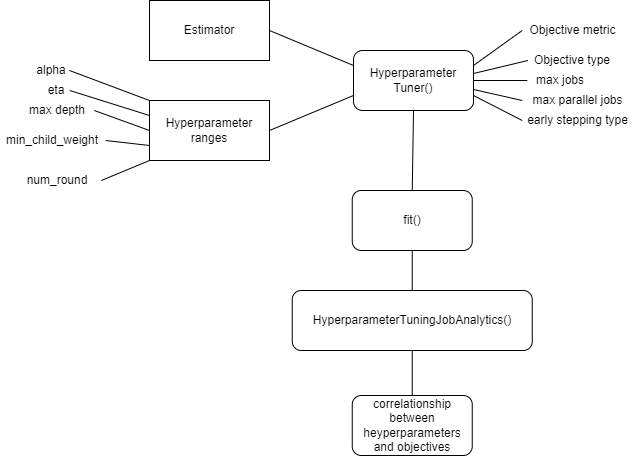

HyperparameterTuner()

- estimator: An estimator object that has been initialized with the required configuration. There does not need to be a training job associated with this instance. extendable to [ Transformer:= Batch | Predictor:= endpoint | MultiDataModel:= multi-models]

- objective_metric_name: Name of the metric for evaluating training jobs.

- hyperparameter_ranges: Dictionary of parameter ranges. These parameter ranges can be one of three types: Continuous, Integer, or Categorical. The keys of the dictionary are the names of the hyperparameter, and the values are the appropriate parameter range class to represent the range.

- objective_type: The type of the objective metric for evaluating training jobs. This value can be either ‘Minimize’ or ‘Maximize’ (default: ‘Maximize’).

- max_jobs: Maximum total number of training jobs to start for the hyperparameter tuning job. The default value is unspecified for the ‘Grid’ strategy, and the default value is 1 for all other strategies (default: None).

- max_parallel_jobs: Maximum number of parallel training jobs to start (default: 1).

- early_stopping_type: Specifies whether early stopping is enabled for the job. Can be either ‘Auto’ or ‘Off’ (default: ‘Off’). If set to ‘Off’, early stopping will not be attempted. If set to ‘Auto’, early stopping of some training jobs might happen, but is not guaranteed to.
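
To show how these arguments interact, here is a plain-Python sketch of the Random Search strategy. It is a stand-in written for illustration and not the SageMaker `HyperparameterTuner` API: `train_fn` is a hypothetical callable that plays the role of a training job and returns the objective metric, and the tuple-based range format is an assumption.

```python
import random


def random_search(train_fn, ranges, max_jobs=10,
                  objective_type="Maximize", seed=0):
    """Sample max_jobs configurations and keep the best objective value."""
    if objective_type not in ("Maximize", "Minimize"):
        raise ValueError("objective_type must be 'Maximize' or 'Minimize'")
    rng = random.Random(seed)
    best_params, best_score = None, None
    for _ in range(max_jobs):
        params = {}
        for name, spec in ranges.items():
            kind = spec[0]
            if kind == "continuous":
                params[name] = rng.uniform(spec[1], spec[2])
            elif kind == "integer":
                params[name] = rng.randint(spec[1], spec[2])
            elif kind == "categorical":
                params[name] = rng.choice(spec[1])
            else:
                raise ValueError(f"unknown range type: {kind}")
        score = train_fn(params)  # one "training job"
        if best_score is None:
            improved = True
        elif objective_type == "Maximize":
            improved = score > best_score
        else:
            improved = score < best_score
        if improved:
            best_params, best_score = params, score
    return best_params, best_score
```

A call might look like `random_search(train_fn, {"eta": ("continuous", 0.0, 1.0), "max_depth": ("integer", 3, 8)}, max_jobs=20)`; Bayesian search differs in that it uses earlier results to choose the next configuration instead of sampling independently.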

Hyperparameter Tuning

- f1: measures model accuracy, accounting for false positives (FP) and false negatives (FN)

- map (Mean Average Precision)

- ndcg (Normalized Discounted Cumulative Gain)

- rmse (Root Mean Square Error): amplifies large errors

- mae (Mean Absolute Error): disregards the sign (+/-) of errors

- ssd (Sum of the squared Distances):

- msd (Mean Squared Distances):
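
Three of these metrics are simple enough to compute by hand, which helps when reading tuning results. The sketch below uses the standard textbook definitions; the function names and sample values are chosen for illustration.

```python
import math


def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def rmse(y_true, y_pred):
    """Root mean square error: squaring amplifies large errors."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def mae(y_true, y_pred):
    """Mean absolute error: the sign of each error is ignored."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)
```

With one large miss, as in `rmse([2, 2, 2, 2], [2, 2, 2, 6])` versus `mae([2, 2, 2, 2], [2, 2, 2, 6])`, the RMSE is larger than the MAE because the single error is squared before averaging.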

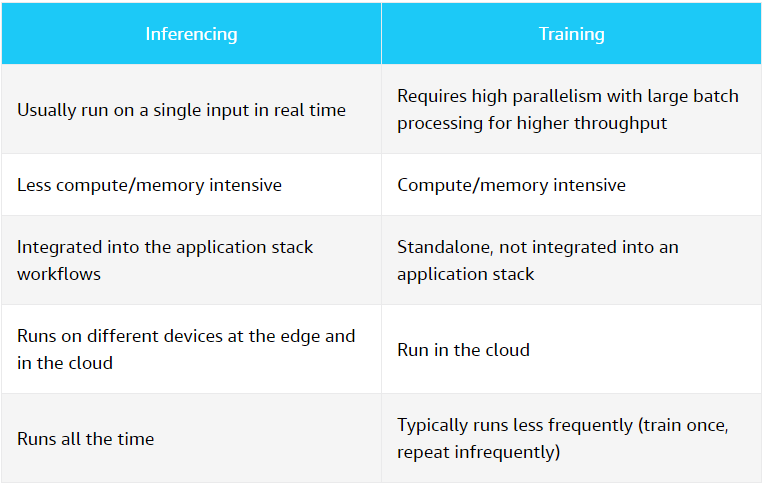

Inferencing vs. training

Inference requires autoscaling

Machine Learning instance types for the purpose

AWS Inferentia: low-cost, purpose-built inference acceleration with FP16 performance; up to 16 first-generation Inferentia accelerators per EC2 Inf1 instance and up to 12 second-generation Inferentia accelerators per EC2 Inf2 instance (190 TFLOPS)

Standalone GPU instances, suitable for model training, oversized for inferences.

Factors to determine the right instance:

- Target Latency SLA

- Constraints

- Start small and size up as needed

- Use Fp16 for lower latency and higher throughput

MLOps

A combination of Machine Learning and Operations: combines people, technology, and processes to deliver collaborative ML solutions.

MLOps requires the integration of software development, operations, data engineering, and data science.

MLOps ensures the operability of ML workloads by automating and orchestrating the data CI/CD pipelines. Tools include AWS Glue (ETL), SageMaker Pipelines, Step Functions, and Apache Airflow.

Use separate pipelines for training and deployments.

Deploying a model creates a new model version in the staging environment, pending approval; use CodePipeline to approve and deploy it to the production endpoint.

Clustering Algorithm, groups data into different clusters based on similarities in features.

Anomaly Detection:

Each layer summarizes and feeds information to the next layer, ultimately producing final output

Deep Learning Computer Vision
